You want more compute in less rack, with less noise, fewer cables, and zero drama in ops. A dual-node chassis does that by sharing power, cooling, and space across two independent systems. Below is a plain-English take you can skim, plus tables and real-world use cases. I’ll point to IStoneCase pages where it makes sense.

Dual-Node Server Case

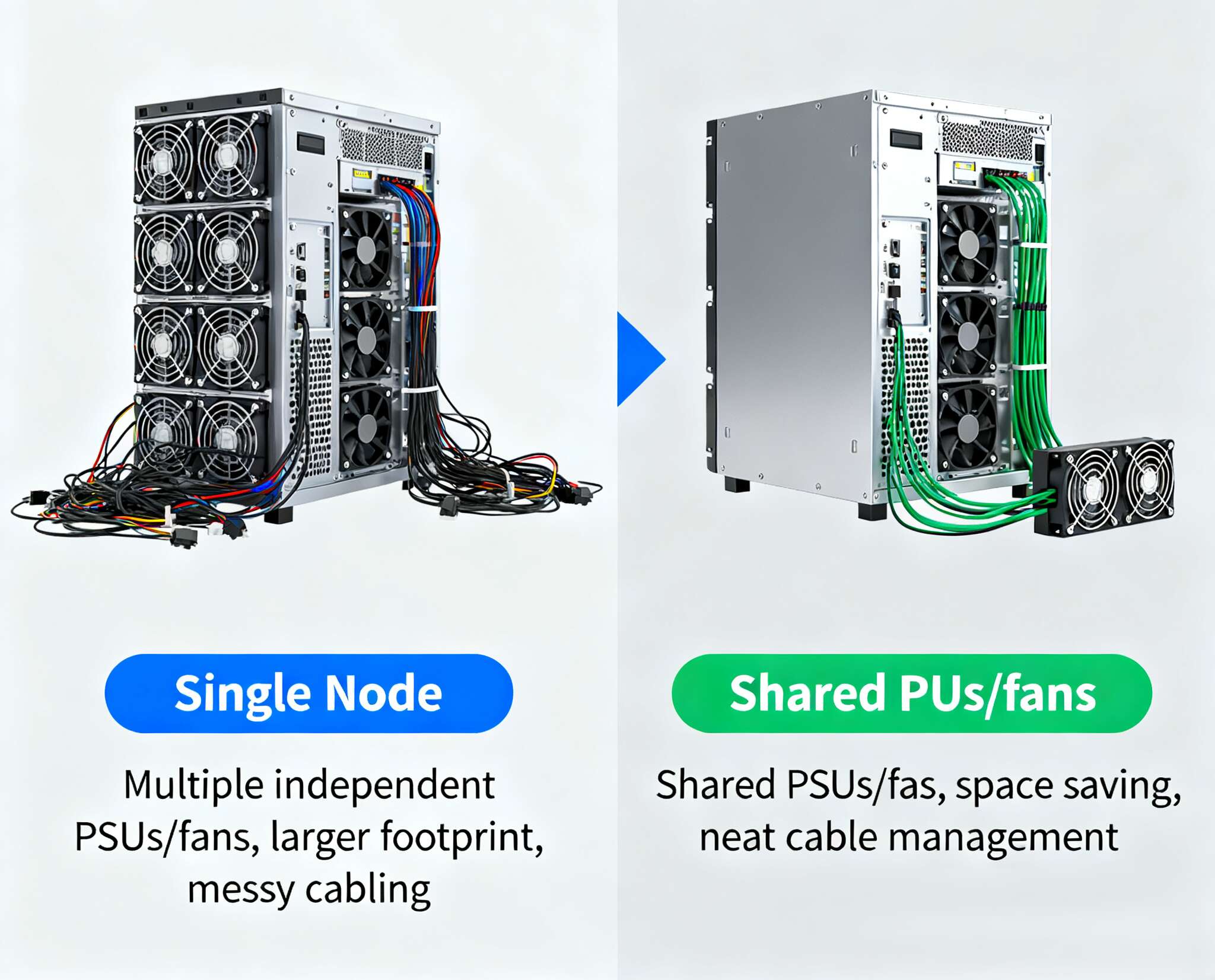

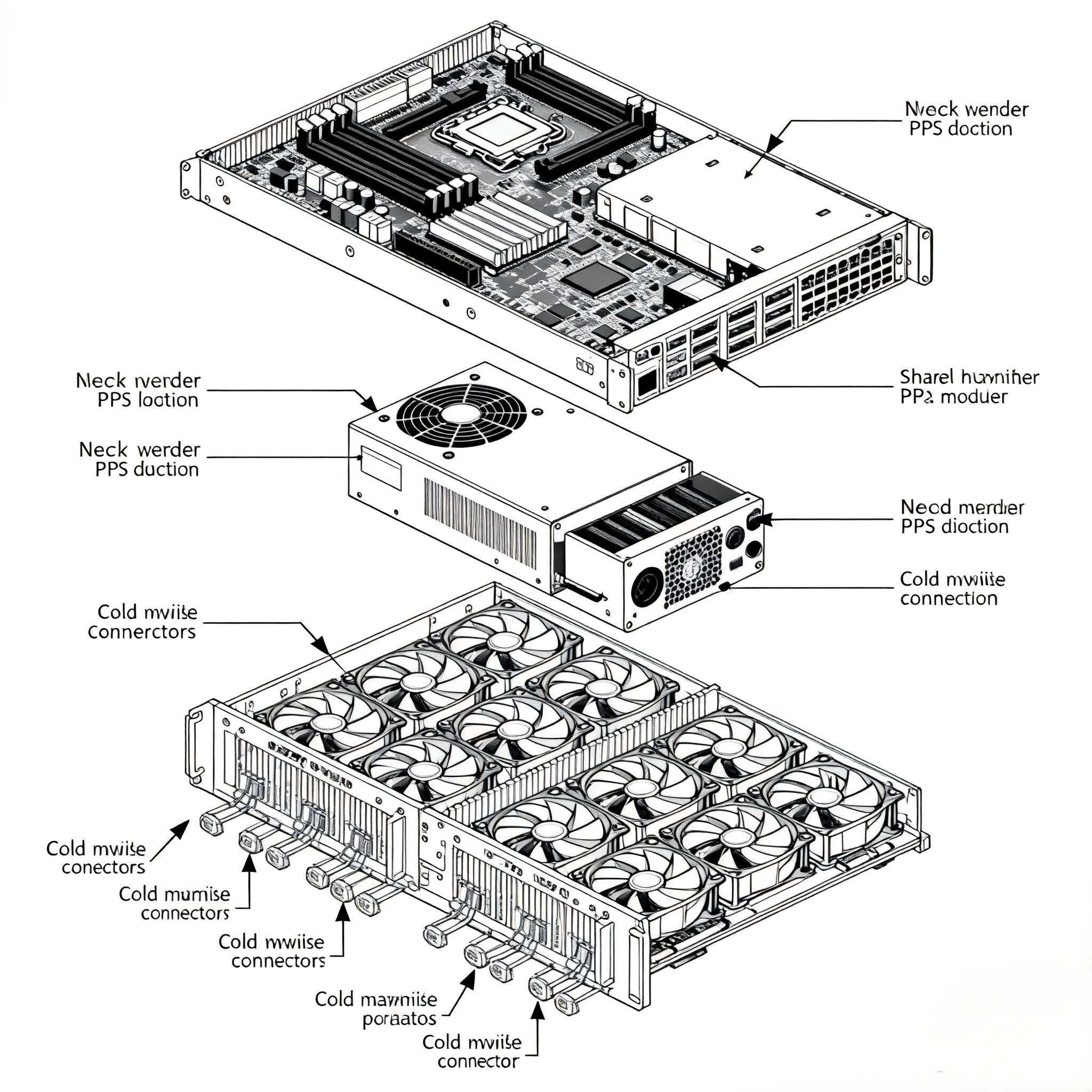

A dual-node server case packs two hot-swappable nodes into one enclosure. They still boot alone, fail alone, and get patched alone. But they share the heavy stuff—PSUs, fans, and airflow guides—so the whole box runs leaner. If you’re shopping, start here: Dual-Node Server Case.

Why people pick this layout:

- Higher density per U in a server rack pc case.

- Shared power and fans reduce part count; spares get simpler.

- Cables and PDUs stay tidy; less spaghetti, better airflow in the cold aisle.

Dual-Node Server Case 2400TB

Storage folks love capacity without a bigger footprint. IStoneCase’s Dual-Node Server Case 2400TB showcases the idea: dual controllers, shared chassis, serious bays. You get HA-style thinking in a compact computer case server form factor, ideal for backup tiers, video lakes, and log retention where east-west traffic is loud.

Shared PSUs in a server pc case

Two nodes. One PSU module set. Each node gets independent rails and firmware control, yet both benefit from N+1 or 1+1 redundancy at the chassis level. That cuts the number of PSU bricks, lowers PDU outlet usage, and keeps your power budget sane. In day-to-day ops it means:

- Fewer power cords dangling behind the rack.

- Easier dual-feed power (A/B) to upstream PDUs.

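- Better conversion efficiency at typical loads: two nodes drawing from one PSU set keep each supply further up its load curve, instead of idling at light load where efficiency drops.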

It’s not magic, just less duplication. If you need a variant or a different connector set, the Customization Server Chassis Service covers OEM/ODM tweaks.
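Here’s a rough way to sanity-check a shared PSU set before you buy. It’s a minimal Python sketch, not IStoneCase tooling: `PsuConfig`, `check_power`, and every wattage are hypothetical placeholders. It assumes the modules share load evenly and that `redundant` means how many modules can drop out while the rest still carry both nodes.

```python
from dataclasses import dataclass


@dataclass
class PsuConfig:
    """Hypothetical chassis PSU setup; every number you pass in is illustrative."""
    modules: int         # PSU modules installed in the chassis
    rating_w: float      # output rating per module, in watts
    redundant: int = 1   # modules that may fail while the rest still carry the load


def check_power(cfg: PsuConfig, node_loads_w: list[float]) -> dict:
    """Total the node loads, test them against the redundancy policy, and report per-module load."""
    total = sum(node_loads_w)
    # Capacity that remains after the allowed number of modules drop out.
    surviving_capacity = (cfg.modules - cfg.redundant) * cfg.rating_w
    # Assumes even load sharing across all healthy modules.
    load_fraction = total / (cfg.modules * cfg.rating_w)
    return {
        "total_w": total,
        "survives_failure": total <= surviving_capacity,
        "load_fraction_per_psu": round(load_fraction, 3),
    }


# Hypothetical 1+1 setup: two 1600 W modules feeding nodes at 450 W and 520 W.
print(check_power(PsuConfig(modules=2, rating_w=1600), [450, 520]))
# {'total_w': 970, 'survives_failure': True, 'load_fraction_per_psu': 0.303}
```

In that example each module sits around 30% load with both healthy, and one module alone can still carry 970 W. For A/B feeds, set `redundant` to the number of modules on one feed, since losing a feed takes all of them out at once.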

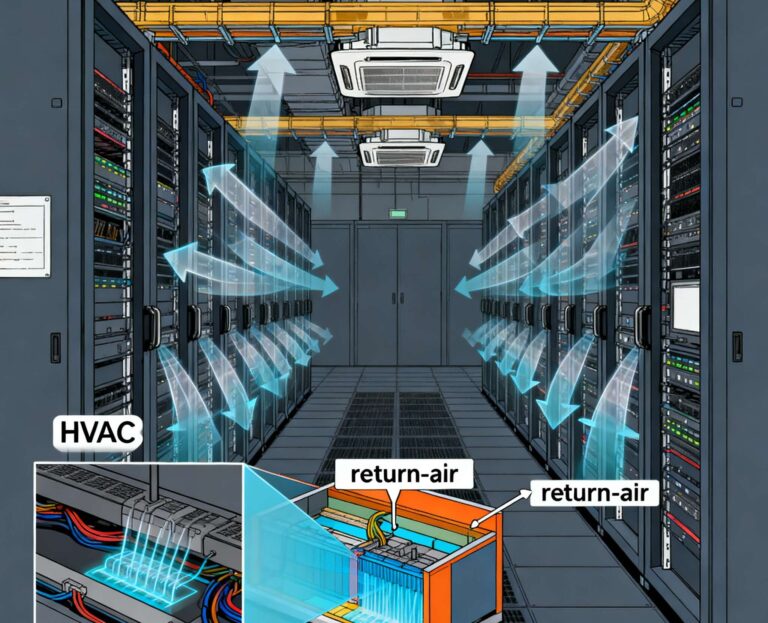

Shared fans and thermal control

Big, directed airflow beats a pile of tiny whiners. Shared fans in a dual-node chassis move air across both nodes with tuned ducts. The BMC can hold a smarter fan curve, so fans don’t scream at 3 a.m. when one DIMM gets warm. You get:

- Lower acoustic profile for edge closets.

- More consistent inlet-to-outlet delta-T; happier drives and VRMs.

- Less dust recirculation; filters last longer.

Minor gotcha: plan your hot-swap paths. Some designs place PSUs mid-rear; make sure you still have easy node pull/insert with your PDU layout. Measure twice, rack once.
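What does a “smarter fan curve” look like? Vendor BMC logic differs and usually isn’t public, so treat this as a hypothetical Python sketch of one common pattern: interpolate a duty cycle from the hottest sensor, then cap how far the duty can move per control tick so one warm DIMM ramps the fans gradually instead of spiking them. The breakpoints in `CURVE` and the 5% step are made-up example values.

```python
# Hypothetical fan curve: (temperature in °C, fan duty in %) breakpoints, sorted by temperature.
CURVE = [(30.0, 20.0), (50.0, 35.0), (65.0, 60.0), (80.0, 100.0)]


def duty_for(temp_c: float, curve=CURVE) -> float:
    """Linearly interpolate fan duty for a temperature, clamped at both ends of the curve."""
    if temp_c <= curve[0][0]:
        return curve[0][1]
    if temp_c >= curve[-1][0]:
        return curve[-1][1]
    for (t0, d0), (t1, d1) in zip(curve, curve[1:]):
        if t0 <= temp_c <= t1:
            return d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0)
    raise ValueError("curve breakpoints must be sorted by temperature")


def next_duty(current: float, sensor_temps: dict[str, float], max_step: float = 5.0) -> float:
    """Target the hottest sensor's duty, but move at most max_step percent per control tick."""
    target = duty_for(max(sensor_temps.values()))
    step = max(-max_step, min(max_step, target - current))
    return current + step
```

Starting at 20% with a DIMM at 70 °C, `next_duty(20.0, {"dimm": 70.0, "cpu": 55.0})` returns 25.0; the fans keep stepping toward the curve’s roughly 73% target on later ticks rather than jumping there at once.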

Space and cabling in a server rack pc case

Dual-node means you consolidate rails, trays, cable runs, even labels. Top-of-rack stays clean: fewer patch cords, fewer uplinks if you aggregate per node. It just fits better in the rack, honestly. For remote sites, that neatness shrinks “truck-roll time” when someone has to swap a node Friday night.

Real-world use cases (not theory)

- Virtualization & VDI: Pair two nodes for HA at the host layer. Shared fans reduce noise; shared PSUs simplify A/B power. Drop it in a 600 mm deep server rack pc case row and keep ToR wiring boring on purpose.

- AI inferencing at the edge: Compact footprint, GPUs per node (where thermals allow), OOB management on both. Cooling headroom matters; shared fans help when the model spikes for a few minutes, not hours.

- HPC/Dev clusters: Many small nodes beat one giant host for scheduler throughput. Dual-node keeps density up while keeping field service easy.

- Storage controllers: With the Dual-Node Server Case 2400TB you can run dual controllers on a shared backplane with no extra chassis. Perfect for camera fleets, lab data, or cold logs.

- Branch data center / micro-POP: Low RU count, dual power feeds, quiet enough to live near people. Fans do less work at idle; power stays tidy.

Comparison: atx server case vs dual-node

ATX server case builds are flexible and cheap to start, sure. But once you scale beyond a couple of hosts, duplicated PSUs and fans add clutter. Dual-node flips that: you share the platform pieces yet keep node independence for patch windows and rollbacks. If you still need an ATX-based design for a special board or rail, ask the Customization Server Chassis Service and make it fit your BOM instead of fighting it.
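A quick tally makes the duplication point concrete. This Python sketch assumes, purely for illustration, that every chassis carries the same PSU and fan count whether it holds one node or two; real dual-node chassis may use fewer, larger fans or a different PSU layout, so plug in numbers from actual spec sheets. It also assumes one PDU outlet per PSU cord. `part_count` is a hypothetical helper.

```python
import math


def part_count(hosts: int, nodes_per_chassis: int,
               psus_per_chassis: int, fans_per_chassis: int) -> dict[str, int]:
    """Count chassis-level parts needed to house `hosts` nodes (illustrative only)."""
    chassis = math.ceil(hosts / nodes_per_chassis)
    psus = chassis * psus_per_chassis
    return {
        "chassis": chassis,
        "psus": psus,
        "fans": chassis * fans_per_chassis,
        "pdu_outlets": psus,  # assumes one power cord per PSU module
    }


# Hypothetical: eight hosts, two redundant PSUs and four fans per chassis either way.
standalone = part_count(8, nodes_per_chassis=1, psus_per_chassis=2, fans_per_chassis=4)
dual_node = part_count(8, nodes_per_chassis=2, psus_per_chassis=2, fans_per_chassis=4)
print(standalone)  # {'chassis': 8, 'psus': 16, 'fans': 32, 'pdu_outlets': 16}
print(dual_node)   # {'chassis': 4, 'psus': 8, 'fans': 16, 'pdu_outlets': 8}
```

Under those assumptions, halving the chassis count halves PSUs, fans, and outlets; the real ratio depends on the parts each design actually ships with.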

Quick proof table (claims, meaning, source)

| Claim | What it means in practice | Where to check |
|---|---|---|
| Shared PSUs reduce outlets and spares | Fewer PSU modules and PDU sockets; easier dual-feed power; less cable clutter | Dual-Node Server Case |
| Shared fans = lower noise and steadier temps | Single airflow path with tuned curves; less fan thrash; safer VRMs and drives | Dual-Node Server Case |
| Higher density per RU | Two nodes in one shell; better use of rails and vertical space | Dual-Node Server Case |
| Dual controllers in one chassis | HA-style storage without an extra box; simpler cabling | Dual-Node Server Case 2400TB |
| OEM/ODM fit to your stack | Custom backplanes, front panels, rails, and airflow guides | Customization Server Chassis Service |

Ops notes (the stuff people forget)

- Power policy: Decide N+1 vs 1+1. Label PSU inputs A/B so nobody cross-plugs.

- Airflow: Verify front-to-back. Don’t mix reverse-airflow gear in the same row; it breaks hot/cold aisle separation.

- Service path: Check you can pull a node with PDUs mounted; leave finger room.

- OOB: Give each node its own management IP. Keep both nodes on the same firmware baseline instead of a mix of versions.

- Monitoring: Alert on fan RPM bands, not just fail states. That catches the “almost bad” fans.

- Noise: For office closets, shared fans usually mean fewer spikes. Still, measure dBA in your environment.
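Here’s what “alert on bands” can mean in practice. This hypothetical Python sketch compares each fan against the median of its running siblings, on the assumption that fans in one shared zone run at the same duty cycle. The 15% band and 500 RPM floor are placeholder thresholds, and pulling readings from each node’s BMC (IPMI, Redfish, or vendor tooling) is left out.

```python
from statistics import median


def fan_alerts(readings: dict[str, float], band_pct: float = 15.0,
               fail_rpm: float = 500.0) -> dict[str, str]:
    """Flag fans that fall outside a band around the median of their running peers.

    Assumes every fan in `readings` sits in the same zone and runs at the same duty,
    so a healthy fan should track its siblings closely.
    """
    running = [rpm for rpm in readings.values() if rpm >= fail_rpm]
    reference = median(running) if running else None
    status = {}
    for name, rpm in readings.items():
        if rpm < fail_rpm or reference is None:
            status[name] = "fail"  # stopped or below the hard floor
        elif abs(rpm - reference) / reference * 100 > band_pct:
            status[name] = "warn"  # "almost bad": spinning, but drifting from its peers
        else:
            status[name] = "ok"
    return status
```

With readings of 8000, 8100, 6500, 0, and 7900 RPM, the 6500 RPM fan comes back as `warn` and the stopped one as `fail`; a plain fail-state check would only have caught the second.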

Buyer checklist (fast)

- Workload type (VMs, AI inferencing, object store?).

- Drive mix and bay count for your computer case server plan.

- PCIe lanes and risers per node; leave headroom for NICs or accelerators.

- Rail depth and weight in the server pc case row; don’t guess.

- PDU layout and cord types.

- Spare fans and PSU SKUs; fewer, standardized, labeled.

- Service model: hot-swap trays, tool-less lids, clear LEDs.

- Need custom bits? Ping Customization Server Chassis Service.
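For the PCIe line item, a lane tally per node is a decent first pass. This Python sketch is an approximation under stated assumptions: the 64-lane budget, the 16-lane headroom goal, and the device list are hypothetical, and it just sums link widths. Real boards wire fixed-width slots and risers, and some lanes go to onboard devices, so confirm against the motherboard’s block diagram.

```python
def lane_budget(cpu_lanes: int, devices: dict[str, int], headroom_goal: int = 16) -> dict:
    """Sum PCIe link widths for one node and check spare lanes against a headroom goal."""
    used = sum(devices.values())
    spare = cpu_lanes - used
    return {"used": used, "spare": spare, "meets_goal": spare >= headroom_goal}


# Hypothetical node: one x16 accelerator, one x16 NIC, four x4 NVMe drives.
node = {"gpu0": 16, "nic0": 16, "nvme0": 4, "nvme1": 4, "nvme2": 4, "nvme3": 4}
print(lane_budget(64, node))  # {'used': 48, 'spare': 16, 'meets_goal': True}
```

If `meets_goal` comes back False, that’s the cue to rethink the riser layout before the order, not after.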

Why IStoneCase here (short pitch, no fluff)

IStoneCase — The World’s Leading GPU/Server Case and Storage Chassis OEM/ODM Solution Manufacturer. That mouthful boils down to three things: solid metalwork, thermal discipline, and real customization. We ship GPU server cases, classic server case designs, rackmount and wallmount frames, NAS devices, ITX case options, and chassis guide rail kits for clean installs. If you need a dual-node now and a special backplane later, we don’t make you start over. We tune it. We build it. We ship it in batches for data centers, AI labs, MSPs, and plain old dev teams.

Want a friendly starting point? See Dual-Node Server Case for the platform, add capacity with Dual-Node Server Case 2400TB, or request changes via Customization Server Chassis Service. If you prefer a single-board atx server case build for one-off labs, we can adapt rails and airflow pieces there too.

Extra keyword pointers (so you can find the right thing fast)

- Looking for a server rack pc case that isn’t a furnace? Start with Dual-Node Server Case.

- Need a server pc case with fewer PSUs to manage? Dual-node helps; see the Customization Server Chassis Service for power options.

- Building a computer case server for storage? The Dual-Node Server Case 2400TB is your short list.

- Trying to keep an atx server case but want dual-node-like neatness? Custom rails and airflow kits via the Customization Server Chassis Service can get you most of the way.

Bottom line: share the right parts (PSUs, fans, space), keep nodes independent, and your racks run quieter, cleaner, and easier to scale. Not perfect for every shop, but for many teams, it’s the neat way to grow without blowing up power and cabling.