If you run GPU workloads today, you already feel it: training boxes and inference boxes don't behave the same.

So it makes zero sense to put both into the same chassis by default.

Below we walk through the real stuff that decides your choice of server rack pc case or server pc case for each workload, with concrete points, one table, and some real-world scenes.

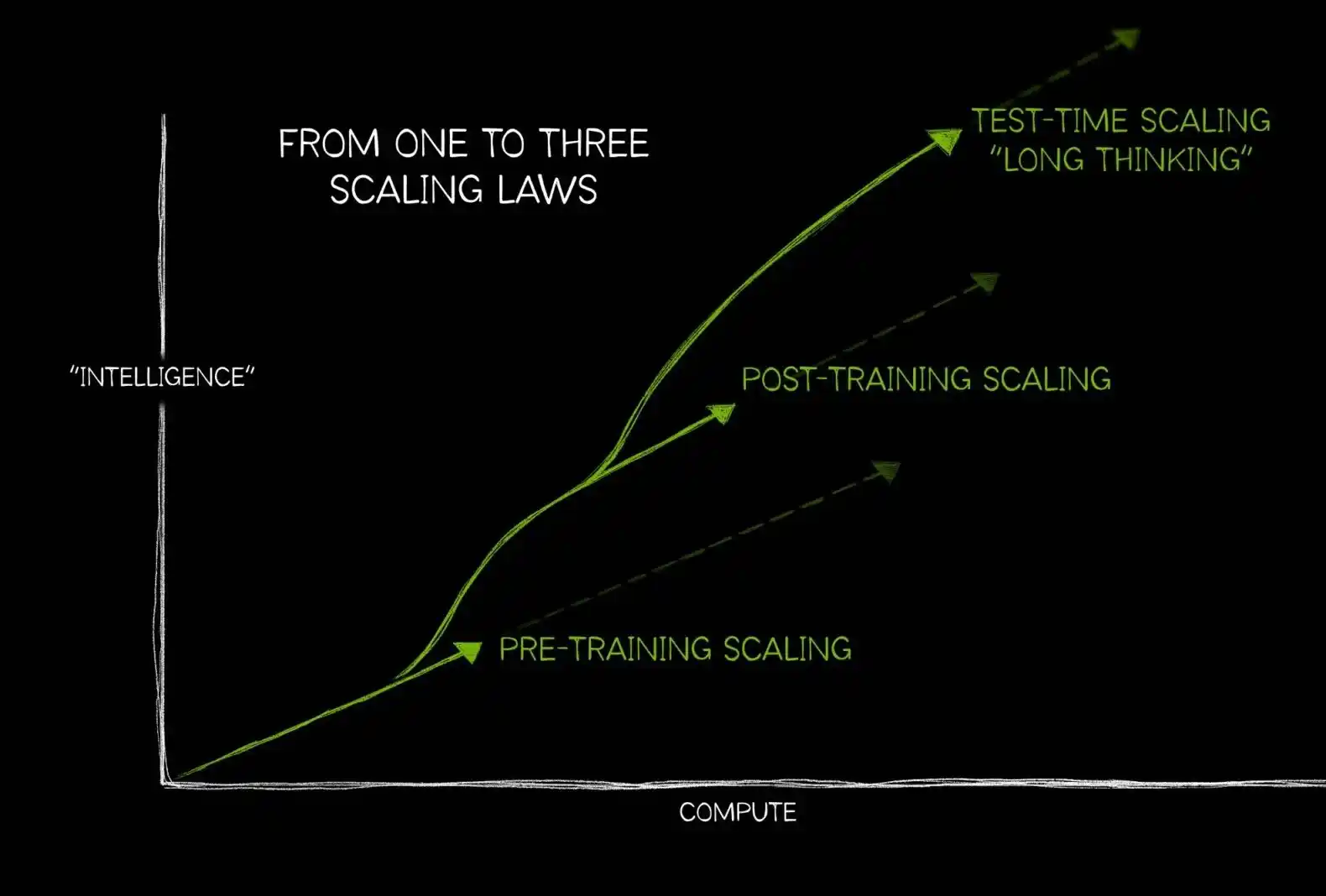

AI Training vs Inference: Different Loads, Different Server PC Case Choices

Let’s keep it simple.

- Training systems
  - Long jobs, days or weeks.
  - Heavy math, big datasets streaming all the time.
  - Goal: finish epochs faster, squeeze every bit from the GPUs.

- Inference systems
  - Short requests, many users.
  - Each call is small, but QPS is high.
  - Goal: low latency, stable SLA, good cost per request.

Because of that, you rarely design the same computer case server for both:

- Training wants dense GPU nodes inside a deep rack case, with high airflow and a very large power budget.

- Inference often wants slimmer 1U/2U nodes or even edge boxes, easy to roll out in many sites.

For customers like data centers, algorithm centers, large enterprises, IT service providers and even hobby devs, this difference hits daily O&M life: thermal alarms, noisy gear, hard-to-service boxes, cable jungles… many of these start from the wrong chassis choice.

GPU Density and Thermal Design in a Server Rack PC Case

When you talk training, you talk GPU density and thermal envelope first.

The chassis must survive that, not only pass spec on paper.

Training Systems: Many GPUs, Heavy Heat in a Server Rack PC Case

Typical training node:

- 4–8 full-length, full-height GPUs.

- Very deep server rack pc case, often 4U and above.

- High front-to-back airflow, a fan wall, possibly liquid-cooling ready.

- Clean internal layout for riser cards and cabling, otherwise you choke your own airflow.

What the chassis must offer:

- Strong front intake, big fan wall in the middle, straight air tunnel across all GPUs.

- Room for big PSUs and copper.

- Stiff structure, because a fully populated GPU server case is heavy and you don't want rails bending.

This is where a specialized rackmount case from a manufacturer like IStoneCase makes sense. You need someone who designs around GPU length, PCIe risers and cable routing, not just “it fits on paper”.

Inference Systems: Leaner Compute and Mixed Workloads

Inference nodes are more diverse:

- Sometimes 1–2 GPUs plus fast CPU.

- Sometimes pure CPU, but lots of memory and fast NVMe.

- Often 1U/2U or short-depth chassis if you deploy in edge rooms.

Here the box:

- Can be slimmer, but still needs directed airflow over whatever GPUs it carries.

- Needs more I/O options (extra NICs, serial, maybe legacy ports) for integration with other gear.

- Should be easier to maintain, because you may have a lot of small sites, not only one big DC.

A compact server pc case or ITX case with good front I/O and cable management already solves many pain points for MSPs and small IT teams.

Training vs Inference Chassis: Key Differences at a Glance

You can drop this table into internal docs or your own deck.

| Aspect | AI Training System Chassis | AI Inference System Chassis |
|---|---|---|
| Main goal | Max throughput, finish epochs faster | Low latency, high QPS, stable service |
| Typical form factor | 4U (or higher) computer case server with deep rack depth | 1U / 2U, short-depth, sometimes wallmount or ITX |
| GPU count | 4–8 high-power GPUs per node | 0–2 GPUs, sometimes many light nodes instead |
| Airflow | Big front intake, fan wall, strict front-to-back airflow, maybe liquid loop ready | Focused airflow on fewer hot spots, noise and dust control for edge |
| Power | Massive PSUs, high peak draw, heavy busbars | Moderate PSUs, focus on efficiency and easy wiring |
| Storage layout | Many hot-swap bays for data loading and checkpoints | Few fast NVMe/M.2 for model weights and logs |
| Network | Multiple high-speed NICs for east-west traffic between nodes | More ports for north-south traffic, load balancers, edge devices |
| Serviceability | Often managed by pro DC teams, longer MTTR is still tolerated | Needs fast swap for fans, PSUs and disks, low MTTR at branch sites |
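If you want the comparison in a form that internal tooling can read, it can be captured as a small lookup. This is only a sketch: the names `ChassisProfile`, `PROFILES` and `check_node` are hypothetical, and the only data used is the form factor and GPU range from the table above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChassisProfile:
    """One column of the comparison table, reduced to a few fields."""
    workload: str
    form_factor: str
    gpu_min: int
    gpu_max: int


# Values copied from the table; the structure and names are illustrative only.
PROFILES = {
    "training": ChassisProfile("training", "4U or higher, deep rack depth", 4, 8),
    "inference": ChassisProfile("inference", "1U / 2U, short-depth, sometimes wallmount or ITX", 0, 2),
}


def check_node(workload: str, gpu_count: int) -> str:
    """Return the typical form factor, or a warning if the GPU count is unusual."""
    profile = PROFILES[workload]
    if profile.gpu_min <= gpu_count <= profile.gpu_max:
        return f"{profile.form_factor} (typical for {workload})"
    return (
        f"{gpu_count} GPUs is outside the typical "
        f"{profile.gpu_min}-{profile.gpu_max} range for {workload}; review the chassis choice"
    )


print(check_node("training", 8))
print(check_node("inference", 4))
```

A check like this does not pick a chassis for you. It only flags a planned node that falls outside the typical ranges, which is usually the moment to talk to the chassis vendor.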

Power, Cooling and Layout Inside a Computer Case Server

Power and cooling are where many projects get painful.

Training Boxes: Big PSUs, Ugly Cables if Design Is Bad

For training:

- PSUs are big and heavy.

- Cables are thick.

- GPU connectors eat space fast.

If the chassis doesn’t plan PSU location and cable channels, you get:

- Blocked airflow.

- Hot spots near VRMs.

- Hard to close the side panel after a tiny upgrade.

An OEM/ODM builder like IStoneCase can tweak:

- PSU position (front, rear, dual PSU)

- Cable routing holes and tie-down points

- Extra space near PCIe risers

That way, your high-density computer case server is still serviceable after the 10th field modification.
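To get a feel for how big "big PSUs" can get, a rough power budget helps before you pick PSU bays. The sketch below is back-of-envelope arithmetic, not a sizing method from any vendor: the function names are hypothetical, and every wattage, the 20% headroom and the N+1 redundancy rule are assumptions you should replace with figures from your own component datasheets.

```python
import math


def required_psu_watts(gpu_count: int, gpu_watts: int, base_watts: int,
                       headroom_pct: int = 20) -> int:
    """Estimated PSU capacity: component draw plus a safety margin."""
    total = gpu_count * gpu_watts + base_watts
    return math.ceil(total * (100 + headroom_pct) / 100)


def psus_needed(required_watts: int, psu_watts: int, redundant: bool = True) -> int:
    """PSUs needed to carry the load, plus one spare if N+1 redundancy is wanted."""
    count = math.ceil(required_watts / psu_watts)
    return count + 1 if redundant else count


# Hypothetical 8-GPU training node: 350 W per GPU (assumed),
# 800 W for CPUs, memory, fans and drives (assumed).
need = required_psu_watts(gpu_count=8, gpu_watts=350, base_watts=800)
print(need)                     # 4320
print(psus_needed(need, 2000))  # 4 (3 to carry the load, 1 spare)
```

Even with these made-up numbers, the result shows why PSU position and cable channels have to be planned with the chassis: four PSU bays and their cabling take real space.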

Inference Boxes: Cooling for Real World, Not Only Lab

Inference nodes live in places like:

- Small office racks with messy cable management.

- Edge closets with poor hot/cold aisle separation.

- Retail or industrial sites with dust and vibration.

Here, the chassis must:

- Use dust filters and an easy-to-clean front panel.

- Support quieter fans, or at least fan curve tuning.

- Keep clear paths around hot GPU/CPU areas even in 1U.
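Fan curve tuning, mentioned in the list above, usually means mapping a temperature reading to a fan duty cycle. Here is a minimal sketch of a piecewise-linear curve. The curve points and the names `FAN_CURVE` and `fan_duty` are hypothetical, and a real deployment would set the curve through the BMC or firmware of the specific board, which this example does not touch.

```python
from bisect import bisect_right

# Hypothetical curve: (temperature in °C, fan duty in %). Tune per site.
FAN_CURVE = [(30, 20), (50, 40), (70, 70), (85, 100)]


def fan_duty(temp_c: float, curve=FAN_CURVE) -> float:
    """Linearly interpolate fan duty between the two nearest curve points."""
    temps = [t for t, _ in curve]
    if temp_c <= temps[0]:
        return float(curve[0][1])   # below the curve: hold the quiet minimum
    if temp_c >= temps[-1]:
        return float(curve[-1][1])  # above the curve: run flat out
    i = bisect_right(temps, temp_c)
    (t0, d0), (t1, d1) = curve[i - 1], curve[i]
    return d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0)


print(fan_duty(25))  # 20.0
print(fan_duty(60))  # 55.0
print(fan_duty(90))  # 100.0
```

A flatter low end keeps an office or retail box quiet at idle, while the steep top end still protects the GPU when the closet gets hot.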

You might pick a short-depth ATX server case for a branch rack, with enough rear space for cables and a simple chassis guide rail kit to pull the box out in seconds. That small detail makes O&M teams much happier.

From Data Center to Edge: Rackmount, Wallmount and ITX Server Case Options

Not every load runs in a big data center.

This is where different chassis families come into play.

Rackmount Cases for Training and Heavy Inference

For a big data center or algorithm center:

- A 19″ rackmount case is the baseline.

- 4U deep chassis for training nodes.

- 1U/2U cluster of inference nodes under the same top-of-rack switch.

You usually combine:

- GPU training boxes in 4U form factor.

- API nodes, gateways, databases in 1U/2U.

- Shared storage in dedicated NAS or JBOD chassis.

A vendor like IStoneCase can supply both the heavy GPU server case and lighter server rack pc case for the surrounding services, with the same faceplate style so your rack looks clean and “same family”.

Wallmount and NAS Devices for Edge Inference

For medium-sized businesses, retail, smart buildings, even research labs:

- Wallmount boxes keep gear away from the floor, good against dust and “random kicking”.

- Small NAS devices handle local logs, video, telemetry.

- An edge inference node runs inside a compact server pc case or NAS-style chassis with one GPU.

This gives you:

- Short path from camera or sensor to inference node.

- Less dependency on WAN.

- Simple on-site maintenance, because the whole box is reachable without sliding out a heavy 4U.

ITX and Small Form Factor for Developers and POCs

Dev teams and technical enthusiasts love small but serious boxes:

- Mini-ITX or micro-ATX boards.

- One decent GPU.

- Quiet enough to sit under the desk.

A well-built ITX case lets you run real training on small datasets and realistic inference benchmarks without filling a full rack. Later, when you scale into the data center, you port the workload to a bigger server rack pc case design.

How IStoneCase Supports AI Training and Inference Chassis Projects

IStoneCase positions itself as:

“The World’s Leading GPU/Server Case and Storage Chassis OEM/ODM Solution Manufacturer”

In practice, that means:

- You can get standard GPU server cases, server cases, rackmount cases, wallmount cases, NAS devices, ITX cases and chassis guide rail kits.

- You can also ask for a custom front panel, extra bays, different PSU placement, odd depth, unique airflow, even special paint if you really want.

For AI training projects, IStoneCase can:

- Provide deep 4U computer case server options tuned for multi-GPU, big PSUs and front-to-back air.

- Help you keep proper GPU spacing and riser routing to avoid thermal throttling and signal issues.

For inference rollouts, especially at scale:

- Slim ATX and short-depth atx server case designs fit cramped racks and edge rooms.

- Wallmount and NAS style chassis solve “no rack but still need server” headaches.

- OEM/ODM options make it easy to ship the same chassis SKU to many branches and partners.

Whether you are a data center operator, database service provider, research institute, or a small IT services shop, the chassis is not only metal.

It is a real part of your uptime, your on-site workload, and your brand image when customers walk into the equipment room.

Pick training and inference cases with that in mind, and the whole stack from GPU to rail kit will just work better together.