IStoneCase — The World’s Leading GPU/Server Case and Storage Chassis OEM/ODM Solution Manufacturer.

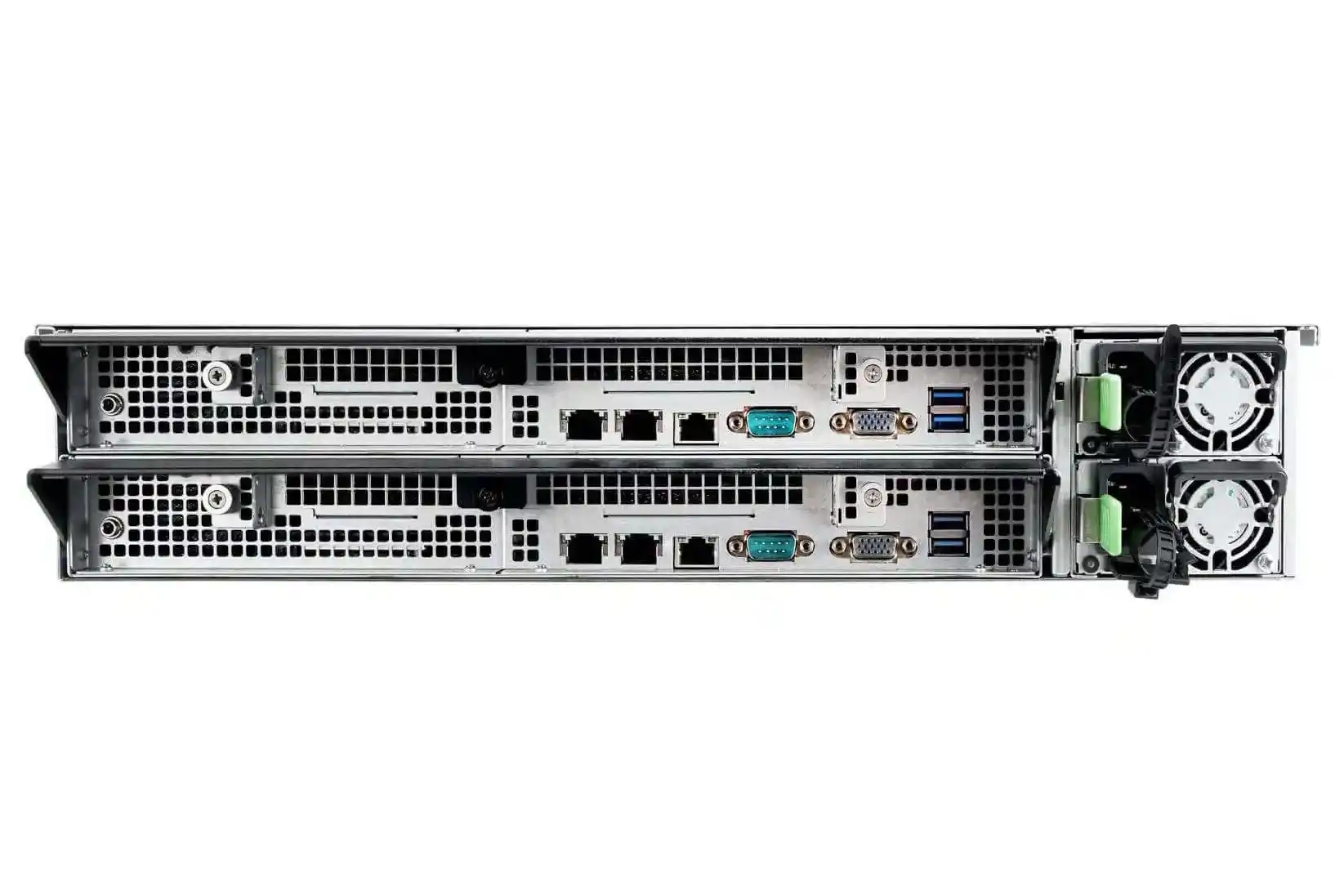

Dual-Node Server vs Single-Node Server: Cost Comparison Model

Let’s keep it real. You’re choosing between a dual-node box and a single-node box. Both can win. The “right” pick depends on total cost of ownership (TCO), uptime risk, licensing, and growth. Dual-node usually costs more up front and in power/cooling. Single-node stays lean, simple, and fast to deploy. But if your SLA bites on downtime, dual-node pays back through high availability (HA) and lower MTTR. Different roads, different tolls.

We’ll walk through the model, show a quick decision table, and ground it in real server-room pain points: PUE, per-socket licensing, NUMA hops, PCIe lane pressure, and RMA realities. When chassis matters, IStoneCase brings OEM/ODM tweaks (airflow, rails, sleds) that cut hidden spend over time.

Keywords you might care about (and can actually buy): server rack pc case, server pc case, computer case server, atx server case, plus GPU-ready racks, NAS boxes, rails, and more at IStoneCase.

TCO for Server PC Case and Chassis Choices

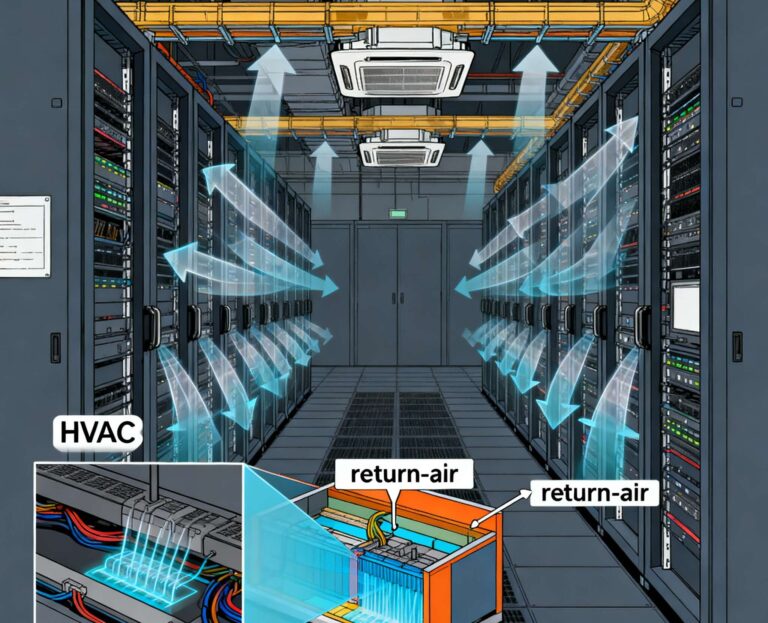

TCO isn’t just the chassis price. It’s also electricity, cooling, rack units (U), cabling, licensing, service windows, and the cost of “oops, we’re down.” Dual-node skews higher in ongoing power draw and needs more thermal headroom; single-node skews lower in day-one spend and keeps ops simpler. Chassis design shifts both curves:

- Airflow & pressure: front-to-back airflow, baffle kits, and dust control reduce fan ramp and keep components in the comfort zone.

- Cable & rail hygiene: tool-less Chassis Guide Rail and tidy harnessing shrink service time; your MTTR goes down.

- Room to grow: extra PCIe slots and clean NVMe backplanes delay a forklift upgrade.

- Right form factor: a solid server pc case or server rack pc case avoids “SKU lock-in” when you add GPUs or NICs later.

IStoneCase ships GPU-centric racks, NAS, and custom sleds, so you don’t fight the chassis while you fight your roadmap.
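To make the TCO idea concrete, here is a minimal sketch of how the pieces could add up. Every number below is a made-up placeholder, and names like `ServerOption` and `tco` are hypothetical, not part of any IStoneCase tool. It assumes facility energy is roughly IT energy times PUE, and it treats downtime as a flat hourly cost.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760


@dataclass
class ServerOption:
    name: str
    hardware_cost: float            # one-time spend, chassis included
    it_power_watts: float           # average draw of the IT load
    license_cost_per_year: float
    ops_cost_per_year: float        # service windows, spares, labor
    downtime_hours_per_year: float  # expected, not guaranteed


def annual_energy_cost(opt, pue, price_per_kwh):
    # Facility energy = IT energy * PUE, so cooling overhead scales with draw.
    kwh = opt.it_power_watts / 1000 * HOURS_PER_YEAR * pue
    return kwh * price_per_kwh


def tco(opt, years, pue, price_per_kwh, downtime_cost_per_hour):
    recurring = (
        annual_energy_cost(opt, pue, price_per_kwh)
        + opt.license_cost_per_year
        + opt.ops_cost_per_year
        + opt.downtime_hours_per_year * downtime_cost_per_hour
    )
    return opt.hardware_cost + years * recurring


# Placeholder inputs only; swap in your own quotes and meter readings.
single = ServerOption("single-node", 4000, 400, 1000, 500, 8)
dual = ServerOption("dual-node", 7000, 700, 2000, 800, 1)

for cost_per_hour in (100, 2000):
    for opt in (single, dual):
        total = tco(opt, years=5, pue=1.5, price_per_kwh=0.10,
                    downtime_cost_per_hour=cost_per_hour)
        print(f"downtime ${cost_per_hour}/h  {opt.name}: {total:,.0f}")
```

With these placeholder inputs, single-node comes out cheaper when an hour of downtime is cheap, and dual-node comes out cheaper when it is expensive. The crossover point depends entirely on your own numbers.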

High Availability (HA) in Server Rack PC Case Deployments

If downtime hurts revenue or compliance, dual-node makes sense. You get node-to-node failover, hot-swap parts, and rolling updates without a full stop. Ops can patch firmware on one node while the other carries traffic. That’s the boring, reliable kind of “win” finance teams like. Not gonna lie, single-node can be fine for labs and non-critical portals. But when an SLA has teeth, HA reduces that “2 a.m. pager” tax.

Ops slang you’ll hear: N+1 power, dual path networking, cold aisle containment, DCIM alarms, change windows, and RPO/RTO. If your team lives in those words, dual-node isn’t luxury—it’s insurance.
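One rough way to decide whether HA is "insurance" or overkill is to ask what an hour of downtime would have to cost for dual-node to pay for itself. The sketch below assumes you can estimate an availability figure for each setup and the extra yearly cost of going dual-node. The function names and the example figures are illustrative assumptions, not measured data.

```python
HOURS_PER_YEAR = 8760


def downtime_hours(availability):
    """Expected downtime per year for an availability like 0.999."""
    return (1.0 - availability) * HOURS_PER_YEAR


def break_even_cost_per_hour(extra_annual_cost, single_availability,
                             dual_availability):
    """Hourly downtime cost at which the dual-node premium pays for itself."""
    hours_avoided = (downtime_hours(single_availability)
                     - downtime_hours(dual_availability))
    if hours_avoided <= 0:
        raise ValueError("dual-node must avoid some downtime to break even")
    return extra_annual_cost / hours_avoided


# Hypothetical inputs: 99.9% vs 99.99% availability, $3,000/year premium.
threshold = break_even_cost_per_hour(3000, 0.999, 0.9999)
print(f"Dual-node pays back if downtime costs more than ~${threshold:,.0f}/hour")
```

If your real cost per hour of outage sits well above that threshold, the HA premium is easy to defend. If it sits well below, single-node plus good backups may be the honest answer.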

Software Licensing Per-Socket vs Per-Core

Licensing can flip the math. Some platforms license per socket or per node. Two sockets or two nodes can mean two licenses. Others license per core, per VM, or per host pool. Please map this before you buy. It’s painful to discover later. We’ve seen teams pick the “cheaper” motherboard and eat higher license costs for years.

Tip: For per-socket models, a high-end single CPU in a well-designed atx server case can beat a dual-socket setup on both cost and simplicity.
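Here is a small sketch of how the licensing model can flip the math for the same total core count. The prices are invented placeholders and the two functions are hypothetical helpers. Real license terms often add minimums, tiers, or bundles, so check your vendor's actual rules.

```python
def per_socket_cost(nodes, sockets_per_node, price_per_socket):
    """Yearly cost when every populated socket needs a license."""
    return nodes * sockets_per_node * price_per_socket


def per_core_cost(nodes, sockets_per_node, cores_per_socket, price_per_core):
    """Yearly cost when every physical core needs a license."""
    return nodes * sockets_per_node * cores_per_socket * price_per_core


# Same 64 cores, two layouts: one 64-core CPU vs two 32-core CPUs.
# Placeholder prices: $3,000 per socket, $100 per core.
one_big_cpu = per_socket_cost(nodes=1, sockets_per_node=1, price_per_socket=3000)
two_cpus = per_socket_cost(nodes=1, sockets_per_node=2, price_per_socket=3000)
print("per-socket:", one_big_cpu, "vs", two_cpus)

one_big_cpu_pc = per_core_cost(1, 1, 64, price_per_core=100)
two_cpus_pc = per_core_cost(1, 2, 32, price_per_core=100)
print("per-core:  ", one_big_cpu_pc, "vs", two_cpus_pc)
```

Under per-socket pricing the single big CPU costs half as much to license; under per-core pricing the two layouts tie, so the decision falls back to hardware, power, and lanes.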

Performance, NUMA, and PCIe Lanes

Performance isn’t just GHz. It’s memory locality and PCIe lanes:

- Single-node, single-socket: fewer NUMA edges, tighter latency, easier tuning. Good for OLTP, web tiers, caching, and microservices.

- Dual-node or dual-socket: more memory channels and PCIe lanes for GPUs, NVMe, and high-speed NICs. Great for AI training, VDI, heavy virtualization, column stores.

If you need multiple GPUs, don’t choke your lanes. Use a GPU-ready computer case server with proper risers, airflow, and power budget so the cards don’t throttle.
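A quick lane budget can show whether a build will choke before you order it. The sketch below is a back-of-the-envelope tally that assumes x16 links for GPUs and x4 for NVMe drives. The NIC width and the "available lanes" figures are placeholders, so read the real numbers off your CPU and motherboard spec sheets. It ignores PCIe switches and bifurcation.

```python
def lanes_needed(devices):
    """devices: list of (name, count, lanes_per_device) tuples."""
    return sum(count * lanes for _name, count, lanes in devices)


def lane_headroom(available_lanes, devices):
    """Positive means spare lanes; negative means the build is oversubscribed."""
    return available_lanes - lanes_needed(devices)


# Hypothetical wish list for a GPU box.
wish_list = [
    ("GPU", 4, 16),
    ("NVMe SSD", 4, 4),
    ("High-speed NIC", 1, 16),
]

# Placeholder platform lane counts; check your actual platform.
for label, available in (("single-socket", 64), ("dual-socket", 128)):
    spare = lane_headroom(available, wish_list)
    status = "fits" if spare >= 0 else "oversubscribed"
    print(f"{label}: need {lanes_needed(wish_list)}, have {available}, {status} ({spare:+d})")
```

A negative result doesn't always kill the build, since a switch or running cards at x8 can help, but it is a clear signal to look at a platform or chassis with more slots and lanes.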

Use Cases: Data Center, AI/HPC, SMB, Edge

- Data Center Core: Multi-tenant virtualization, big databases, and “never-down” portals lean dual-node. HA keeps change windows short and boring.

- AI / HPC: If you scale out with accelerators, you’ll love more lanes and cooling headroom. Dual-node or dual-socket rigs shine here, especially with GPU-centric chassis.

- SMB & SaaS startups: Ship fast with single-node. Lower opex, simpler patching. When your traffic climbs, add another single-node or jump to dual-node in prod and keep single-node in staging.

- Edge & retail: Space and power are tight. A quiet, efficient server rack pc case or compact ITX Case wins. HA may live at the regional layer instead of the store.

- Storage-heavy: Pair compute with NAS Devices or hybrid JBOD trays. Separate failure domains. Your RMA life gets easier.

Decision Table: Dual-Node vs Single-Node

| Dimension | Single-Node | Dual-Node | Why it matters |
|---|---|---|---|
| Initial hardware | Lower | Higher | Dual adds boards, power stages, and cooling complexity. |
| Power & cooling | Lower baseline | Higher baseline | More components need more airflow; PUE amplifies the gap. |
| Licensing impact | Lower if per-socket | Higher if per-socket | Per-socket/node licensing can multiply recurring cost. |
| Uptime & HA | Maintenance windows required | Rolling updates, failover | HA slashes planned downtime and reduces MTTR. |
| Performance tuning | Simple, fewer NUMA edges | More lanes, more memory | Choose latency simplicity vs expansion ceiling. |
| Expansion runway | Limited slots | More GPUs/NVMe/NICs | PCIe pressure arrives later on dual setups. |
| Ops complexity | Straightforward | Higher | More parts, more playbooks, more monitoring. |
| Best fit | Labs, dev/test, light prod | Critical prod, AI/VDI, big DB | Match the choice to SLA and growth curve. |

(No explicit numbers—your costs vary by workload, energy, and licensing model.)

Procurement Checklist: Rackmount Case, ATX Server Case, NAS, Rails

Don’t overcomplicate it. Use a quick checklist and you’ll avoid “why did we buy this” meetings.

- Chassis fit: Confirm rack depth, U height, and weight with rails. Tool-less rails save hands and time.

- Airflow path: Front-to-back, baffled GPU zones, clean cable lanes. It ain’t pretty if hot air loops back.

- Power budget: Dual PSUs, N+1, clean cable paths, no spaghetti.

- PCIe & bays: Leave headroom for NICs, storage, and accelerators. Tomorrow shows up early.

- Serviceability: Hot-swap trays, easy-reach fans, labeled harnesses. Your RMA clock will thank you.

- Licensing map: Per-socket vs per-core vs host. Decide before you pick sockets and nodes.

- Form factor: For compact installs, an ATX server case or tidy server pc case keeps costs stable.
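The power budget item is easy to sanity-check with a few lines. This sketch assumes an N+1 setup should carry peak load with one PSU failed, and it applies a planning derate so the surviving PSUs don't run flat out. The 0.8 derate, the function name, and the wattages are illustrative assumptions, not a vendor spec.

```python
def survives_psu_failure(psu_count, psu_watts, peak_load_watts, derate=0.8):
    """True if the remaining PSUs can carry peak load after losing one."""
    remaining = psu_count - 1
    if remaining < 1:
        return False  # a single PSU has no redundancy at all
    return remaining * psu_watts * derate >= peak_load_watts


# Hypothetical chassis with two 1600 W PSUs.
print(survives_psu_failure(2, 1600, peak_load_watts=1200))  # within budget
print(survives_psu_failure(2, 1600, peak_load_watts=1500))  # over budget
```

Run it against the peak draw of the fully loaded build, GPUs included, rather than the idle or average figure.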

IStoneCase can tune airflow, add custom brackets, and ship OEM faceplates so your fleet looks clean and works cleaner. Need rails, shelves, or GPU-ready bays? We’ve got it. Bulk orders, ODM tweaks, batch procurement—done.

Chassis and Brand Value: Why IStoneCase

You don’t just buy metal. You buy an ecosystem: GPU-ready frames, rackmount, wallmount, NAS, ITX, and rails that match. We build to last. We build to scale. And we listen. If your algorithm center needs a weird riser or your data center needs a custom front panel, we’ll design it. If your ops team doesn’t love fan noise, we fix airflow. Simple as that.

- Product lines worth a look: GPU server case, Rackmount Case, NAS Devices, ITX Case.

- Custom OEM/ODM: branding, thermals, rails, cable kits, and integration for “roll-in-the-rack and go.”

Bottom Line: Pick the Cost Curve You Can Live With

If downtime costs you sleep or revenue, go dual-node and stop worrying. If you need speed, simplicity, and steady cash flow, start single-node and scale out. Both paths work. Don’t chase buzzwords—chase fit.

When you’re ready, bring your workload profile and a short wish list. We’ll help you map TCO, license shape, and chassis picks—then build the box you actually need, not the one a spec sheet wanted. That’s how you keep spend honest and teams happy.