You want a straight answer: yes, GPUs in a dual-node chassis are not only doable, they’re practical. Two hot-swap nodes in one enclosure give you density, shared power and fans, and simpler ops. The trick is picking the right chassis, cooling path, and I/O layout—then locking SKUs so nothing “mysteriously” changes at build time. Below I’ll walk through the real constraints and the payoffs, in plain words, with tables and concrete takeaways. I’ll also show where IStoneCase fits if you need OEM/ODM or bulk.

Dual-node chassis GPU feasibility (2U/4U with multi-GPU)

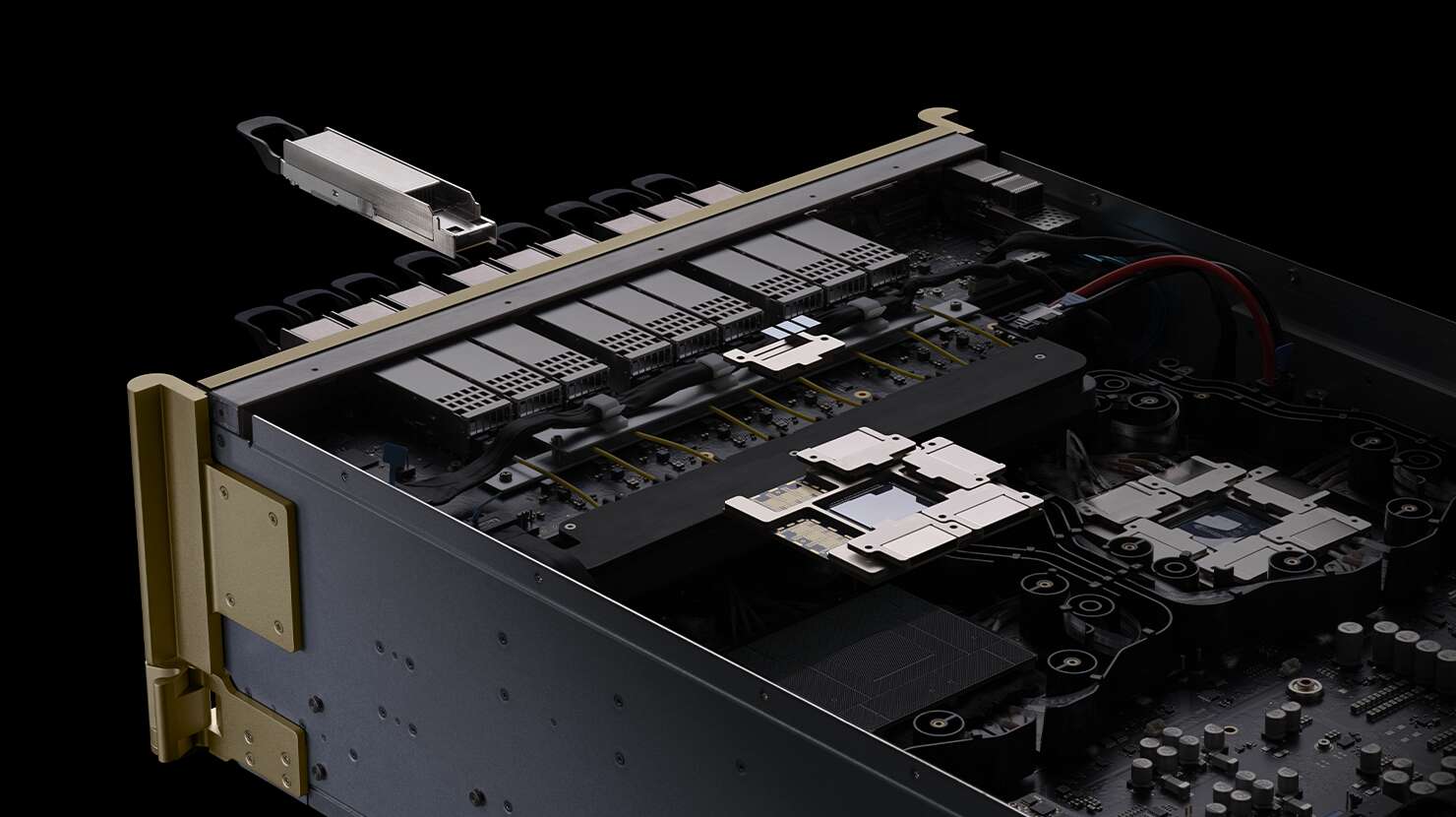

A dual-node chassis is one box with two independent compute sleds. Each node gets its own CPU, memory, storage, and PCIe lanes. The chassis shares the power supplies and the fan wall. With the right airflow and lane mapping, each node can drive multiple GPUs: often up to three double-wide cards, or more if they are single-wide, depending on slot geometry and thermals.

If you’re hunting for a server rack pc case, server pc case, or computer case server that can host dual nodes plus accelerators, start by matching GPU TDP to fan and PSU headroom. Don’t guess; read the fan curve and the PSU spec, then leave margin.

PCIe 4.0 x16 lanes and OCP 3.0 NICs (bandwidth and topology)

GPUs love lanes. Aim for PCIe 4.0 x16 per accelerator (or PCIe 5.0 where available). Use an OCP 3.0 NIC (AIOM) for 100G+ uplinks without burning extra slots. Watch for PCIe bifurcation rules from the board vendor. If you need GPUDirect-style patterns across nodes (e.g., training sharded models or heavy all-to-all inference), plan the fabric so in-chassis GPU-to-GPU and cross-node traffic both have room. Nothing hurts more than a shiny GPU farm bottlenecked by a single NIC.
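To put rough numbers on that NIC bottleneck, here is a minimal sketch that compares theoretical PCIe slot bandwidth with NIC uplink bandwidth for one node. The function names and the example GPU and NIC counts are illustrative assumptions, not figures from any specific platform, and real throughput will be lower than these raw line rates.

```python
# Rough, theoretical comparison of PCIe slot bandwidth vs. NIC uplink bandwidth.
# All function names here are hypothetical helpers for illustration only.

# Raw transfer rate per lane in GT/s for each PCIe generation.
PCIE_GT_PER_LANE = {"4.0": 16.0, "5.0": 32.0}

# PCIe 3.0 through 5.0 use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def pcie_gbytes_per_s(gen: str, lanes: int = 16) -> float:
    """Theoretical one-direction bandwidth of a PCIe link, in GB/s."""
    return PCIE_GT_PER_LANE[gen] * lanes * ENCODING_EFFICIENCY / 8


def nic_gbytes_per_s(gbits_per_port: float, ports: int = 1) -> float:
    """Raw NIC line rate in GB/s (before any protocol overhead)."""
    return gbits_per_port * ports / 8


def oversubscription(gpus: int, gen: str, gbits_per_port: float, ports: int = 1) -> float:
    """Aggregate GPU slot bandwidth divided by NIC uplink bandwidth for one node."""
    return gpus * pcie_gbytes_per_s(gen) / nic_gbytes_per_s(gbits_per_port, ports)


# Assumed example: 3 GPUs on PCIe 4.0 x16, one vs. two 100G ports.
single_nic = oversubscription(gpus=3, gen="4.0", gbits_per_port=100, ports=1)
dual_nic = oversubscription(gpus=3, gen="4.0", gbits_per_port=100, ports=2)
print(f"1x100G: {single_nic:.1f}:1, 2x100G: {dual_nic:.1f}:1")
```

Under these assumptions a PCIe 4.0 x16 slot tops out around 31.5 GB/s per direction, so three GPUs behind a single 100G port are oversubscribed roughly 7.6:1, and adding a second port halves that. Whether that ratio matters depends on how much of the workload actually leaves the node.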

Power & cooling envelope in 2U/4U dual-node servers

This is where builds succeed—or overheat. Confirm:

- PSU headroom with redundancy on: the load has to fit on the supplies that remain after one fails, so avoid running near rated capacity.

- Airflow front-to-back, aligned to your hot-/cold-aisle layout. Fit blanking panels in empty slots; don’t leave pressure leaks.

- Fan wall RPM vs. acoustic/MTBF targets. High static-pressure fans are your friend.

- If GPU TDP is high, consider liquid-ready cold plates or a taller RU. Sometimes 4U gives you bigger heat sinks and cleaner cable dressing than 2U.
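The PSU headroom check in the list above can be sketched as a small calculation. Every wattage below is a made-up placeholder, and `ChassisPowerPlan` is a hypothetical helper, not a vendor tool; substitute the numbers from your own PSU and GPU datasheets.

```python
from dataclasses import dataclass


@dataclass
class ChassisPowerPlan:
    """Hypothetical power budget for one dual-node chassis (illustrative only)."""
    psu_watts: float          # rated output of one PSU
    psu_count: int            # PSUs installed in the chassis
    redundant_psus: int       # PSUs allowed to fail (1 for N+1 or 1+1)
    node_base_watts: float    # CPU, memory, storage, NIC per node, excluding GPUs
    gpu_tdp_watts: float      # TDP of one GPU
    gpus_per_node: int
    shared_watts: float = 0.0  # fan wall and other shared chassis load
    nodes: int = 2

    def load_watts(self) -> float:
        """Worst-case draw for the whole chassis."""
        per_node = self.node_base_watts + self.gpu_tdp_watts * self.gpus_per_node
        return per_node * self.nodes + self.shared_watts

    def usable_watts(self) -> float:
        """Capacity left once the redundant PSUs are assumed failed."""
        surviving = self.psu_count - self.redundant_psus
        if surviving <= 0:
            raise ValueError("at least one PSU must remain after redundancy")
        return self.psu_watts * surviving

    def headroom(self) -> float:
        """Fraction of usable capacity left unused (negative means over budget)."""
        return 1 - self.load_watts() / self.usable_watts()

    def meets_margin(self, margin: float = 0.20) -> bool:
        return self.headroom() >= margin


# Assumed example: 1+1 redundant 2200 W PSUs, 3 x 150 W GPUs per node.
plan = ChassisPowerPlan(
    psu_watts=2200, psu_count=2, redundant_psus=1,
    node_base_watts=400, gpu_tdp_watts=150, gpus_per_node=3,
    shared_watts=150,
)
print(f"load={plan.load_watts()} W, headroom={plan.headroom():.1%}, ok={plan.meets_margin()}")
```

With these placeholder values the chassis draws 1850 W against 2200 W of redundant capacity, about 16% headroom, which misses an assumed 20% margin. That is the kind of result that pushes a build toward lower-TDP cards, larger PSUs, or a taller chassis.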

If your deployment needs roomier thermals or more slots, see IStoneCase’s families:

- GPU Server Case · GPU Server Case (Catalog)

- 4U GPU Server Case · 5U GPU Server Case · 6U GPU Server Case

These cover ATX/E-ATX layouts too, handy when you need an atx server case option with more breathing room.

Real workloads: VDI, rendering, AI inference, media transcode

You don’t buy dual-node GPU boxes for “nice to have.” You buy them to ship work:

- AI inference (batch & online): Multi-GPU per node lets you pin models by SKU and scale horizontally. Great for LLM serving, vector search, and computer vision.

- Rendering & M&E: Daytime remote workstations; nighttime render farm. The two nodes let you separate interactive sessions from queue jobs.

- VDI: Pack more seats per RU, with single-wide GPUs that sip power but push frames.

- Transcode/streaming: NVENC/NVDEC density shines when you toss many single-slot cards into one chassis.

- Edge/branch: Ruggedized racks love dual-node because spares and power feeds are tight. One box, two independent nodes = fewer truck rolls.

Claim–evidence–impact (table)

| Claim (what’s true) | Evidence / Specs (typical) | Impact (so what) | Source type |
|---|---|---|---|
| Dual-node 2U/4U can host multiple GPUs per node | Per-node PCIe 4.0 x16 slots; up to 3× double-wide or 4–6× single-wide depending on layout | High density in small RU; simpler power & fan sharing | Vendor datasheets & platform quickspecs |
| Shared PSUs and fan wall cut overhead | Redundant 2.x kW PSUs common; high static-pressure fan wall | Better efficiency and fewer FRUs to stock | Vendor datasheets; lab burn-in notes |
| OCP 3.0 NICs free PCIe slots | NIC as AIOM/OCP 3.0; 100/200G options | More GPUs fit, clean cabling, higher east-west BW | Board manuals; build logs |
| Thermals gate GPU count | Fan wall CFM/SP → stable GPU temps under load | Prevents downclocking; longer component life | Thermal logs from validation |
| SKU lock avoids surprises | Same board rev, riser, shroud, and cable kits | Repeatable builds; predictable lead times | Procurement SOP & BOM control |
| Dual-purpose cycles boost ROI | Workstations by day, batch jobs by night | Higher utilization without extra racks | Customer PoC diaries |
| 4U/5U/6U can de-risk heat | Taller chassis = bigger heatsinks + easier cable runs | Lower fan RPM, less noise, fewer thermal incidents | Field deployments; NOC reports |

Note: values above reflect common industry configs; exact limits depend on your chosen board, risers, and coolers.
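One way to make the SKU-lock row enforceable is a drift check that compares what each node reports against a golden BOM. The sketch below assumes you already collect inventory into a plain dictionary; the field names and SKU strings are invented for illustration and do not correspond to any real part numbers.

```python
# Hypothetical golden BOM for one node; every value is a placeholder.
GOLDEN_BOM = {
    "board_rev": "1.02",
    "riser_kit": "RSR-A",
    "air_shroud": "SHR-2U-GPU",
    "psu_sku": "PSU-2200-T",
    "bios": "2.1.0",
    "bmc": "1.4.7",
}


def bom_drift(golden: dict, observed: dict) -> dict:
    """Return {field: (expected, found)} for each field that differs or is missing."""
    return {
        field: (expected, observed.get(field))
        for field, expected in golden.items()
        if observed.get(field) != expected
    }


# Assumed example: a node that arrived with a newer BMC build.
node_b = dict(GOLDEN_BOM, bmc="1.4.9")
print(bom_drift(GOLDEN_BOM, node_b))
```

An empty result means the node matches the locked build. Anything else is a list of fields to chase with procurement before the node goes into the rack.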

Node-level bill of materials (BOM) you should actually check

- CPU sockets & lane map: Confirm total PCIe lanes after NVMe and NICs.

- Risers & slot spacing: Double-wide GPUs need clear 2-slot spacing; watch for hidden M.2 heat shadows.

- OCP 3.0 slot: Reserve for your 100G or higher fabric.

- Fan wall + shroud: The right air shroud can drop GPU temps by double-digit °C.

- PSU SKU: Same wattage, same efficiency bin; avoid mixing revisions.

- Firmware bundle: Lock BIOS/BMC/PCIe retimer versions. Don’t mix and match; it bites.

This is boring paperwork, but it keeps fleets healthy.
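The lane-map check from the first item in that list is simple arithmetic, sketched here. The lane counts and device mix are assumptions chosen for illustration; take the real numbers from your CPU and board manual, and remember that bifurcation rules may constrain which slots can actually run at which width.

```python
def remaining_lanes(cpu_lanes: int, consumers: dict) -> int:
    """Lanes left after all devices are attached.

    consumers maps a label to (lanes_per_device, device_count).
    A negative result means the plan asks for more lanes than the node has.
    """
    used = sum(width * count for width, count in consumers.values())
    return cpu_lanes - used


# Assumed per-node device plan (hypothetical values).
plan = {
    "gpu": (16, 3),      # three accelerators at x16
    "nvme": (4, 4),      # four x4 NVMe drives
    "ocp_nic": (16, 1),  # one x16 OCP 3.0 NIC
}

print(remaining_lanes(128, plan))  # a node assumed to expose 128 usable lanes
print(remaining_lanes(64, plan))   # a node assumed to expose only 64
```

In this example the plan needs 80 lanes, so it fits comfortably on the 128-lane node and is 16 lanes short on the 64-lane one. In the second case something has to drop to x8 or move behind a switch.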

Practical deployment patterns (with jargon but useful)

- Cold aisle / hot aisle discipline: Fillers installed, brush strips on cable cutouts, no “Swiss-cheese” fronts.

- RU budget vs. heat: If 2U is tight at your watt-per-GPU, step to 4U and stop fighting physics.

- Fabric layout: 2×100G per node (or higher) to split north-south and east-west traffic; think service mesh + storage streams.

- MTBF and FRU stock: Keep a spare sled, PSUs, and at least one full riser kit per pod.

- Observability: Export BMC and GPU telemetry; catch creeping fan failures before throttling. It’s not rocket science, but it saves nights.
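As a rough illustration of the observability item, the sketch below flags a fan whose recent RPM has sagged against its own baseline. It assumes you already export RPM samples from the BMC into a list, taken at the same commanded duty cycle. The window sizes and the 10% threshold are arbitrary starting points, not recommended values.

```python
from statistics import mean


def fan_degraded(rpm_samples, baseline_n=5, recent_n=5, max_drop=0.10):
    """Return True if the recent average RPM is more than max_drop below baseline.

    rpm_samples: chronological RPM readings for one fan at a fixed duty cycle.
    The first baseline_n readings form the baseline; the last recent_n are compared to it.
    """
    if len(rpm_samples) < baseline_n + recent_n:
        return False  # not enough history to judge
    baseline = mean(rpm_samples[:baseline_n])
    recent = mean(rpm_samples[-recent_n:])
    return recent < baseline * (1 - max_drop)


# Made-up sample data for two fans.
healthy = [12000] * 10
creeping = [12000, 11950, 12010, 11980, 12020, 11200, 10900, 10600, 10400, 10100]

print(fan_degraded(healthy), fan_degraded(creeping))
```

A check like this is deliberately crude. Its job is to raise a ticket while the fan still spins, so the swap happens in a maintenance window instead of after the GPUs start throttling.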

IStoneCase options if you need OEM/ODM or bulk

If your team needs a server pc case or atx server case tuned for dual-node GPU builds, IStoneCase (IStoneCase — The World’s Leading GPU/Server Case and Storage Chassis OEM/ODM Solution Manufacturer) ships cases and customizations for data centers, algorithm hubs, enterprises, MSPs, research labs, and devs. Start here:

- Catalog overview: GPU Server Case

- Taller, cooler: 4U GPU Server Case · 5U GPU Server Case · 6U GPU Server Case

- Workstation-friendly GPU cases: ISC GPU Server Case WS04A2 · ISC GPU Server Case WS06A

- End-to-end tailoring: Customization Server Chassis Service

We do OEM/ODM, bulk orders, and spec tweaks (rails, guide kits, cable routing, sled handles). If you’ve got an oddball board or quirky riser, we’ll adjust the sheet metal and airflow guides. That’s kinda our day job.

Quick workload-to-hardware mapping (table)

| Workload / Scenario | Node GPU form factor | NIC plan | Chassis pick |
|---|---|---|---|
| AI inference at scale | 3× single-wide (or 2× double-wide) per node | Dual 100G; split service vs. storage | 2U dual-node if TDP moderate; jump to 4U GPU Server Case if hot |
| Remote workstation by day, render by night | 2–3× double-wide per node | 100–200G; QoS on render queue | 5U GPU Server Case for quieter fans |
| VDI farm | 4–6× single-wide per node | 100G per node; L2/L3 close to users | 6U GPU Server Case if you need cooler temps |
| Edge / branch racks | 1–2× single-wide per node | 25–100G; compact optics | ISC GPU Server Case WS04A2 |
| Media transcode | 4× single-wide per node | 100G; multicast/ABR aware | Catalog GPU Server Case or customized |