Listen up. We get it. You’re a research startup or a busy AI development team, and those massive, unexpected monthly cloud bills? They hurt. Every single month. You need serious computing power—we know your models don’t train themselves—but you also can’t just toss money at massive corporations. You need a smarter, more sustainable strategy.

This article isn’t about saving a few bucks on a CPU fan. It’s about building a robust, high-performance system for the long haul, built around a server PC case that gives you maximum control and, critically, maximum savings. Let’s talk about the hardware reality, and how we can help you build your own powerhouse.

Why Your Team Shouldn’t Pay the Cloud Tax Anymore

We see many smart developers and researchers making the move: owning their compute infrastructure. They complete the training cycle on their own hardware because, quite frankly, renting costs a fortune over time.

Think about it. Cloud providers are fantastic for quick spikes or small development tasks. But for the heavy, hours-long, iterative training a serious AI model needs? You’ll be surprised how much cheaper it is to buy the gear once than rent it forever. That initial investment in a proper server rack pc case and internal components pays for itself, sometimes within a few months. It’s a fundamental shift that transforms your operational budget.
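To make the "pays for itself" idea concrete, here is a minimal break-even sketch. Every number in it is a hypothetical placeholder, not a quote from any provider or from us, and the function name `break_even_months` is ours for illustration. Plug in your own hardware quote, cloud rate, and usage.

```python
def break_even_months(hardware_cost, cloud_rate_per_hour, gpu_hours_per_month,
                      power_cost_per_month=0.0):
    """Months until owning the hardware becomes cheaper than renting.

    Returns None when renting stays cheaper at the given usage level.
    """
    cloud_cost_per_month = cloud_rate_per_hour * gpu_hours_per_month
    monthly_saving = cloud_cost_per_month - power_cost_per_month
    if monthly_saving <= 0:
        return None
    return hardware_cost / monthly_saving


# Hypothetical figures for illustration only; substitute real quotes.
months = break_even_months(
    hardware_cost=2500.0,        # one-off cost of the build (assumed)
    cloud_rate_per_hour=1.50,    # rented GPU instance price (assumed)
    gpu_hours_per_month=400,     # training hours per month (assumed)
    power_cost_per_month=40.0,   # electricity for the owned machine (assumed)
)
print(f"Break-even after about {months:.1f} months")
```

The sketch ignores resale value, maintenance time, and idle periods, so treat the result as a rough first estimate. The lighter your monthly usage, the longer the payback takes.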

| Core Argument | Detailed Analysis and Professional Insight |
| --- | --- |
| DIY Compared to Cloud Services is Way More Cost-Effective. | Building your own deep learning machine often costs one-tenth the price of renting equivalent cloud compute over a year. For long-duration model training, this radically reduces operating expenses. |
| GPU Memory (VRAM) is the Crucial Factor. | Your VRAM limits the size of your models and datasets. For modern AI and large language models (LLMs), 12GB+ is not a luxury, it’s a baseline. |

The True Boss: GPU Memory (VRAM)

Here’s a piece of industry insight: If you’re building a budget AI rig, you must prioritize the GPU. That’s your heavy lifting. The one non-negotiable component that dictates your training capability is the amount of VRAM. You simply won’t train those big, fancy LLMs or handle massive image datasets without serious VRAM.
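How much VRAM is "serious"? A common rule of thumb, sketched below, is to multiply the parameter count by the bytes each parameter needs. The 16 bytes per parameter used for training assumes fp32 weights, fp32 gradients, and the two Adam optimizer moments. It deliberately leaves out activations, so real usage will be higher. The function names and the 80% headroom factor are our own assumptions for illustration.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def inference_vram_gb(num_params, precision="fp16"):
    """Rough VRAM needed just to hold the weights for inference."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def training_vram_gb(num_params):
    """Rough VRAM for fp32 training with Adam, excluding activations.

    4 bytes weights + 4 bytes gradients + 8 bytes optimizer moments.
    """
    return num_params * 16 / 1e9


def fits(required_gb, card_vram_gb, headroom=0.8):
    """True if the estimate fits within a fraction of the card's VRAM."""
    return required_gb <= card_vram_gb * headroom


# Example: a 7-billion-parameter model on a 12 GB card.
need = inference_vram_gb(7e9, "fp16")
print(f"fp16 weights: {need:.1f} GB, fits on 12 GB: {fits(need, 12)}")
print(f"Training a 1B-parameter model: {training_vram_gb(1e9):.1f} GB before activations")
```

Estimates like this are only a starting point. Batch size, sequence length, and memory-saving techniques such as quantization or gradient checkpointing can move the real number a lot in either direction.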

And here’s another truth you need to hear: NVIDIA still runs the show. While other chips are improving, the industry standard for AI is still the CUDA platform. You don’t want your team wasting time fixing broken software dependencies just to save a tiny bit on a non-NVIDIA card. It isn’t worth it. Focus your budget on the card with the most VRAM, and keep it NVIDIA.

If your team needs a specialized machine for heavy-duty parallel processing, look directly at a dedicated GPU Server Case design. That’s where the power lives.

Choosing the Right Home for Your Power: The ATX Server Case

Now, let’s talk about the chassis. It’s not just a fancy metal box; it’s the foundation that ensures all those expensive components actually perform. That’s where the durability and engineering of a quality vendor really matter.

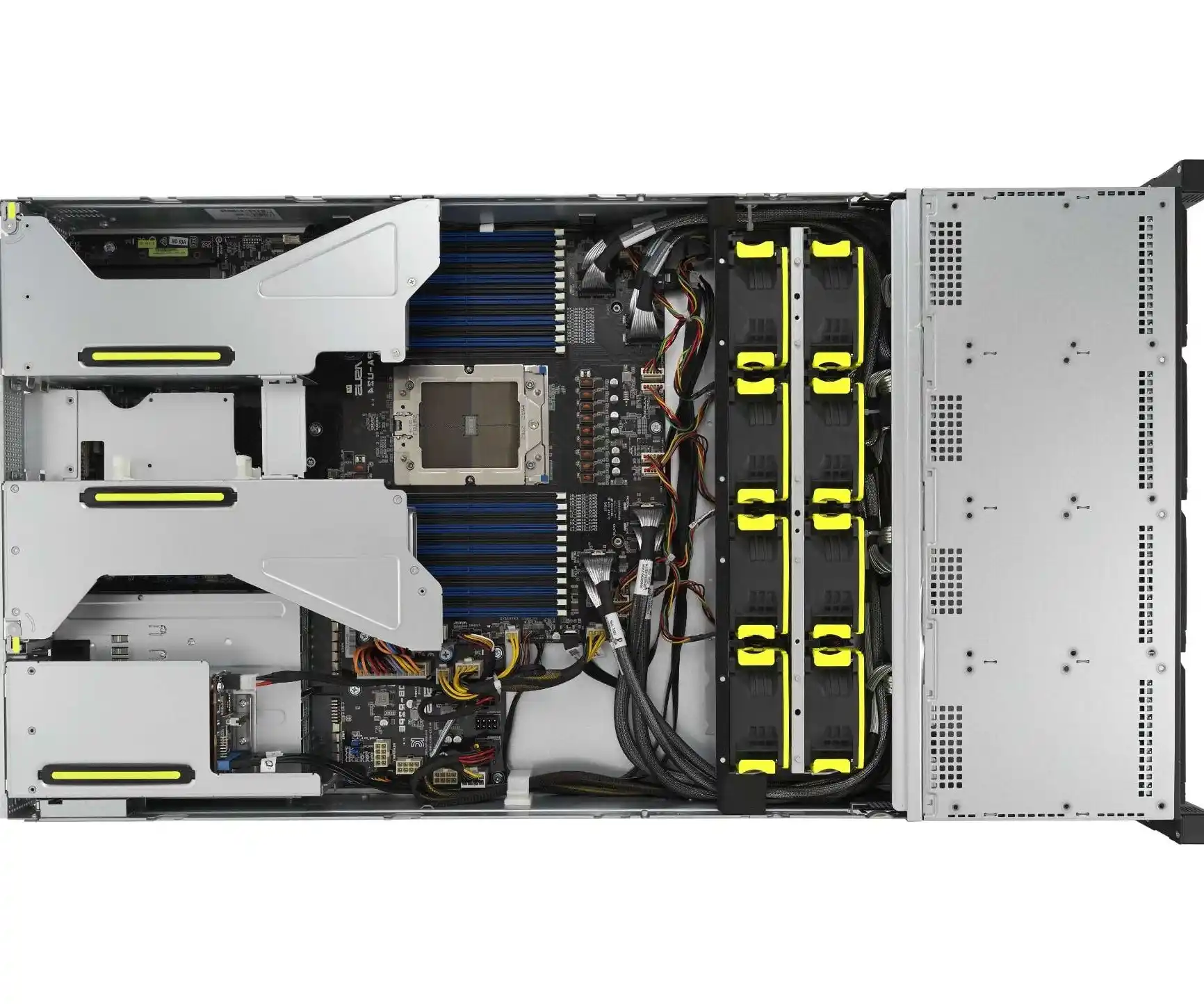

A quality server computer case needs to address two primary concerns for AI work: space and airflow.

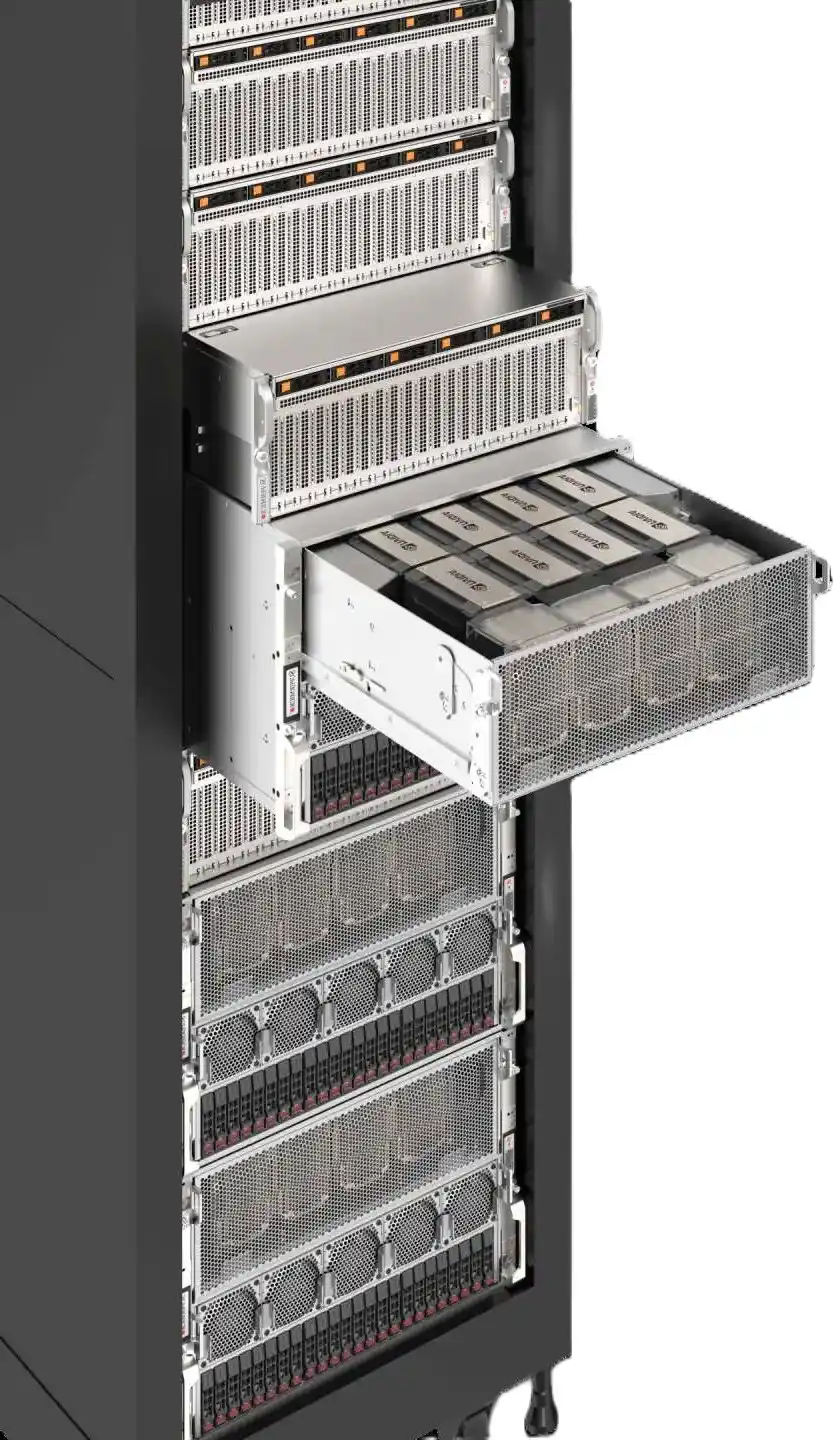

- Space and Scalability: Modern GPUs are massive. They are long, thick, and need serious power. You need a big ATX server case or a multi-slot Rackmount Case to comfortably fit them and the necessary high-wattage power supply. We see a lot of researchers and algorithm centers choosing a server rack PC case design because it’s inherently scalable; you can just add another unit when your team grows.

- Airflow and Stability: This is the invisible efficiency of your operation. A cheap case with poor airflow? That GPU you just bought? It’ll overheat during a long training run, throttle its speed, and essentially waste all that money you saved. You’re losing performance for what? A few dollars on the chassis? A robust case ensures proper ventilation, helping your GPU hold its clock speeds through 24/7 training runs.
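If you want to check whether a case is actually keeping a card cool, one option is to poll `nvidia-smi` during a training run and compare temperature and clock speed. The sketch below assumes the NVIDIA driver is installed and uses the `temperature.gpu`, `clocks.sm`, and `clocks.max.sm` query fields. The 83 °C limit and the 85% clock ratio are illustrative thresholds we picked, not vendor specifications, and the helper names are hypothetical.

```python
import subprocess

QUERY = "temperature.gpu,clocks.sm,clocks.max.sm"


def read_gpu_stats():
    """Run nvidia-smi and return its CSV output (one line per GPU)."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def parse_gpu_stats(csv_text):
    """Turn 'temp, clock, max_clock' lines into a list of dicts."""
    stats = []
    for line in csv_text.strip().splitlines():
        temp_c, clock_mhz, max_clock_mhz = (float(v) for v in line.split(","))
        stats.append({"temp_c": temp_c, "clock_mhz": clock_mhz,
                      "max_clock_mhz": max_clock_mhz})
    return stats


def looks_throttled(stat, temp_limit_c=83.0, min_clock_ratio=0.85):
    """Flag a GPU that is both hot and running well below its maximum clock."""
    hot = stat["temp_c"] >= temp_limit_c
    slow = stat["clock_mhz"] < stat["max_clock_mhz"] * min_clock_ratio
    return hot and slow


# Made-up sample output so the example runs without a GPU.
sample = "84, 1200, 1900\n61, 1850, 1900\n"
for index, stat in enumerate(parse_gpu_stats(sample)):
    print(f"GPU {index}: throttled={looks_throttled(stat)}")
```

On a real machine, pass `read_gpu_stats()` to `parse_gpu_stats` instead of the sample text and log the result every few seconds under load. A card that stays hot and slow across a long run is a hint that the chassis airflow deserves a second look.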

This is precisely why companies choose Istonecase. We don’t just sell metal boxes; we provide the engineered foundation for stable, high-performance AI compute. We specialize in custom solutions. Since we do OEM/ODM, we can tailor a high-quality server computer case to fit your specific GPU, PSU, and motherboard combination, giving you maximum return on your compute investment.

| Component Category | Key Consideration (Why it Matters) | Budget-Friendly Recommendation (Smart Move) |
| --- | --- | --- |
| Chassis | Space, Airflow, Expandability, Noise Control | Mid-Tower or Rackmount Case. Must accommodate long GPUs and dedicated cooling fans. |
| GPU | VRAM Capacity (Absolutely Critical) | NVIDIA RTX 3060 12GB (New, excellent price-to-VRAM ratio) or a used high-VRAM server card. |
| RAM | Handling Large Datasets (Avoid Bottlenecks) | Minimum 32GB. Larger LLM or image processing models require 128GB or more. |
| PSU | Stability and Efficiency (Your System’s Backbone) | Choose an 80 Plus Gold certified unit with a 100W power buffer above the system’s needs. |

Simple Components That Won’t Break the Bank

When you’re juggling your startup’s budget, remember this: don’t overspend on the CPU. It handles the operating system and data loading, but the GPU handles the matrix math. Get an older-generation Intel Core i7 or an AMD Ryzen 5. It does the job just fine. Spend the difference on more VRAM.

And don’t overlook the power supply unit (PSU). It’s the heart of your operation, the thing that keeps the power clean and steady. Choose a reliable, highly efficient unit—the 80 Plus Gold rating is a smart move because it actually saves you money on your electricity bill over the life of the machine. That’s real commercial value.
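Here is a small sketch of both PSU decisions: sizing the unit with the 100W buffer mentioned in the table, and estimating what efficiency does to the electricity bill. The component wattages, the list of PSU sizes, the electricity price, and the two efficiency figures (0.90 for a Gold unit, 0.80 for a less efficient one) are all assumptions for illustration. Check the datasheets for your actual parts.

```python
# Common retail PSU sizes in watts (assumed list for illustration).
STANDARD_PSU_WATTS = [450, 550, 650, 750, 850, 1000, 1200, 1600]


def recommended_psu_watts(component_watts, buffer_watts=100):
    """Smallest standard PSU that covers the total draw plus a safety buffer."""
    needed = sum(component_watts.values()) + buffer_watts
    for size in STANDARD_PSU_WATTS:
        if size >= needed:
            return size
    raise ValueError(f"No standard PSU covers {needed} W")


def yearly_energy_cost(dc_load_watts, efficiency, price_per_kwh, hours_per_day=24):
    """Electricity cost per year, given the PSU's efficiency at that load."""
    wall_watts = dc_load_watts / efficiency   # power drawn from the outlet
    kwh_per_year = wall_watts * hours_per_day * 365 / 1000
    return kwh_per_year * price_per_kwh


# Hypothetical single-GPU build.
build = {"gpu": 170, "cpu": 65, "board_ram_drives_fans": 60}
print(f"Suggested PSU: {recommended_psu_watts(build)} W")

load = sum(build.values())
gold = yearly_energy_cost(load, efficiency=0.90, price_per_kwh=0.15)
basic = yearly_energy_cost(load, efficiency=0.80, price_per_kwh=0.15)
print(f"Yearly saving with the more efficient unit: {basic - gold:.2f}")
```

The saving scales with how many hours the machine runs at load, so a box that trains around the clock benefits far more than one that sits idle most of the week.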

Where the Server PC Case Shines: Actual Usage Scenarios

Think about the diverse places these machines end up working.

For a small research lab where noise matters, a quiet ITX Case is a fantastic option, letting developers run small models on their desk without disruption.

For an IT services firm setting up machines in non-traditional spaces, a Wallmount Case offers flexibility without sacrificing power. It’s a neat solution for edge computing locations.

For a growing data center or database service provider, scalable Server Case solutions are essential for future expansion. You need durable hardware that can be serviced quickly. That’s why we offer specialized solutions, including easy-access Chassis Guide Rail options for quick maintenance. And don’t forget the need for robust data storage—that’s where our NAS Devices come into play.

Whether you’re an internal algorithm center or a large enterprise, we provide the metal shell that guarantees the longevity of your high-value components.

Your Next Move

Stop renting; start owning your AI infrastructure. You’ll gain better performance consistency and financial control.

The goal here isn’t to buy any cheap box. It’s to invest in a durable, well-engineered server case that protects your most expensive part—the GPU—and ensures high-speed performance for years. We manufacture durability.

If you’re ready to buy in bulk or need a unique size, remember that Istonecase is your go-to OEM/ODM solution manufacturer. We build the exact Server Case your algorithm center requires. Don’t compromise your AI future.