If you’re planning an AI data center refresh, you already know the weird truth: the chassis is “just metal”… until it isn’t. One bad airflow path, one messy cable run, one rail that flexes, and your whole rack-and-stack day turns into pain.

Also, the words people type into Google are telling. When someone searches server rack pc case, server pc case, computer case server, or atx server case, they’re usually not buying a pretty box. They’re trying to ship GPUs fast, keep them cool, and fix them at 2 a.m. without pulling the whole rack apart.

Below is a practical, shop-floor view of what’s coming in 2025–2030, and how you can spec chassis that won’t age badly. I’ll also weave in where IStoneCase fits—OEM/ODM, bulk builds, and the unglamorous parts (rails, bays, airflow) that save your team time.

Trend map you can screenshot

| Trend keyword | What’s changing | Chassis impact (real-world) |
|---|---|---|
| Rack-scale | Nodes become swappable “trays” | More blind-mate design, stronger rails, faster service |
| 21-inch Open Rack / ORW | Wider racks for AI density | More room for power + cooling + cable lanes |
| 48V busbar + power shelf | Power goes rack-level | Fewer PSUs per node, cleaner cabling, safer swaps |
| Higher rack power | Density keeps rising | Airflow budget gets tight, liquid-ready layouts grow |
| Direct-to-chip liquid cooling | Liquid becomes normal | QD ports, manifolds, leak sensing space |
| Modular reference platforms | Standard blocks win | Chassis becomes a platform, not a one-off |
| 2U/compact GPUs spread | Not everything is 4U/6U | Tight thermals, careful layout, strong structure |
| PCIe 7.0 era | Signal gets fragile | Shorter paths, retimers, smarter internal routing |
| EDSFF storage | Better airflow + service | Front hot-swap layouts evolve |
| Integrated management | More control silicon | More sensors, wiring, and “keep-out” zones |

1) Rack-scale modularity (OCP DC-MHS)

AI teams don’t want to baby individual servers. They want rack-scale building blocks: slide a tray out, slide a tray in, done. That mindset forces chassis vendors to care about boring details like latch feel, guide pin alignment, and how a tech’s hands fit when the aisle is tight.

Where you’ll feel it:

- Your ops team asks for lower MTTR (mean time to repair), not fancy paint.

- Your deployment checklist starts mentioning “blind-mate” and “tool-less” more often.

If you build with an OEM/ODM partner, this is where custom metalwork actually pays off. You can tune handle placement, tray stops, and service loops for your rack workflow. That’s the kind of stuff IStoneCase lives in when you spec a fleet build, not a one-off sample.

Links: GPU Server Case • Rackmount Case

2) 21-inch Open Rack and Open Rack Wide (ORW)

Wider racks aren’t about fashion. They’re about space for power lanes, cooling paths, and cable management. When you cram modern GPUs into traditional layouts, cables start blocking airflow, and then fans scream like a hair dryer.

Practical chassis changes you’ll see:

- Wider internal “cable corridors”

- Cleaner front-to-back airflow lanes

- More room for liquid tubing bends (no kink city)

Even if you’re still on 19-inch today, it’s smart to choose chassis families that can evolve—same internal skeleton, different mounting strategy. That’s easier with OEM/ODM chassis lines than with random spot buys.

3) 48V busbar and rack-level power shelf

The power story keeps moving upward—from node-level PSUs toward rack-level power shelves and 48V distribution. Why? Less cable clutter, fewer conversion stages, and simpler maintenance. In plain terms: fewer things to wiggle loose.

Chassis-level implications:

- More blind-mate power interfaces

- Less “PSU real estate” inside each node

- Better safety design around touch points

If you’ve ever watched a tech fight a stiff PSU cable in a packed rack, you get why this matters. It’s not glamorous, but it reduces dumb failures.
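To put rough numbers on the "less cable clutter" point, here is a minimal Python sketch of the arithmetic. For the same power, a higher bus voltage means lower current, and resistive loss in the conductors scales with current squared. The node power and path resistance below are made-up illustration values, not figures from any spec or product.

```python
def bus_current_amps(power_w: float, bus_voltage_v: float) -> float:
    """Current drawn from a DC bus for a given load power (I = P / V)."""
    if bus_voltage_v <= 0:
        raise ValueError("bus voltage must be positive")
    return power_w / bus_voltage_v


def conduction_loss_w(power_w: float, bus_voltage_v: float,
                      path_resistance_ohm: float) -> float:
    """Resistive loss in the distribution path (P_loss = I^2 * R)."""
    current = bus_current_amps(power_w, bus_voltage_v)
    return current * current * path_resistance_ohm


if __name__ == "__main__":
    node_power_w = 6000.0        # hypothetical GPU node load
    path_resistance_ohm = 0.001  # hypothetical 1 milliohm cable/busbar path

    for voltage in (12.0, 48.0):
        amps = bus_current_amps(node_power_w, voltage)
        loss = conduction_loss_w(node_power_w, voltage, path_resistance_ohm)
        print(f"{voltage:>4.0f} V: {amps:6.1f} A, {loss:6.2f} W lost in the path")
```

With these assumed inputs, the 48V case carries a quarter of the current of the 12V case, so the same conductor loses one sixteenth of the power. That is the kind of headroom that lets you run fewer, thinner, or shorter conductors per node.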

Related reading on IStoneCase’s site: Data Center Chassis Selection Guide for AI Training Workloads

4) Higher rack power density and airflow budget

Racks keep getting hotter. That pushes chassis makers to treat airflow like money: you can spend it once, then it’s gone.

What changes in the metal box:

- Shorter, straighter air paths

- Bigger fan walls (or better ducting)

- Stricter “keep-out zones” so cables don’t block intakes

This is where a solid server rack pc case spec beats a “close enough” buy. You want repeatable airflow and predictable thermals across a whole batch, not just the first unit that got hand-tuned.
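If you want to sanity-check an airflow budget before talking to a vendor, the basic heat balance is a decent starting point: airflow = heat / (air density × specific heat × temperature rise). The sketch below is a back-of-envelope estimate only. The air properties are textbook approximations for room-temperature air at sea level, and the example heat load is hypothetical. It ignores bypass air, recirculation, and altitude.

```python
AIR_DENSITY_KG_M3 = 1.2     # assumption: roughly room-temperature air at sea level
AIR_CP_J_PER_KG_K = 1005.0  # assumption: specific heat of dry air
M3S_TO_CFM = 2118.88        # 1 cubic metre per second in cubic feet per minute


def required_airflow_m3s(heat_w: float, delta_t_k: float) -> float:
    """Volumetric airflow needed to carry heat_w at an inlet-to-outlet rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_PER_KG_K * delta_t_k)


def required_airflow_cfm(heat_w: float, delta_t_k: float) -> float:
    """Same estimate, converted to CFM."""
    return required_airflow_m3s(heat_w, delta_t_k) * M3S_TO_CFM


if __name__ == "__main__":
    chassis_heat_w = 3000.0  # hypothetical chassis heat load
    for rise_k in (10.0, 15.0, 20.0):
        cfm = required_airflow_cfm(chassis_heat_w, rise_k)
        print(f"dT = {rise_k:4.1f} K -> about {cfm:6.1f} CFM")
```

The useful takeaway is the shape of the relationship: halve the allowed temperature rise and the required airflow doubles. Anything that blocks an intake eats into a budget you have already spent.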

5) Direct-to-chip liquid cooling and leak-aware design

Liquid cooling is moving from “special project” to “normal option,” especially direct-to-chip. And no, you don’t have to liquid-cool everything. But you should design like you might.

Chassis features that suddenly matter:

- Quick-disconnect (QD) port placement that doesn’t smash knuckles

- Space for manifolds and drip-less routing

- Sensor mounting points (leak + temp + flow)

If you’re building a mixed fleet (some air, some liquid), pick a chassis family where both versions share as many parts as possible. That keeps spares simple, which your ops team will love.
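For sizing manifold and tubing space, it helps to know roughly how much coolant a node needs. Here is a minimal sketch of that estimate. It assumes plain water properties (real loops often run glycol mixes, which need somewhat more flow) and uses a hypothetical heat load. Treat it as a first-pass number to bring to your cooling vendor, not a design value.

```python
WATER_DENSITY_KG_M3 = 997.0    # assumption: plain water near room temperature
WATER_CP_J_PER_KG_K = 4181.0   # assumption: specific heat of plain water


def coolant_flow_lpm(heat_w: float, delta_t_k: float,
                     density_kg_m3: float = WATER_DENSITY_KG_M3,
                     cp_j_per_kg_k: float = WATER_CP_J_PER_KG_K) -> float:
    """Coolant flow in litres per minute to absorb heat_w with a rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    flow_m3_per_s = heat_w / (density_kg_m3 * cp_j_per_kg_k * delta_t_k)
    return flow_m3_per_s * 1000.0 * 60.0  # m^3/s -> L/min


if __name__ == "__main__":
    liquid_cooled_heat_w = 5000.0  # hypothetical heat captured by cold plates
    for rise_k in (5.0, 10.0):
        lpm = coolant_flow_lpm(liquid_cooled_heat_w, rise_k)
        print(f"dT = {rise_k:4.1f} K -> about {lpm:5.2f} L/min")
```

Under these assumptions, each kilowatt needs a bit over a litre per minute at a 10 K rise. That is why tubing and QD fittings can stay fairly small, as long as the bends and ports have room.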

6) Modular reference architectures (NVIDIA MGX-style thinking)

Vendors push modular “mix-and-match” platforms so they can ship faster and validate more configs. That doesn’t kill customization. It shifts customization into mechanical fit, thermals, and serviceability.

So instead of reinventing the whole enclosure, you tune:

- GPU spacing

- Fan + duct geometry

- Front I/O and drive layouts

- Rail alignment and pull-out behavior

OEM/ODM shops that already mass-produce GPU chassis tend to handle this more smoothly, because they’ve seen the weird edge cases (like sag under load, or a latch that fails after 2,000 cycles).

7) 2U GPU servers, compact deployments, and tight thermals

Not every buyer runs a mega training cluster. Plenty of teams run enterprise inference, private AI labs, or “GPU-as-a-service” racks where 2U density matters.

Here’s the catch: compact boxes punish sloppy design. One bad airflow turn and temps spike.

If you’re speccing 2U/4U:

- Demand clean front-to-back airflow

- Keep cable bundles out of the intake zone

- Make sure rails don’t flex when a tech yanks the chassis (it happens)

IStoneCase has a wide catalog of rackmount enclosures you can standardize on, then tweak for your board/GPU combo.

Links: Rackmount Case • example product format: 2U Rackmount Case

8) PCIe 7.0 era interconnect and signal integrity in the chassis

As PCIe speeds climb, “just route it” stops working. You start thinking about trace length, retimers, and how close noisy parts sit to sensitive paths.

That pushes chassis design toward:

- Shorter internal paths

- Cleaner segregation of power vs signal zones

- Better grounding and EMI control

It’s the kind of stuff you don’t see in a product photo, but you feel it when stability tests stop failing randomly. Also, your debugging time goes down, which is kinda priceless.

9) EDSFF storage (E1.S / E3.*) and front service

AI isn’t only GPUs. It’s also datasets, checkpoints, logs, and “oops we need more fast scratch.” Storage form factors keep shifting toward EDSFF because it plays nicer with airflow and service.

Chassis design follows:

- Better front hot-swap ergonomics

- Cleaner airflow through drive zones

- More consistent backplane layouts

If you need storage-heavy builds, it’s worth looking at purpose-built NAS and storage chassis instead of hacking it into a GPU frame.

Links: NAS Case • NAS bulk purchase article

10) Integrated management, telemetry, and control silicon

More “brains” land inside the system: controllers, sensors, security modules, fan logic. That adds wiring, creates new thermal hotspots, and takes up space you used to ignore.

So the chassis needs:

- Sensor-friendly mounting points

- Smarter cable routing channels

- Cooling attention for small-but-hot components

This is also where a good OEM/ODM partner helps you avoid re-spins. You don’t wanna cut metal twice because a sensor harness doesn’t fit.

A quick buyer’s table: pain points → chassis choices

| Your pain point | The chassis feature that fixes it | Where IStoneCase fits |
|---|---|---|
| “Swaps take forever” | Tool-less rails, smooth slide, consistent mounting | Chassis Guide Rail (their rails cover 1U–4U and support heavier loads, which helps with GPU rigs) |
| “Thermals are unstable” | Clean front-to-back airflow, fan wall options, ducting | GPU Server Case and OEM/ODM airflow tuning |
| “We need bulk, consistent builds” | Repeatable BOM, standardized chassis families | IStoneCase OEM/ODM + bulk wholesale workflow |
| “Storage keeps bottlenecking” | Hot-swap bays, storage-focused chassis | NAS Case |
| “We mix boards and GPUs” | Flexible internal brackets, configurable PCIe slots | OEM/ODM mechanical tailoring for your spec |

Where IStoneCase shows up in a real deployment

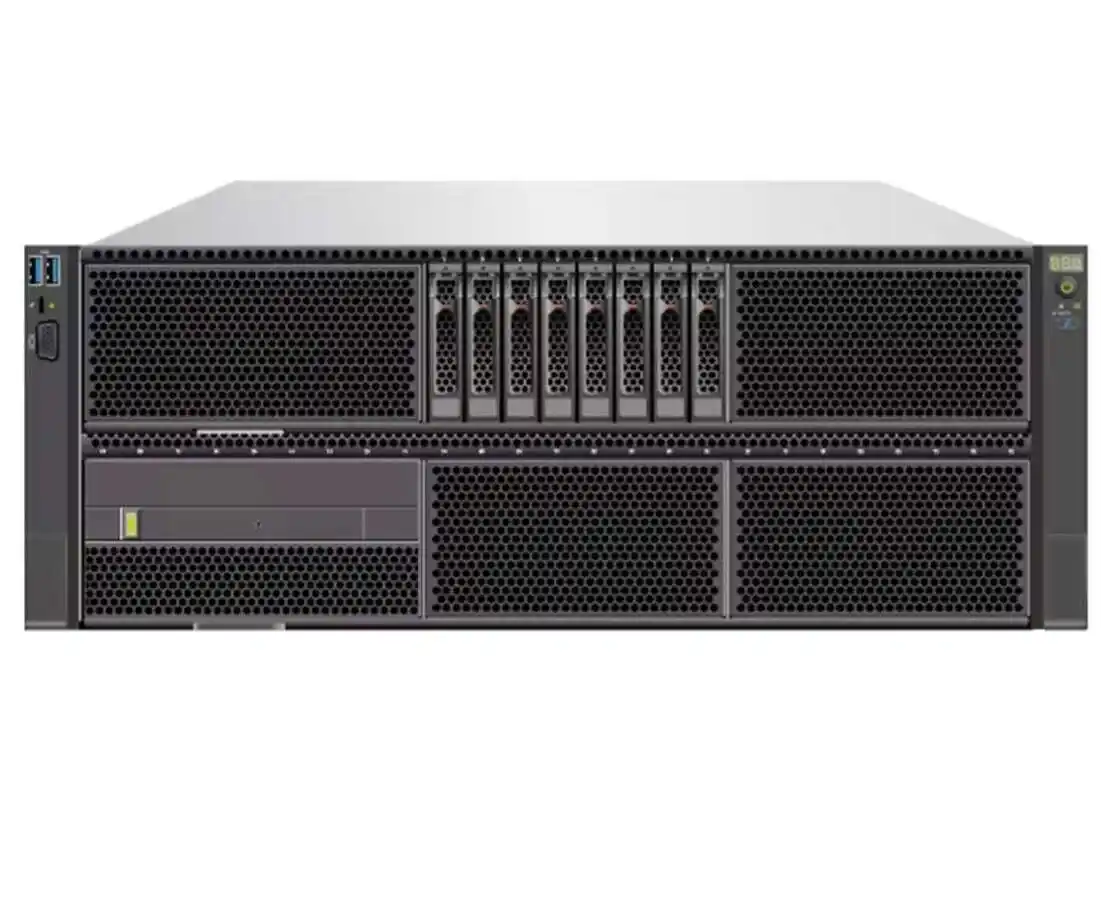

Say you’re rolling out a new AI pod for a mid-size enterprise. You might run:

- A few 4U/6U GPU nodes for training bursts

- Several compact inference boxes

- A storage chassis for fast data staging

That’s where IStoneCase (“The World’s Leading GPU/Server Case and Storage Chassis OEM/ODM Solution Manufacturer”) fits naturally. You can keep one supplier for GPU server cases, rackmount and wallmount options, NAS devices, ITX builds, and chassis guide rails, then adapt the details for your rack depth, motherboard, and GPU layout.

Links: 6U GPU Server Case • Chassis Guide Rail • Rackmount Case

And yeah, sometimes you still need an atx server case for a specific legacy card mix or a lab build. That’s fine. The point is: pick a chassis roadmap that matches where power, cooling, and service are going—so your “computer case server” choice doesn’t trap you next year.