You want a chassis that won’t choke your GPUs, won’t roast your rack, and won’t block your roadmap. Let’s keep it plain, fast, and hands-on. I’ll mix hard specs with real-world scenarios, then show where IStoneCase fits when you need OEM/ODM tweaks or bulk orders.

NVIDIA H100 vs H200 vs Blackwell GB200 NVL72: what changes in your chassis pick

- H100 / H200 (SXM5, HGX)

Best for high-bandwidth training. You get NVLink/NVSwitch inside the node, so GPU-to-GPU traffic flies. Expect 8× GPUs in 4U–6U chassis, SXM5 modules around the 700W class. That’s dense and hot, so plan airflow or liquid, not vibes.

- H200 NVL (PCIe)

Good for flexible, air-cooled racks and general inference. PCIe boards (dual-slot) fit into more standard server layouts. Still power-hungry, but easier to drop into mixed racks.

- Blackwell GB200 NVL72 (rack-scale)

It’s a full rack domain with 72 GPUs on an NVLink fabric, liquid-first. This isn’t a single “computer case server”; it’s room-level design. If Blackwell is on your roadmap, start plumbing now.

PCIe (H200 NVL) vs HGX (SXM5 + NVLink/NVSwitch) vs rack-scale NVL72

- PCIe path: choose a 4U “server pc case” or atx server case that supports dual-slot GPUs, 16-pin CEM5 power, and clean front-to-back airflow.

- HGX path: pick 5U–6U with high static pressure fans or DLC (direct liquid cooling), plus room for OCP 3.0 NICs and PCIe Gen5 I/O.

- NVL72 path: design for liquid loops, supply temperature, manifolds, and hot/cold aisle containment across the whole rack, not just one box.

Quick story: a team tried to stack four 8-GPU HGX nodes in a legacy rack with leaky containment. Air recirculated, throttling kicked in, and jobs crawled. One week later we added blanking panels, sealed tiles, tuned fan curves… boom, stable clocks. Don’t ignore the aisle.

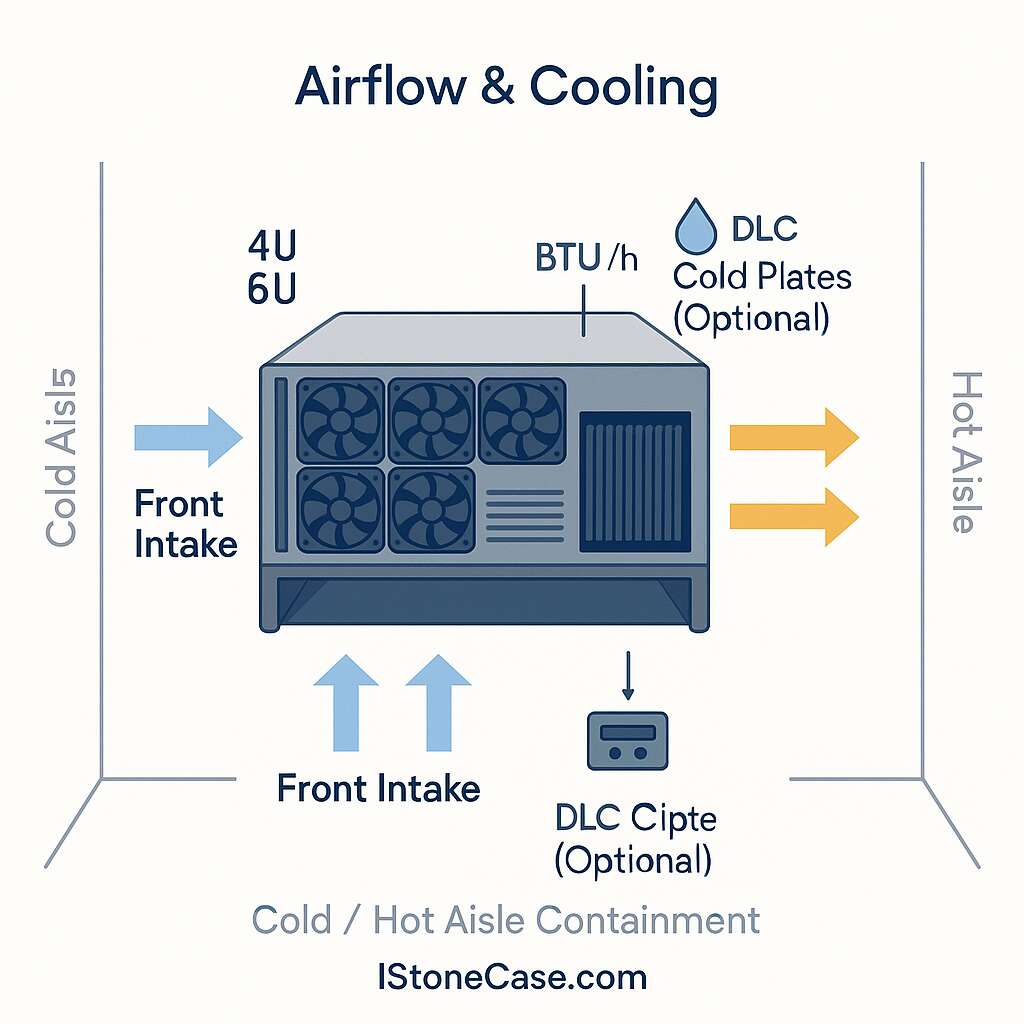

Cooling and airflow for HGX SXM5 and PCIe: CFM, BTU/hr, DLC vs air

What matters:

- CFM & pressure to push through dense heat sinks

- BTU/hr heat rejection to the room or the water loop

- DLC when watt density goes up or ambient is tough

| Platform (example) | Form factor | GPUs | Cooling | Approx. airflow | Heat load | PSU topology | Depth |
|---|---|---|---|---|---|---|---|
| DGX H100/H200 class | 8U node | 8× SXM5 | High-pressure air | ~1105 CFM | ~38,557 BTU/hr | 6× PSU (4+2) | Deep |
| Dell XE9680-class | 6U | 8× SXM5 | Air (front-to-back) | High CFM | High | 2800/3000/3200W options | ~1009 mm |
| GIGABYTE G593-class | 5U | 8× SXM5 | DLC (liquid) | Lower room CFM | Loop carries heat | 4+2 × 3000W | ~945 mm |
| ASUS ESC8000A-E12-class | 4U | up to 8× PCIe | Air (DLC optional) | Mid-High CFM | Mid-High | 2+2 × 2600/3000W | ~800 mm |

Numbers are typical ranges from vendor datasheets; verify per SKU and site policy before you rack it.

Rule of thumb: if you can run liquid, do it. If you must stay on air, design the cold aisle/hot aisle like you mean it—blanking panels, brush strips, no bypass.
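CFM and BTU/hr are tied together by simple physics, so you can sanity-check a datasheet row before you trust it. The sketch below is a rough estimate, not vendor math. It assumes sea-level air (about 1.2 kg/m³), which gives the common rule of thumb CFM ≈ 1.76 × W / ΔT(°C). The function names are made up for illustration.

```python
WATTS_TO_BTU_PER_HR = 3.412   # 1 W is about 3.412 BTU/hr
CFM_FACTOR_SEA_LEVEL = 1.76   # assumption: ~1.2 kg/m^3 air, cp ~1005 J/(kg*K)


def watts_to_btu_per_hr(watts: float) -> float:
    """Convert electrical load (W) to heat rejection (BTU/hr)."""
    return watts * WATTS_TO_BTU_PER_HR


def btu_per_hr_to_watts(btu_per_hr: float) -> float:
    """Convert heat rejection (BTU/hr) back to watts."""
    return btu_per_hr / WATTS_TO_BTU_PER_HR


def required_cfm(watts: float, delta_t_c: float) -> float:
    """Airflow needed to carry `watts` at a given inlet-to-exhaust rise (deg C)."""
    if delta_t_c <= 0:
        raise ValueError("delta_t_c must be positive")
    return CFM_FACTOR_SEA_LEVEL * watts / delta_t_c


def air_delta_t_c(watts: float, cfm: float) -> float:
    """Expected inlet-to-exhaust temperature rise (deg C) at a given airflow."""
    if cfm <= 0:
        raise ValueError("cfm must be positive")
    return CFM_FACTOR_SEA_LEVEL * watts / cfm


# Cross-check the first table row: ~38,557 BTU/hr moved by ~1105 CFM.
node_watts = btu_per_hr_to_watts(38_557)
rise_c = air_delta_t_c(node_watts, 1105)
print(f"{node_watts:.0f} W, about {rise_c:.1f} C rise across the node")
```

If the rise you compute is much bigger than what your hot aisle can take, that's the cue to look at more airflow, better containment, or liquid.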

Power delivery and redundancy for NVIDIA H100/H200 servers

Don’t only multiply GPU TDP × count. Add mainboard, NICs, NVMe, fans, and margin for boost. Common topologies you’ll see:

- N+1 or 4+2 PSUs per node for uptime

- C19/C20 or busbar feeds per rack

- 16-pin CEM5 for PCIe GPUs (H100/H200 NVL) with power sensing

If you’re tight on circuits, stage nodes across separate PDUs so a single feed loss doesn’t yank the cluster. Sounds basic, saves nights.
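Here's a minimal sketch of that budgeting logic: sum every consumer, add margin, check that the node still runs with its redundant PSUs gone, then stagger nodes across PDUs. All wattages below are illustrative placeholders, not measured values, and the class and function names are hypothetical. Plug in numbers from your own datasheets.

```python
from dataclasses import dataclass


@dataclass
class NodePowerBudget:
    gpu_count: int
    gpu_tdp_w: float
    cpu_w: float
    nic_w: float
    nvme_w: float
    fan_w: float
    other_w: float = 0.0    # mainboard, BMC, memory, etc.
    margin: float = 0.10    # assumed headroom for boost and transients

    def total_w(self) -> float:
        base = (self.gpu_count * self.gpu_tdp_w + self.cpu_w + self.nic_w
                + self.nvme_w + self.fan_w + self.other_w)
        return base * (1 + self.margin)


def psu_redundancy_ok(load_w: float, psu_rating_w: float,
                      total_psus: int, redundant_psus: int) -> bool:
    """True if the load still fits after losing all redundant PSUs."""
    usable_w = (total_psus - redundant_psus) * psu_rating_w
    return load_w <= usable_w


def stagger_across_pdus(nodes: list[str], pdus: list[str]) -> dict[str, list[str]]:
    """Round-robin nodes over PDUs so one feed loss hits only a slice."""
    layout: dict[str, list[str]] = {pdu: [] for pdu in pdus}
    for i, node in enumerate(nodes):
        layout[pdus[i % len(pdus)]].append(node)
    return layout


# Placeholder 8-GPU node: 700 W-class GPUs plus assumed platform overhead.
node = NodePowerBudget(gpu_count=8, gpu_tdp_w=700, cpu_w=700, nic_w=200,
                       nvme_w=100, fan_w=600, other_w=300)
load = node.total_w()
print(f"budget: {load:.0f} W")
print("4+2 x 3000 W ok:", psu_redundancy_ok(load, 3000, total_psus=6, redundant_psus=2))
print("2+2 x 3000 W ok:", psu_redundancy_ok(load, 3000, total_psus=4, redundant_psus=2))
print(stagger_across_pdus(["n1", "n2", "n3", "n4"], ["pdu-a", "pdu-b"]))
```

The point of the redundancy check is that a 4+2 layout only counts if four supplies can carry the whole budget on their own.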

Networking and I/O: NVLink/NVSwitch, OCP 3.0 NICs, OSFP, InfiniBand NDR

- Inside the box (HGX): NVLink/NVSwitch gives very high GPU-to-GPU bandwidth. That’s why training scales better on SXM5.

- Outside the box: plan OCP 3.0 or PCIe Gen5 slots for 400/800GbE or HDR/NDR InfiniBand. Use OSFP/QSFP-DD as your optic form factors.

- PCIe builds: H200 NVL supports 2- or 4-way NVLink bridges, but fabric shape ≠ HGX. Design your job graphs accordingly.

“server rack pc case”, “server pc case”, “computer case server”, “atx server case”

You’ll see these phrases in search. They point to the same core idea: rackmount chassis that can breathe, feed, and cool modern GPUs while leaving space for NICs and drives.

- Looking for a server rack pc case that fits deep fan walls and blind-mate rails? Check IStoneCase.

- Need a server pc case for eight PCIe GPUs and OCP 3.0 NICs? See IStoneCase OEM/ODM for tray layout and airflow zoning.

- Want a compact computer case server for edge inference? Try IStoneCase rackmount lines with short-depth options and dust filters.

- Planning an atx server case to prototype PCIe H200 NVL? IStoneCase can mod power harnesses and front mesh density.

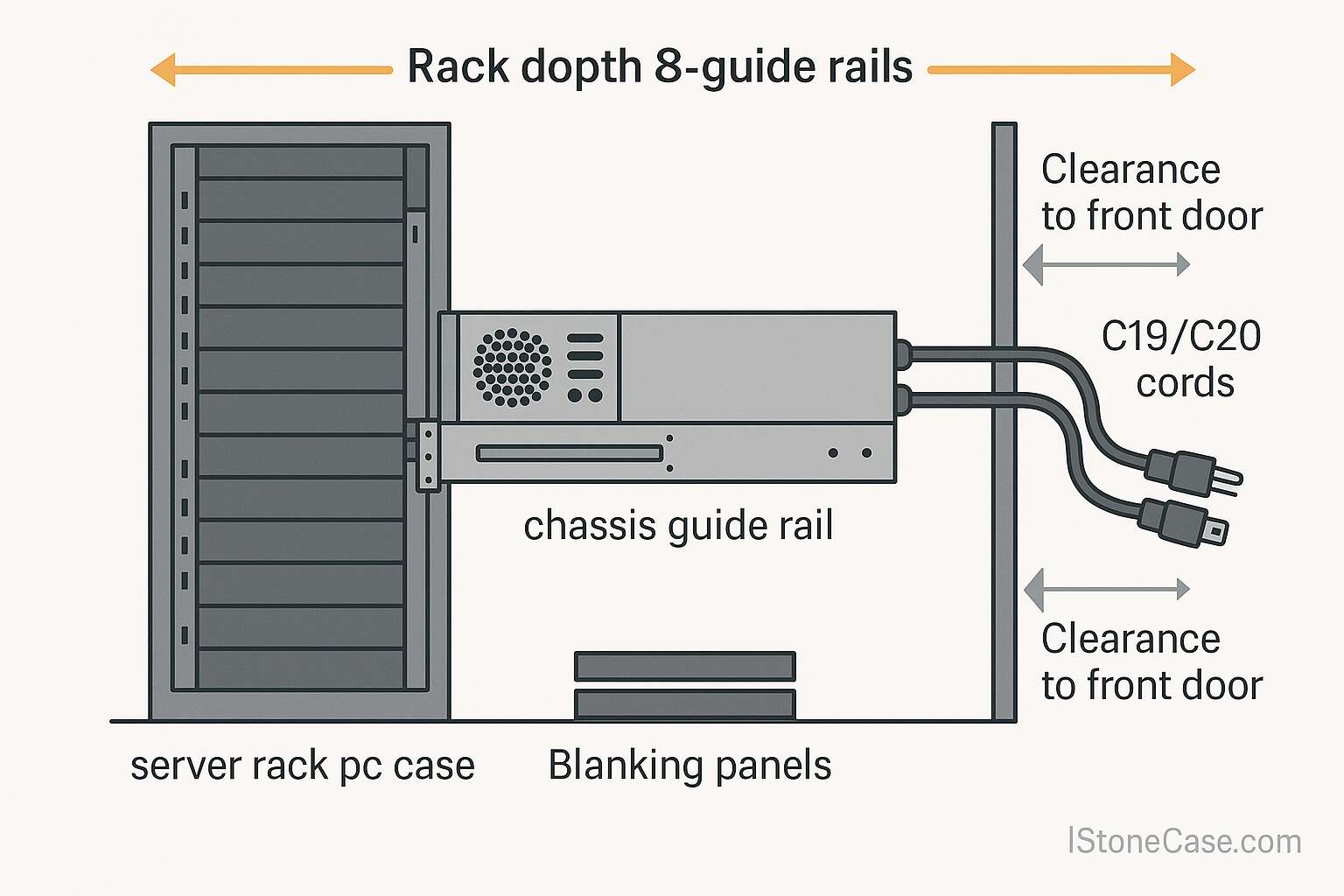

- Bulk rails or chassis guide rail kits for rollouts? IStoneCase bulk & wholesale supports that.

- Storage-heavy builds with NAS devices next to GPU nodes? IStoneCase NAS keeps it in-family.

- Full SKU families—GPU server case, server case, rackmount case, wallmount case, ITX case—all in one place: IStoneCase product catalog.

Typical deployment scenarios (pain points → fixes)

Air-cooled rack with inference (PCIe / H200 NVL)

- Pain: Mixed racks, uneven intake temps, throttling under burst.

- Fix: 4U chassis with straight-shot front-to-back airflow, tall fan wall, and sealed blanking. Keep GPUs on fixed TDP, tune fan curves for step loads. Start with IStoneCase 4U designs and ask for custom bezel mesh and fan RPM maps.
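A fan curve is just a map from temperature to fan duty. As a rough sketch, assuming a piecewise-linear curve (real BMC firmware may add hysteresis and per-zone logic), it could look like this. The breakpoints are invented for illustration, not tuned values.

```python
from bisect import bisect_right

# (temperature in deg C, fan duty in %) breakpoints. Illustrative assumptions only.
FAN_CURVE = [(30, 30), (50, 45), (65, 70), (80, 100)]


def fan_duty(temp_c: float, curve=FAN_CURVE) -> float:
    """Linearly interpolate fan duty (%) between curve breakpoints."""
    temps = [t for t, _ in curve]
    if temp_c <= temps[0]:
        return curve[0][1]      # clamp to the floor duty
    if temp_c >= temps[-1]:
        return curve[-1][1]     # clamp to full speed
    i = bisect_right(temps, temp_c)
    (t0, d0), (t1, d1) = curve[i - 1], curve[i]
    return d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0)


for temp in (25, 50, 57.5, 85):
    print(temp, fan_duty(temp))
```

For step loads, a steeper segment in the middle of the curve gets fans moving before the GPUs hit their throttle point.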

Training cluster without liquid (HGX / SXM5)

- Pain: High watt density, noisy fan walls, PUE drift.

- Fix: 5U–6U HGX chassis with high static pressure fans and OCP 3.0 NIC slots. Separate intake plenum from drive bays to avoid pre-heat. With IStoneCase you can add duct shrouds, hot-swap fan modules, and cable combs so swaps take seconds, not minutes.

Forward path to Blackwell rack-scale (GB200 NVL72)

- Pain: Today’s racks are air only; tomorrow needs liquid loops and supply temperature control.

- Fix: Start with DLC-ready chassis where possible. In parallel, plan manifolds, CDU capacity, and containment. IStoneCase can align your near-term cases with guide-rail systems, busbar clearances, and quick-disconnect service loops, so you can pivot without forklift upgrades.
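To get a first feel for CDU sizing, you can estimate coolant flow from heat load and the supply-to-return temperature rise. This is a back-of-envelope sketch that assumes plain water (about 1 kg/L, 4186 J/(kg·K)); glycol mixes carry less heat per liter, so treat the result as a lower bound. The 100 kW load and 10 °C rise are hypothetical inputs, and the function names are made up.

```python
WATER_CP_J_PER_KG_K = 4186.0   # assumption: plain water
WATER_DENSITY_KG_PER_L = 1.0   # assumption: plain water near room temperature


def coolant_flow_lpm(heat_w: float, delta_t_c: float) -> float:
    """Liters per minute needed to carry `heat_w` at a given loop temperature rise."""
    if delta_t_c <= 0:
        raise ValueError("delta_t_c must be positive")
    kg_per_s = heat_w / (WATER_CP_J_PER_KG_K * delta_t_c)
    return kg_per_s / WATER_DENSITY_KG_PER_L * 60.0


def cdu_headroom_fraction(cdu_capacity_w: float, heat_w: float) -> float:
    """Spare CDU capacity as a fraction of its rating (negative means overloaded)."""
    return (cdu_capacity_w - heat_w) / cdu_capacity_w


# Hypothetical rack: 100 kW into the loop, 10 C rise, 150 kW CDU.
print(f"{coolant_flow_lpm(100_000, 10):.0f} L/min")
print(f"{cdu_headroom_fraction(150_000, 100_000):.0%} headroom")
```

Run it with your facility's real supply temperature limits before you size manifolds or quick-disconnects.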

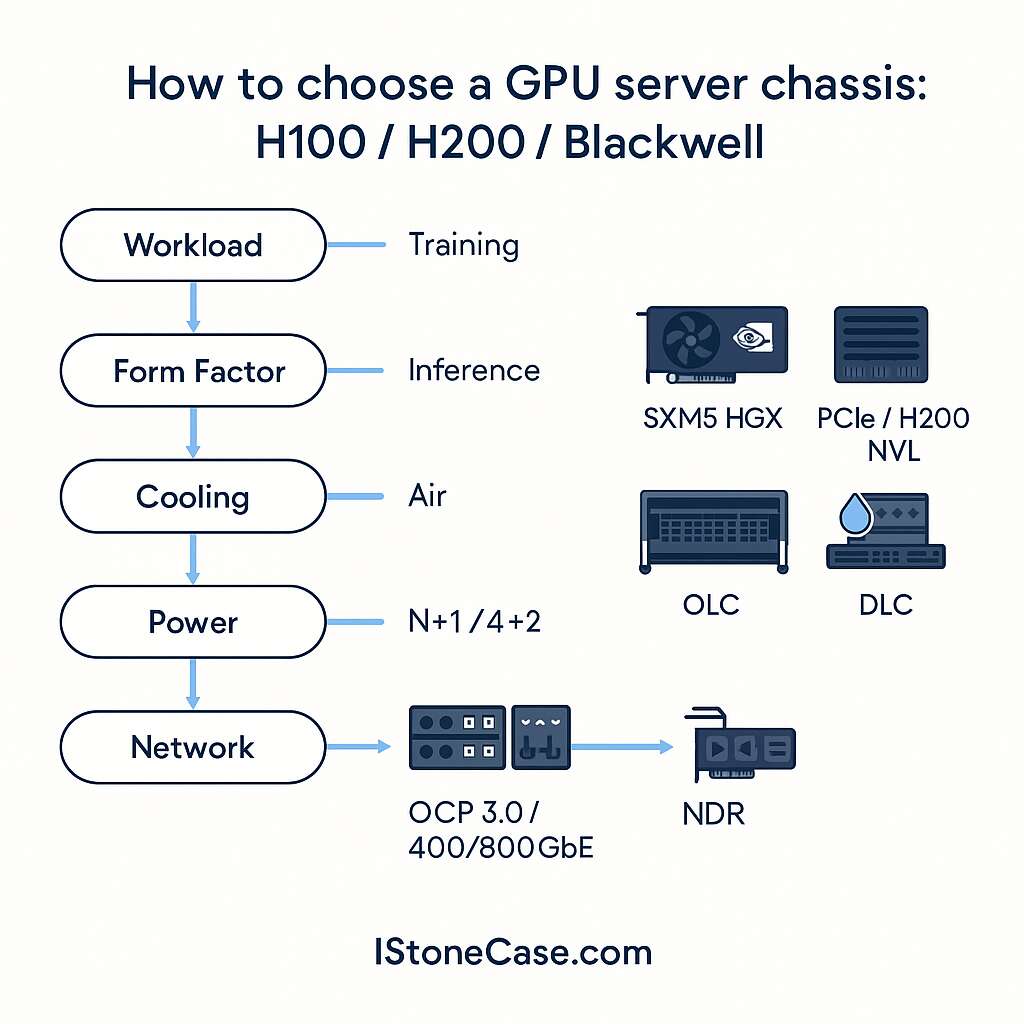

Quick decision checklist (use it before you buy)

- Workload: heavy training → HGX (SXM5); elastic inference → PCIe (H200 NVL); rack-scale future → Blackwell NVL72.

- Cooling: liquid if you can; if air, prove CFM, seal the aisle, and measure delta-T instead of guessing.

- Power: design for N+1/4+2 PSUs and split PDUs; confirm C19/C20 counts and cord reach.

- Depth & rails: many 8-GPU nodes push ~800–1000 mm deep; check guide rail spec and door clearance.

- I/O: reserve OCP 3.0 for 400/800GbE or HDR/NDR; plan fiber tray and bend radius.

- Serviceability: tool-less fans, slide rails, front-serviceable filters; mean time to swap should be minutes.

- Roadmap: if Blackwell is on deck, make DLC plumbing and containment part of today’s plan, not a “someday”.
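If you like checklists as code, part of the list above can be turned into a quick pre-buy check. This is only a sketch: the 100 mm rear clearance for cables and the pass/fail rules are assumptions, and the names are hypothetical. Adjust them to your rack and site policy.

```python
PLATFORM_BY_WORKLOAD = {
    "training": "HGX (SXM5)",
    "inference": "PCIe (H200 NVL)",
    "rack_scale": "Blackwell NVL72",
}


def pre_buy_issues(workload: str, chassis_depth_mm: float,
                   rack_usable_depth_mm: float, redundant_psus: int,
                   pdu_feeds: int, rear_clearance_mm: float = 100.0) -> list[str]:
    """Return a list of checklist items that fail; empty means no flags raised."""
    issues = []
    if workload not in PLATFORM_BY_WORKLOAD:
        issues.append(f"unknown workload: {workload}")
    if chassis_depth_mm + rear_clearance_mm > rack_usable_depth_mm:
        issues.append("depth: chassis plus cable clearance exceeds rack depth")
    if redundant_psus < 1:
        issues.append("power: no redundant PSU (want N+1 or 4+2)")
    if pdu_feeds < 2:
        issues.append("power: node is not split across separate PDUs")
    return issues


print(PLATFORM_BY_WORKLOAD["training"])
print(pre_buy_issues("training", 945, 1200, redundant_psus=2, pdu_feeds=2))
print(pre_buy_issues("inference", 1009, 1070, redundant_psus=0, pdu_feeds=1))
```

An empty list doesn't mean you're done. Cooling, I/O, and serviceability still need eyes on site.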

Why IStoneCase helps when you need OEM/ODM and scale

IStoneCase: The World’s Leading GPU/Server Case and Storage Chassis OEM/ODM Solution Manufacturer. We design and build GPU server cases, server cases, rackmount cases, wallmount cases, NAS devices, ITX cases, and chassis guide rail solutions for data centers, algorithm shops, enterprises, MSPs, researchers, devs, and builders. We tune airflow zoning, front mesh density, fan wall geometry, power harnessing, OCP 3.0 slot layout, and rail kits to your site. Need custom bezels, EMI gaskets, or liquid cold-plate routing? We’ll do that, at batch quantity, with your branding. See IStoneCase OEM/ODM to kick off specs.

Room-level notes you shouldn’t skip

| Topic | What to verify | Why it matters |
|---|---|---|
| Containment | Cold/hot aisles sealed, blanking panels installed | Prevents recirculation, holds inlet temps steady |
| Air path | Front-to-back only, no side leaks | Protects GPU boost clocks under burst |
| Power | Separate PDUs, N+1 or 4+2 at node level | Avoids brownouts when a feed drops |
| Cabling | Fiber tray, bend radius, OSFP/QSFP-DD fit | Clean optics = fewer mystery drops |
| Liquid (if any) | CDU headroom, quick-disconnects, drip trays | Safe service, predictable temps |
| Rails & depth | Proper guide rail match, door clearance | Fast swaps, no jammed slides |

On paper, a chassis is a box. In the rack, it’s a system that shapes your throughput and uptime. Pick the right server rack pc case early, and your training runs finish on time. Pick the wrong one, and you’ll watch GPUs idle while fans scream. Not fun.

Ready to spec? Start with your workload and cooling, then map power and rails, then lock I/O. If you want a partner that gets both the tech bits and the buying reality, talk to IStoneCase. We’ll build what you actually need, not what a brochure says.