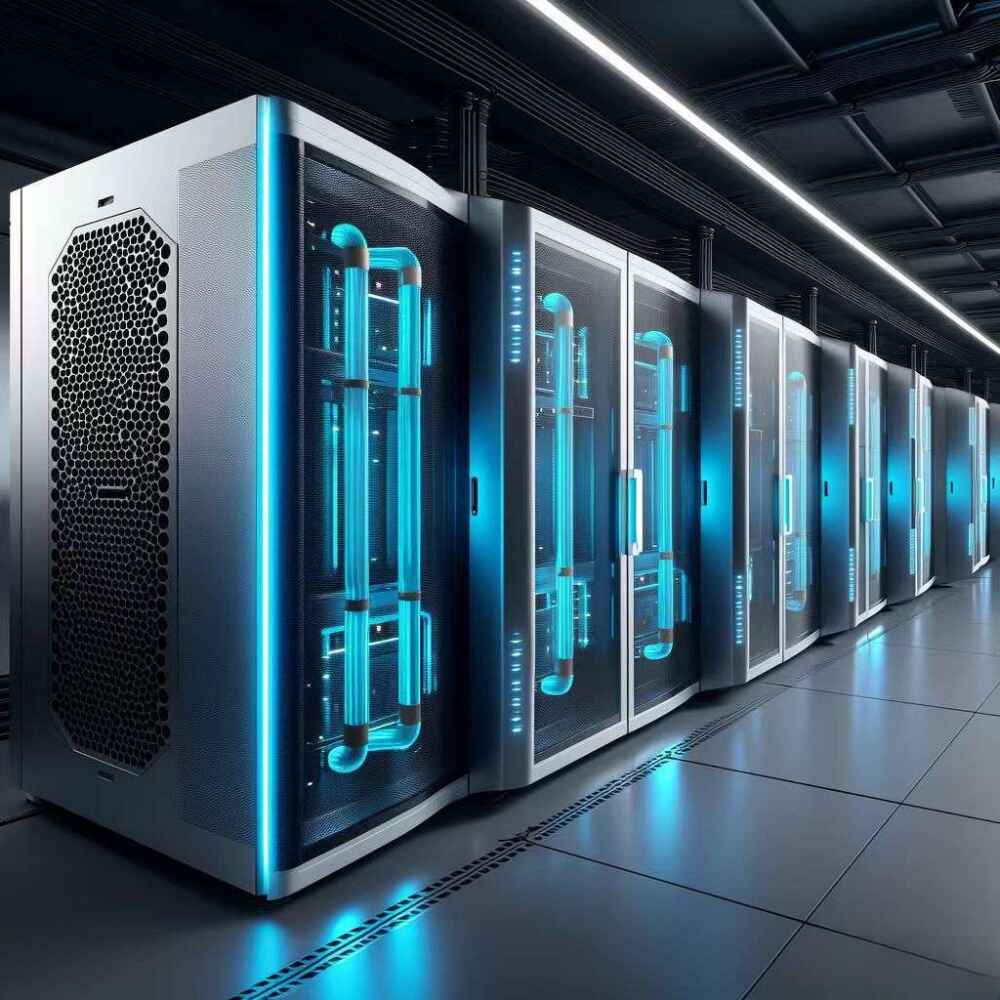

You’re planning dense AI racks. Fans scream, heat soars, PUE creeps up. Let’s talk about liquid-cooled server enclosures for H100/GB200: what they are, why they matter, and how to land them cleanly, with real-world scenarios and hardware you can actually buy.

NVIDIA GB200 NVL72 cabinet-level liquid cooling

Dense training stacks like GB200 don’t behave like a normal computer case server. GB200 systems are cabinet-scale, with direct-to-chip cooling, manifold distribution, and leak-safe quick disconnects. In plain words: coolant flows through cold plates mounted on the hot spots (GPU, CPU, VRM, DIMM, PSU) and carries heat out quickly and predictably. This isn’t “nice to have.” It’s the difference between stable full-load clocks and thermal throttling during long epochs.

Where your enclosure matters: the chassis needs cold plate clearance, rigid rail support, hose routing paths, and service-friendly front access. If you can’t pull a node, bleed a line, or swap a pump in minutes, your ops team will hate the design (and you).

Direct-to-chip liquid cooling for H100

For H100 clusters, liquid cold plates cut the chip-to-coolant delta-T and allow higher density per rack. That’s your ticket to more compute per square meter without overloading CRAC units. The enclosure must integrate:

- CPU/GPU/DIMM/VRM cold-plate mounting stiffness (no plate bowing).

- Manifold and dripless QD layout that avoids knuckle-scrapes and bend-radius violations.

- Cable troughs separate from wet lines (safety + serviceability).

- Rail stiffness for heavy trays (no sag, no tilt).

Short take: If the enclosure isn’t built for DLC, you’ll fight it every maintenance window.
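A second delta-T governs the plumbing: the coolant’s supply-to-return temperature rise. The sketch below uses the standard heat balance Q = ṁ·c_p·ΔT to estimate how much coolant a node needs. The 8 × 700 W GPU load (H100 SXM’s maximum TDP, GPU heat only), the water-like coolant properties, and the function name `required_flow_lpm` are illustrative assumptions, not vendor figures.

```python
# Minimal sketch: coolant flow needed to carry a heat load at a chosen
# supply-to-return temperature rise, from Q = m_dot * c_p * dT.
# Assumes a water-like coolant; glycol mixes have lower c_p and need more flow.

WATER_CP_J_PER_KG_K = 4186.0   # approximate specific heat of water
WATER_DENSITY_KG_PER_L = 1.0   # approximate density at typical loop temperatures


def required_flow_lpm(heat_w: float, delta_t_k: float,
                      cp: float = WATER_CP_J_PER_KG_K,
                      density: float = WATER_DENSITY_KG_PER_L) -> float:
    """Coolant flow in litres per minute to remove heat_w watts at a delta_t_k kelvin rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_s = heat_w / (cp * delta_t_k)   # kg/s of coolant
    return mass_flow_kg_s / density * 60.0       # convert kg/s to L/min


if __name__ == "__main__":
    # Hypothetical node: 8 GPUs at 700 W each, ignoring CPU/DIMM/VRM heat.
    gpu_heat_w = 8 * 700
    for dt in (5, 10, 15):
        print(f"dT={dt} K -> {required_flow_lpm(gpu_heat_w, dt):.1f} L/min")
```

Flow scales as 1/ΔT: halving the allowed rise doubles the flow the manifolds and QDs must pass, which is one reason hose and QD layout belongs in the enclosure discussion.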

GB200 is cabinet-scale; your chassis still decides uptime

“Liquid-cooled” is not just fluid. It’s also service loops, rails, and door swing. Here’s how enclosure choices influence availability:

- Swappable coils and pumps, with clear CDU access: mean time to service (MTTS) drops and failover improves.

- Front-I/O layout: fewer aisle collisions, safer hose routing.

- Rails with anti-racking features: No micro-misalignments that stress QDs (you really don’t want that drip).
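Why MTTS matters for availability comes down to one formula: steady-state availability A = MTBF / (MTBF + MTTR). The sketch below treats MTTS as the repair-time term; the 5,000-hour MTBF and the 4-hour vs. 1-hour service times are hypothetical numbers chosen only to show the shape of the trade-off.

```python
# Minimal sketch: steady-state availability A = MTBF / (MTBF + MTTR),
# using mean time to service as the repair term. All inputs are hypothetical.

HOURS_PER_YEAR = 8760.0


def availability(mtbf_h: float, mttr_h: float) -> float:
    """Fraction of time a node is up, given mean time between failures and mean repair time."""
    return mtbf_h / (mtbf_h + mttr_h)


def downtime_hours_per_year(mtbf_h: float, mttr_h: float) -> float:
    """Expected annual downtime implied by the availability figure."""
    return (1.0 - availability(mtbf_h, mttr_h)) * HOURS_PER_YEAR


if __name__ == "__main__":
    mtbf = 5000.0  # hypothetical hours between service events for one node
    for mttr in (4.0, 1.0):  # hypothetical: awkward chassis vs. front-service chassis
        print(f"MTTR={mttr} h -> A={availability(mtbf, mttr):.5f}, "
              f"downtime={downtime_hours_per_year(mtbf, mttr):.1f} h/yr")
```

With these placeholder inputs, cutting service time from 4 h to 1 h drops expected downtime from roughly 7 h to under 2 h per node per year.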

For buyers who ask: “Do we need water to the building?”

Some sites won’t bring facility water to the white space on day one. You can still deploy, using liquid-to-air rear-door or in-row heat exchangers. It’s not one-size-fits-all, but it lets you ship GPUs now and plan facility water later. The enclosure should reserve space and mounting points for either direction: facility loop later, rear-door coil today.

Server rack pc case & chassis choices (H100/GB200-ready)

IStoneCase ships rackmount lines that map to real deployment patterns—not lab fantasies:

- 1U–6U families for control, storage, and mixed GPU nodes. See: Server Case · 1U Server Case · 2U Server Case · 4U Server Case · 6U Server Case

- Rails that don’t flex under wet weight. See: Chassis Guide Rail · 2U Chassis Guide Rail · 4U Chassis Guide Rail

- OEM/ODM paths for custom manifolds, cold-plate clearances, and hose strain relief. See: Customization Server Chassis Service

You’ll see the keywords you care about—server rack pc case, server pc case, computer case server, atx server case—not as fluff, but as the actual building blocks you’ll deploy in a live rack.

Practical scenarios you’ll meet (and how the enclosure solves them)

(1) Mixed H100 training + storage in the same row

You run dense H100 nodes (typically 8 GPUs each) for training. The next rack hosts NVMe-heavy storage. Liquid cooling keeps GPU nodes dense; storage stays air-cooled. The enclosure must isolate liquid lines from storage airflow, and rails must carry higher mass for GPU trays. If rails chatter or twist, QDs loosen over time. Bad news.
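A quick static check for the “higher mass” point: wet tray mass is dry mass plus the coolant held in plates and hoses, and the rail rating should cover it with margin. The masses, coolant volume, 1.5× safety factor, and function names below are hypothetical placeholders; use the rail vendor’s certified rating and your own measured tray mass.

```python
# Minimal sketch: check a rail kit's rated load against a wet GPU tray.
# All masses, volumes, and the safety factor are hypothetical placeholders.

def wet_mass_kg(dry_mass_kg: float, coolant_volume_l: float,
                coolant_density_kg_per_l: float = 1.0) -> float:
    """Tray mass including the coolant held in cold plates and hoses."""
    return dry_mass_kg + coolant_volume_l * coolant_density_kg_per_l


def rail_ok(rail_rating_kg: float, dry_mass_kg: float, coolant_volume_l: float,
            safety_factor: float = 1.5) -> bool:
    """True if the rail rating covers the wet mass times a safety factor."""
    return wet_mass_kg(dry_mass_kg, coolant_volume_l) * safety_factor <= rail_rating_kg


if __name__ == "__main__":
    print(rail_ok(rail_rating_kg=100, dry_mass_kg=60, coolant_volume_l=2))  # 62 kg * 1.5 = 93 kg
    print(rail_ok(rail_rating_kg=90, dry_mass_kg=60, coolant_volume_l=2))   # 93 kg exceeds 90 kg
```

This is a load check only; it says nothing about the twist and chatter that actually loosen QDs, which is why the anti-racking features above still matter.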

(2) GB200 NVL72 cabinet drops into brownfield DC

Facility water isn’t ready. You drop in a rear-door liquid-to-air heat exchanger first, target stable inlet temps, and plan a facility loop retrofit. The enclosure’s rear clearance and hinge geometry decide whether the door coil even fits. We’ve seen too many installs fail here because the door can’t open fully. Don’t do that.
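Hinge geometry is easy to under-budget because a thick coil door sweeps farther than its width. Under a simplified model (an assumption: the door is a rigid rectangle of width w and thickness t pivoting about a hinge on the rack’s rear face), its far corner traces a circle of radius √(w² + t²), peaking partway through the swing. The 600 mm × 250 mm door and the function names are hypothetical.

```python
import math

# Minimal sketch: rear clearance a hinged coil door needs to swing open.
# Simplified model (an assumption): rigid rectangular door, width w and
# thickness t, pivoting about a hinge on the rack's rear face. The far corner
# sweeps a circle of radius sqrt(w^2 + t^2), which is the clear depth needed.

def swing_clearance_mm(door_width_mm: float, door_thickness_mm: float) -> float:
    """Radius swept by the door's far corner about the hinge axis."""
    return math.hypot(door_width_mm, door_thickness_mm)


def door_fits(door_width_mm: float, door_thickness_mm: float,
              available_clearance_mm: float) -> bool:
    """True if the door can swing through its full arc without hitting an obstruction."""
    return swing_clearance_mm(door_width_mm, door_thickness_mm) <= available_clearance_mm


if __name__ == "__main__":
    # Hypothetical coil door: 600 mm wide, 250 mm thick.
    print(f"{swing_clearance_mm(600, 250):.1f} mm")  # 650.0 mm
    print(door_fits(600, 250, 700))                  # True
    print(door_fits(600, 250, 620))                  # False
```

Note that 620 mm of clearance looks sufficient for a 600 mm door, yet the swing still clips; the thickness term is what catches brownfield installs.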

(3) 24/7 inference with tight SLO

Latency variance is your KPI, not peak TFLOPS. Liquid cold plates shave the thermal spikes that cause clock-frequency swings. A properly braced server pc case controls vibration, keeps hoses off fans, and speeds hot-swap. That’s how you keep p95 latency steady through traffic bursts.
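To make “steady p95” measurable, here is a small sketch that computes nearest-rank percentiles over a latency window and a p95/p50 spread ratio as a rough proxy for thermally induced jitter. The sample windows, the 1.5× alert threshold, and the function names are hypothetical; production monitoring stacks usually compute percentiles for you.

```python
import math

# Minimal sketch: nearest-rank percentiles for a latency window, plus a
# p95/p50 spread ratio as a rough tail-steadiness signal.
# Sample data and the 1.5x alert threshold are hypothetical.

def percentile_nearest_rank(samples, p):
    """Smallest sample value with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("samples must be non-empty")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # multiply first to avoid float drift
    return ordered[rank - 1]


def tail_spread(samples):
    """Ratio of p95 to p50 latency; closer to 1.0 means a steadier tail."""
    return percentile_nearest_rank(samples, 95) / percentile_nearest_rank(samples, 50)


if __name__ == "__main__":
    steady = [10 + (i % 5) for i in range(100)]   # 10..14 ms, evenly repeated
    throttled = steady[:90] + [40] * 10           # 10% of requests hit a slow patch
    for name, window in (("steady", steady), ("throttled", throttled)):
        spread = tail_spread(window)
        print(f"{name}: p95={percentile_nearest_rank(window, 95)} ms, "
              f"spread={spread:.2f}, alert={spread > 1.5}")
```

Both windows have the same median, so a median-only dashboard would miss the throttled case; the tail ratio flags it.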

(4) Lab→Prod scale-up

Your lab starts on atx server case prototypes. Production needs the same board keep-outs, tubing angles, and port locations. ODM continuity avoids re-qualification hell and oddball leak audits.

Specification table (what to check before you buy)

| Requirement | Why it matters in H100/GB200 liquid cooling | What to look for in the server pc case |
|---|---|---|
| Direct-to-chip cold plate clearance | Prevents plate tilt → uneven TIM spread | Machined standoffs, backplates, torque spec labels |
| Manifold routing & QD accessibility | Faster service, lower leak risk | Front-service manifolds, labeled wet/dry zones |
| Chassis Guide Rail stiffness | Heavy wet nodes won’t rack or sag | Certified load rating, anti-racking latch |
| Front-I/O layout | Safer hose paths, fewer aisle collisions | Cable gutters separate from coolant lines |
| Drain/fill ports & bleed points | Shorter maintenance windows | Tool-less caps, drip trays, clear marking |
| Sensor & leak-detect integration | Early warning beats outage | Probe mounts, cable passthroughs with grommets |
| Rear-door HX compatibility | Brownfield drop-in without facility water | Door swing radius spec, hinge strength, depth headroom |
| ATX/server board keep-outs | Lab→Prod without redesign | ATX/E-ATX support, GPU bracket grid, PSU bays |

This table isn’t theory; it’s the checklist teams use before a PO. Miss any one line and your time to repair (TTR) balloons during peak loads.
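If you track vendor responses in a script rather than a spreadsheet, the specification table above maps naturally onto a simple checklist. The requirement keys mirror the table rows, but the key names, the `missing_requirements` helper, and the candidate dict are hypothetical; the candidate is not data for any real product.

```python
# Minimal sketch: the specification table encoded as a pre-PO checklist.
# Keys mirror the table rows; the candidate below is a hypothetical vendor response.

CHECKLIST = (
    "cold_plate_clearance",
    "manifold_qd_access",
    "rail_stiffness",
    "front_io_layout",
    "drain_fill_bleed",
    "leak_detect_integration",
    "rear_door_hx_compat",
    "board_keepouts",
)


def missing_requirements(candidate: dict) -> list:
    """Checklist items the candidate does not explicitly satisfy (absent or False)."""
    return [item for item in CHECKLIST if not candidate.get(item, False)]


if __name__ == "__main__":
    candidate = {item: True for item in CHECKLIST}
    candidate["rear_door_hx_compat"] = False  # e.g. no door swing radius spec provided
    del candidate["drain_fill_bleed"]         # vendor did not answer this row
    gaps = missing_requirements(candidate)
    print("OK to issue PO" if not gaps else f"Gaps: {gaps}")
```

Treating an unanswered row the same as a “no” is deliberate: a missing answer is exactly the kind of gap that surfaces later as a long repair window.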

Why IStoneCase (business value, said plainly)

- Predictable lead times and repeatability. ODM means the second hundred units match the first hundred: same hole patterns, same rail response, same cable clearances. Less “surprise during install.”

- Thermal-first mechanicals. Braced frames keep plates flat, rails don’t yaw, and doors don’t collide with rear HX. Small stuff, giant impact.

- Lifecycle service. Bleed points, drain pans, labeling—tiny additions that cut minutes every time you crack a loop. Over a year, that’s real uptime.

- Portfolio that maps to your row. Control plane in 1U, storage in 4U/6U, GPU trays where you need them. You can mix server rack pc case with computer case server builds without changing your rail language.

- No-drama ODM. Bring your board files, plate footprint, and hose spec. We’ll tune bracketry, standoffs, and grommets to your BOM and get you through validation.
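The “Lifecycle service” point is simple arithmetic, but it is worth running with your own numbers. The sketch below multiplies minutes saved per loop opening by service frequency and fleet size; every input (10 minutes, 4 openings per node per year, 100 nodes) and the function name are hypothetical placeholders, not measured results.

```python
# Minimal sketch: how per-service minutes add up across a fleet over a year.
# All inputs are hypothetical placeholders; substitute your own service logs.

def annual_hours_saved(minutes_saved_per_service: float,
                       services_per_node_per_year: float,
                       node_count: int) -> float:
    """Fleet-wide maintenance hours saved per year."""
    return minutes_saved_per_service * services_per_node_per_year * node_count / 60.0


if __name__ == "__main__":
    # Hypothetical: 10 minutes saved per loop opening, 4 openings per node per year, 100 nodes.
    print(f"{annual_hours_saved(10, 4, 100):.1f} h/yr")  # 66.7 h/yr
```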

Buyer FAQ (jargon on purpose)

Q: Will this fit my “atx server case” pilots?

A: If your lab boards are ATX/E-ATX, we keep the keep-out zones identical in production enclosures. No mystery shims, no plate bow.

Q: Our ops team fears leaks.

A: Good. So do we. We spec dripless QDs, hard stops on bend radius, and leak-detect probes near low points. Also: we route wet lines away from fan inlets, so a single drop won’t atomize. It sounds picky, but it’s why we sleep at night.
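Leak-detect probes at low points are only as useful as the logic reading them. Here is a minimal debounce sketch, assuming a probe that is polled periodically and reports True when wet; requiring N consecutive wet readings filters single-sample noise at the cost of a short detection delay. The polling interface, the default of 3, and the function name are hypothetical, not any specific BMC or sensor API.

```python
from typing import Iterable, Optional

# Minimal sketch: debounced leak alarm for a probe at a loop low point.
# Assumption: the probe is polled periodically and returns True when wet.
# N consecutive wet readings are required to trip; N is a hypothetical default.

def first_alarm_index(readings: Iterable[bool], consecutive_needed: int = 3) -> Optional[int]:
    """Poll index at which the alarm trips, or None if it never does."""
    if consecutive_needed < 1:
        raise ValueError("consecutive_needed must be at least 1")
    run = 0
    for i, wet in enumerate(readings):
        run = run + 1 if wet else 0   # reset the streak on any dry reading
        if run >= consecutive_needed:
            return i
    return None


if __name__ == "__main__":
    noisy_dry = [False, True, False, False, True, False]  # isolated blips: no alarm
    real_leak = [False, False, True, True, True, True]    # sustained wet: alarm
    print(first_alarm_index(noisy_dry))  # None
    print(first_alarm_index(real_leak))  # 4
```

A larger N means fewer false alarms but slower detection; how you set it depends on your polling interval and how quickly you need to isolate a loop.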

Q: Brownfield site, no building water yet.

A: Start with rear-door coil or in-row HX, then plan facility loop. Our doors and rails are rated for the heavier assembly and swing radius. No re-buy later.

Q: Can we hit shipping deadlines?

A: Yep, with locked rail kits and repeatable panel sets. ODM’s whole point is fewer unknowns. Minor typos in an SOW happen (environment names and all), but hardware should not surprise you.

Quick links (choose your path)

- Server Case — overview & specs

- 1U Server Case — control plane / front-I/O builds

- 2U Server Case — mixed GPU / storage nodes

- 4U Server Case — high-mass trays, better airflow corridors

- 6U Server Case — deep GPU trays, generous hose radius

- Chassis Guide Rail — load-rated rails for wet weight

- Customization Server Chassis Service — ODM for manifolds, keep-outs, leak-detect

Bottom line

If you’re serious about H100/GB200, the enclosure is not a metal box—it’s your cooling strategy, your service plan, and your uptime promise. Choose a server rack pc case that respects DLC realities, builds in rail stiffness, and leaves space for the path you’re on (rear-door today, facility loop tomorrow). With IStoneCase, you get a server pc case platform and atx server case options that scale from lab pilots to cabinet-level rollouts—without rewiring your row or your weekend.