You’ve got hungry GPUs, finite lanes, and a chassis that has to move crazy heat. Let’s make the lanes work for you—without turning the backplane into a science project. I’ll keep it plain-spoken, tactical, and tied to real gear.

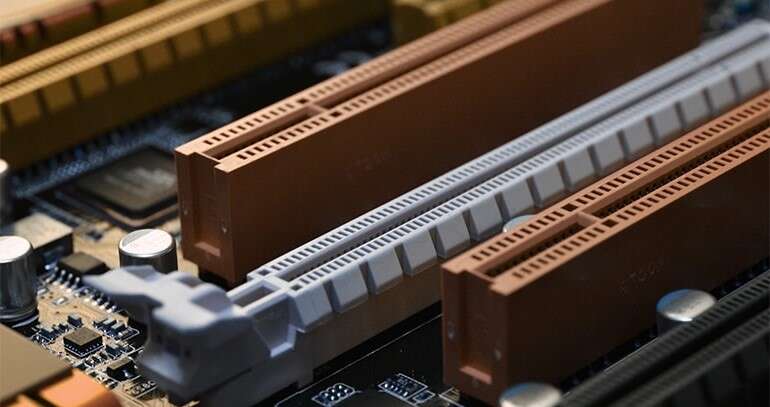

PCIe Gen4/Gen5 lane planning in GPU chassis

Start with the lane ceiling, not the wish list. Count what the CPU/board can natively expose, then decide what must be switched or retimed. At Gen4/Gen5 speeds, long traces and stacked connectors eat margin fast; a retimer-first mindset keeps 32 GT/s links honest. For multi-GPU rigs, plan the x16 priorities (GPUs first, then NIC/DPU, then NVMe) and leave room for the network uplink you’ll inevitably add later. Yeah, future-you will thank you.
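To put numbers on the lane ceiling, here’s a rough sketch of per-link bandwidth. It assumes only the raw signaling rates (16 GT/s for Gen4, 32 GT/s for Gen5) and 128b/130b encoding; the function name is made up for illustration, and real throughput lands lower once packet overhead is counted.

```python
# Raw per-lane signaling rates in GT/s for PCIe Gen4 and Gen5.
RAW_GTS = {4: 16.0, 5: 32.0}

def link_bandwidth_gbytes(gen: int, width: int) -> float:
    """Approximate one-direction bandwidth in GB/s after 128b/130b encoding.

    Ignores TLP/DLLP protocol overhead, so treat the result as an upper bound.
    """
    encoding = 128 / 130
    return RAW_GTS[gen] * width * encoding / 8  # bits -> bytes

for gen, width in [(4, 16), (5, 16), (5, 8), (5, 4)]:
    bw = link_bandwidth_gbytes(gen, width)
    print(f"Gen{gen} x{width}: ~{bw:.1f} GB/s per direction")
```

A Gen5 x8 link carries about what a Gen4 x16 does, which is why trimming some links to x8 can be a fair trade when lanes run short.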

Backplanes & slots: retimer-first, switch when lanes run out

When slots multiply, your backplane becomes a traffic cop. Retimers recover the signal and re-clock it so each hop behaves; switches (PCIe fan-out) turn a few x16 uplinks into many x16 downlinks that share the uplink’s bandwidth. Add back-drill, short stubs, low-loss materials, and MCIO (SFF-TA-1016) / SlimSAS (SFF-8654) cable options to protect your SI budget. Keep retimers near connectors and long cable runs; don’t be shy, because Gen5 isn’t forgiving.
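The retimer-or-not call can be roughed out as a loss sum. This sketch assumes the commonly cited end-to-end channel budgets of about 28 dB at 8 GHz for Gen4 and 36 dB at 16 GHz for Gen5. Every per-segment figure below is a placeholder invented for illustration, so swap in measured or vendor-supplied numbers for your board, connectors, and cables.

```python
# Approximate end-to-end insertion-loss budgets in dB at Nyquist.
# Assumption: commonly cited figures; confirm against the spec and your silicon.
LOSS_BUDGET_DB = {4: 28.0, 5: 36.0}

def needs_retimer(gen: int, segments_db: dict[str, float], margin_db: float = 3.0) -> bool:
    """Return True when summed channel loss plus margin exceeds the budget."""
    total = sum(segments_db.values())
    return total + margin_db > LOSS_BUDGET_DB[gen]

# Hypothetical Gen5 channel: every value is illustrative, not measured.
channel = {
    "cpu_package": 8.5,
    "board_trace": 12.0,
    "mcio_connectors": 3.0,
    "cable": 7.0,
    "gpu_card": 9.5,
}

total = sum(channel.values())
print(f"total loss: {total:.1f} dB, retimer needed: {needs_retimer(5, channel)}")
```

The `margin_db` default is also an assumption; pick a guard band that matches how much you trust your loss numbers.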

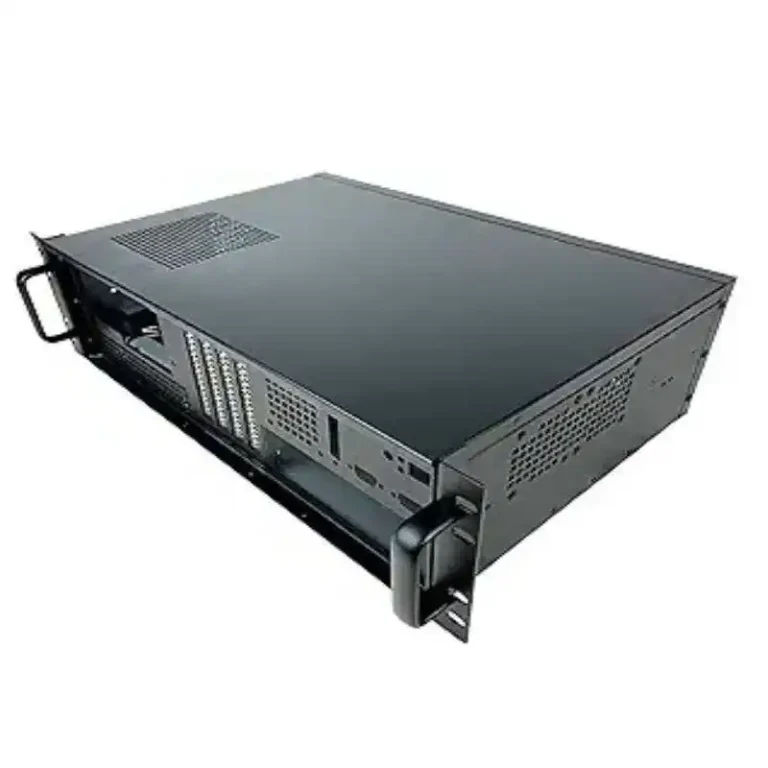

server rack pc case: airflow, cabling, and service loops

If your deployment lives in racks (most do), cooling and service matter as much as lanes. Choose a server rack pc case with straight-through airflow, front-to-back cable discipline, and room for MCIO harnesses without hard 90° bends. Side benefit: clean cable dressing lowers risk of micro-movements that nudge marginal links into flake-town over time. Not cute, just true.

server pc case vs computer case server: the slot map actually changes

People say “server pc case” and “computer case server” like they’re the same. They’re not when you inspect slot topologies. Enterprise chassis route x16 lanes with retimer pads, bifurcation options (x16 → x8/x8 or x16 → x4/x4/x4/x4), and proper connector families. Consumer-ish enclosures often lack those PCB provisions, so you end up fighting both thermals and signal integrity. Save your weekend—pick a real server chassis.
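Here’s a rough sketch of how bifurcation constrains a device mix. It checks whether a list of device widths fits one x16 slot using only the splits named above (x16, x8/x8, x4/x4/x4/x4). The mode table and function name are illustrative; what your platform actually supports depends on the CPU, board, and BIOS.

```python
# Bifurcation modes for one x16 slot, limited to the splits named in the text.
# Real boards may expose more (or fewer); check the BIOS and board manual.
MODES = [(16,), (8, 8), (4, 4, 4, 4)]

def pick_mode(device_widths: list[int]):
    """Return the first mode whose ports host every device at full width, else None."""
    wanted = sorted(device_widths, reverse=True)
    for mode in MODES:
        # One device per port; widest devices are matched to the widest ports.
        if len(wanted) <= len(mode) and all(w <= p for w, p in zip(wanted, mode)):
            return mode
    return None

print(pick_mode([16]))       # one GPU
print(pick_mode([8, 8]))     # two x8 devices
print(pick_mode([4, 4, 4]))  # three NVMe drives
print(pick_mode([8, 4]))     # mixed widths
```

The mixed case lands on x8/x8 and leaves four lanes of the second port idle. That waste is the price of coarse bifurcation.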

atx server case: motherboard real estate and slot spacing

An atx server case gives you compatibility, but watch slot pitch, air shrouds, and PSU partitioning. Dual-slot or even triple-slot accelerators need breathing space; so does a 400G NIC. Tight spacing turns into hot recirculation, which turns into down-clocked GPUs, which… you get it. Better to lock a 4U/5U/6U layout from day one and route lanes to match the physical thermals.

Quick lane budget (illustrative, not gospel)

This is a first-pass sizing tool. Your actual board, NUMA layout, and backplane topology will tweak it. Use it to avoid magical thinking.

| Platform (typical) | Devices (example) | Lane usage (rough) | Notes |
|---|---|---|---|
| 1× high-lane CPU | 4× GPUs (x16) + 1× 400G NIC (x16) + 4× NVMe (x4) | ≈ 96 lanes | GPU gets priority; you still have headroom for another x16 or two x8 if SI allows. |
| 1× high-lane CPU | 8× GPUs (x16) | ≈ 128 lanes | Usually maxes native lanes; add NIC via switch or drop some links to x8. |
| 2× CPU platform | 8× GPUs (x16) + 2× 400G NIC (x16) + 4× NVMe (x4) | ≈ 176 lanes | Over budget natively. Use a Gen5 switch backplane for fan-out or rebalance widths. |

If you’re thinking “we’ll squeeze it somehow”—sure, but Gen5 isn’t a playground. Plan the fan-out.
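The arithmetic behind the table is simple enough to script. A minimal sketch follows, with the three example builds encoded as count-and-width pairs. The `NATIVE_LANES` value of 128 is an assumption standing in for a single high-lane CPU. Dual-socket platforms expose a different total, so plug in your own figure.

```python
def lanes_needed(devices: dict[str, tuple[int, int]]) -> int:
    """Sum lanes for a device mix given as name -> (count, width)."""
    return sum(count * width for count, width in devices.values())

builds = {
    "4 GPU + NIC + NVMe": {"gpu": (4, 16), "nic": (1, 16), "nvme": (4, 4)},
    "8 GPU": {"gpu": (8, 16)},
    "8 GPU + 2 NIC + NVMe": {"gpu": (8, 16), "nic": (2, 16), "nvme": (4, 4)},
}

NATIVE_LANES = 128  # assumption: replace with your platform's usable lane count

for name, devices in builds.items():
    need = lanes_needed(devices)
    status = "fits" if need <= NATIVE_LANES else f"over by {need - NATIVE_LANES}"
    print(f"{name}: {need} lanes ({status})")
```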

Backplane & cabling checklist (Gen4/Gen5 sanity)

| Item | What to do | Why it matters |
|---|---|---|
| Retimers on long hops | Put them near MCIO/slot connectors | Recover eye, tame jitter; Gen5 hates loss. |
| Back-drill & short stubs | Remove unused via barrels | Stubs reflect; reflections kill margin at 32 GT/s. |
| Connector choice | Use MCIO / SlimSAS for internal runs | Known insertion-loss behavior; easier cable management. |
| Bifurcation options | Keep x16→x8/x8 or x4/x4/x4/x4 in BIOS & board | Lets you right-size lanes per device mix. |
| Thermals first | Duct GPUs, isolate PSU heat, add pressure ceiling | Stable temps stabilize links (yes, really). |
| Service loops | Leave slack for swaps | Reduce micro-strain that degrades marginal links. |

Real-world scenarios (you’ll likely hit one)

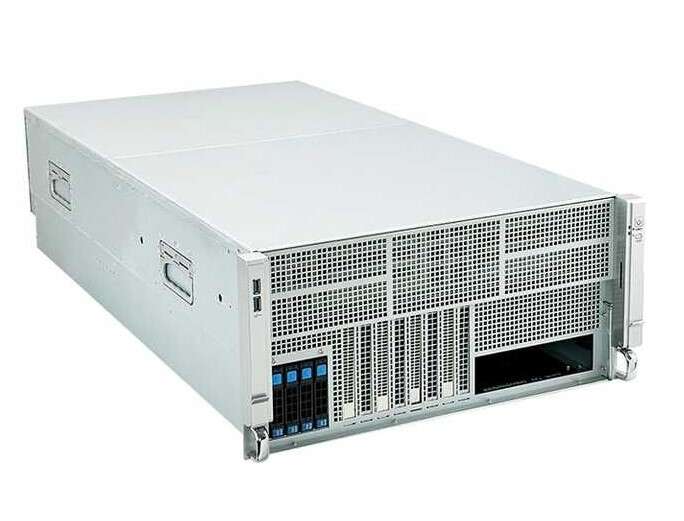

Multi-tenant training pod in a GPU server case

You’re carving a single chassis into two sandboxes. Route the primary four GPUs as x16 direct to CPU-A. The second pair goes through a Gen5 switch hanging from another x16. NIC gets the next x16; NVMe rides bifurcated x4s. On the backplane, drop retimers at the long cable legs. Use a proper GPU server case with straight-shot airflow and shrouds so fans don’t compete.
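One number worth computing for the switched leg is the oversubscription ratio: downstream lanes divided by uplink lanes. This is a minimal sketch, assuming the two switched GPUs each get x16 behind the single x16 uplink described above. The function name is illustrative.

```python
def oversubscription(downstream_widths: list[int], uplink_width: int) -> float:
    """Ratio of total downstream lanes to uplink lanes (same PCIe generation assumed)."""
    return sum(downstream_widths) / uplink_width

# Two x16 GPUs behind one x16 uplink.
ratio = oversubscription([16, 16], 16)
print(f"{ratio:.1f}:1")
```

At 2:1, both GPUs can’t pull full host bandwidth at the same moment, so put the tenant that tolerates contention on the switched pair.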

Edge inference in wallmount + short cable runs

Edge boxes can’t spare a full rack. A Wallmount Case with 2–4 slots supports one accelerator at x16 and a NIC at x8/x16. Keep PCB traces short; add a single retimer near the NIC slot if you must route through a riser. Ensure front-to-rear ducting; small boxes overheat faster than you think, even at modest TDP.

Storage-heavy data node with a sprinkling of compute

When I/O rules the day, pick a Rackmount Case with lots of bays and a mid-plane that won’t fight your NVMe cabling. Give your GPU a clean x16, NIC a clean x16, and fan out SSDs via bifurcation plus short MCIO cables. If bay counts get extreme, plan for a Chassis Guide Rail so the box stays serviceable: pulling a heavy case out of the rack shouldn’t break cables and links. It happens, don’t ask.

How IStoneCase fits the puzzle (OEM/ODM that speaks “lane”)

You don’t buy a box; you buy a topology that keeps clocks clean and fans sane. IStoneCase builds GPU server cases, classic Server Case, Rackmount Case, NAS Case, ITX Case, and rails—then tunes layouts, slot spacing, and cable routes to your lane map. If you need brackets for retimers, custom shrouds, or a switch-ready backplane footprint, that’s normal Tuesday. For true one-off needs and bulk rollouts, tap Server Case OEM/ODM. We’ll co-design the fan-out, SI features, and the little things—fasteners, strain reliefs, door clearances—that keep fleets easy to live with.

SEO quick note

IStoneCase – The World’s Leading GPU/Server Case and Storage Chassis OEM/ODM Solution Manufacturer. We ship GPU server cases, Rackmount, Wallmount, and ITX chassis for data centers, algorithm centers, enterprises, SMBs, IT providers, devs, and research labs. Tailored, durable, performance-first.

Put it all together (simple playbook)

- Draw the lane map first (x16 priorities, then NIC/DPU, then NVMe).

- Pick the enclosure to match thermals and cable paths (don’t retrofit pain).

- Commit to retimers where runs/links get long; place them by connectors.

- Use a switch backplane when native lanes won’t cut it; don’t hope, plan.

- Validate SI with back-drill, low-loss materials, and clean routing.

- Leave a slot for the next-gen NIC; growth always arrives early.
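Once the box is powered, a quick way to check the playbook held up is to compare each device’s negotiated link against its maximum. The sketch below reads the Linux sysfs attributes `current_link_speed`, `current_link_width`, `max_link_speed`, and `max_link_width`. It assumes a Linux host; the helper names are made up for illustration. Some devices drop link speed at idle to save power, so treat a flag as a prompt to look closer, not a verdict.

```python
from pathlib import Path

def read_attr(dev: Path, name: str) -> str:
    """Read one sysfs attribute, returning '' if it is missing or unreadable."""
    try:
        return (dev / name).read_text().strip()
    except OSError:
        return ""

def speed_gts(text: str) -> float:
    """Parse strings like '32.0 GT/s PCIe' into 32.0; return 0.0 if unparseable."""
    try:
        return float(text.split()[0])
    except (IndexError, ValueError):
        return 0.0

def downtrained_links(root: str = "/sys/bus/pci/devices") -> list[str]:
    """List devices whose negotiated speed or width is below their maximum."""
    flagged = []
    for dev in sorted(Path(root).glob("*")):
        cur_w = read_attr(dev, "current_link_width")
        max_w = read_attr(dev, "max_link_width")
        cur_s = read_attr(dev, "current_link_speed")
        max_s = read_attr(dev, "max_link_speed")
        if not (cur_w.isdigit() and max_w.isdigit()):
            continue  # no PCIe link attributes for this device
        if int(cur_w) < int(max_w) or speed_gts(cur_s) < speed_gts(max_s):
            flagged.append(f"{dev.name}: x{cur_w} @ {cur_s} (max x{max_w} @ {max_s})")
    return flagged

if __name__ == "__main__":
    for line in downtrained_links():
        print(line)
```

Run it under load as well as at idle; a GPU that trains to x8 or Gen4 in both states is the one to reseat or re-route.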

Need a hand choosing the right GPU server case or a rack-friendly server rack pc case for a new lane map? Ping IStoneCase—OEM/ODM is kinda our daily bread, and we’ll get your fans, slots, and links aligned before you even power on.

Bonus: quick glossary (no fluff)

- Retimer: Re-clocks and re-times a PCIe link; the Gen5 sanity tool.

- Redriver: Analog amplifier/equalizer that boosts the signal without re-clocking it; of limited use at Gen5.

- Bifurcation: Splitting an x16 into smaller widths (x8/x8 or x4/x4/x4/x4).

- MCIO / SlimSAS: High-speed internal connector and cable families (SFF-TA-1016 and SFF-8654) used for PCIe/NVMe.

- SI budget: Your loss/jitter/return-loss allowance; protects eye diagrams.

- Fan-out switch: PCIe switch that fans one upstream link out to several downstream slots, which then share the upstream bandwidth.
