You’re planning a GPU box and you’re asking the classic question: should we treat the path like Gen4 or aim straight for Gen5? Short take: design for Gen5 from day one. Gen5 doubles the per-lane rate from 16 GT/s to 32 GT/s and slashes your signal margin. That hits risers, backplanes, cables, connectors, airflow, even how you bolt cards into the server rack pc case. Below I’ll break it down in plain talk, show a quick table, use the real terms (no fluff), and tie choices back to IStoneCase gear and services so you can actually ship.

PCIe Gen4 vs Gen5 signal integrity budget

Gen5 is picky about loss and reflections. Your end-to-end insertion loss budget tightens, so every via, long PCB run, and daisy-chained connector eats headroom. With Gen4 you might get away with a long riser PCB; with Gen5 you’ll often move to low-loss cable (MCIO style) or add a retimer. Keep the topology simple: root complex → minimal board trace → connector → cable → GPU. If you stretch that chain, you’ll see eye closure, random LTSSM retrains, and silent perf drops. Not fun.
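To make “every via eats headroom” concrete, here’s a minimal sketch of an end-to-end loss tally. The budgets used are the commonly cited PCI-SIG bump-to-bump figures: 28 dB at 8 GHz for Gen4 and 36 dB at 16 GHz for Gen5. Check them against the spec revision you design to. The Gen5 number is larger in dB, but it is measured at twice the frequency, where every inch of trace and every via loses much more. That is why the same physical path uses up far more of it. Every per-element dB value and the 3 dB `safety_db` reserve are hypothetical placeholders, not datasheet numbers.

```python
from dataclasses import dataclass

# Commonly cited PCI-SIG end-to-end (bump-to-bump) channel loss budgets in dB,
# each evaluated at that generation's Nyquist frequency. Verify against your spec revision.
BUDGET_DB = {"gen4": 28.0, "gen5": 36.0}
NYQUIST_GHZ = {"gen4": 8.0, "gen5": 16.0}


@dataclass
class Element:
    name: str
    loss_db: float  # insertion loss of this element at the generation's Nyquist frequency


def channel_margin(elements: list[Element], gen: str, safety_db: float = 3.0) -> float:
    """Remaining margin in dB after every element and a safety reserve (hypothetical 3 dB).

    A negative result means the channel as drawn does not close without help (cable, retimer).
    """
    total = sum(e.loss_db for e in elements)
    return BUDGET_DB[gen] - safety_db - total


if __name__ == "__main__":
    # Hypothetical Gen5 path: root complex -> short board trace -> connector -> cable -> GPU.
    path = [
        Element("CPU package + socket", 6.0),
        Element("motherboard trace", 8.0),
        Element("MCIO connector", 1.5),
        Element("MCIO cable", 6.0),
        Element("riser connector", 1.5),
        Element("GPU add-in card", 8.0),
    ]
    total = sum(e.loss_db for e in path)
    margin = channel_margin(path, "gen5")
    print(f"Gen5 @ {NYQUIST_GHZ['gen5']} GHz: total {total:.1f} dB, margin {margin:+.1f} dB")
```

Swap in losses from your own part numbers at the Nyquist frequency. A negative margin is the cue to shorten the path, move to cable, or add a retimer.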

Also mind skew. Differential pairs need tight intra-pair length matching. Keep AC coupling caps close. Avoid layer transitions near the receiver. Don’t route traces longer than they need to be; a long route looks okay in CAD, then bites in the lab.
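Skew is easier to reason about in picoseconds than in mils. At 32 GT/s with NRZ signaling, one unit interval (UI) is 1/32 ns, or 31.25 ps. That is half of Gen4’s 62.5 ps, so the same length mismatch eats twice the fraction of a bit. The sketch below converts P/N mismatch to time using a stripline delay of √Dk/c. The Dk of 4.0 and the “skew ≤ 5% of UI” rule are assumptions for illustration, not PCI-SIG limits.

```python
import math

C_MM_PER_PS = 0.299792458  # speed of light in vacuum, mm per picosecond


def unit_interval_ps(rate_gt_s: float) -> float:
    """NRZ unit interval in ps: one bit per UI, so UI = 1 / rate."""
    return 1000.0 / rate_gt_s


def skew_ps(mismatch_mm: float, dk_eff: float = 4.0) -> float:
    """Time skew of a P/N length mismatch, assuming stripline delay of sqrt(Dk_eff) / c."""
    return mismatch_mm * math.sqrt(dk_eff) / C_MM_PER_PS


def skew_ok(mismatch_mm: float, rate_gt_s: float,
            max_ui_fraction: float = 0.05, dk_eff: float = 4.0) -> bool:
    """Check skew against a fraction of the UI (max_ui_fraction is a hypothetical in-house rule)."""
    return skew_ps(mismatch_mm, dk_eff) <= max_ui_fraction * unit_interval_ps(rate_gt_s)


if __name__ == "__main__":
    for rate in (16.0, 32.0):
        ui = unit_interval_ps(rate)
        print(f"{rate:.0f} GT/s: UI = {ui:.2f} ps; 0.3 mm mismatch = "
              f"{skew_ps(0.3):.2f} ps; ok={skew_ok(0.3, rate)}")
```

Under these assumptions a 0.3 mm mismatch (about 2 ps) passes at 16 GT/s and fails at 32 GT/s. That is the whole story behind “tighter” matching for Gen5.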

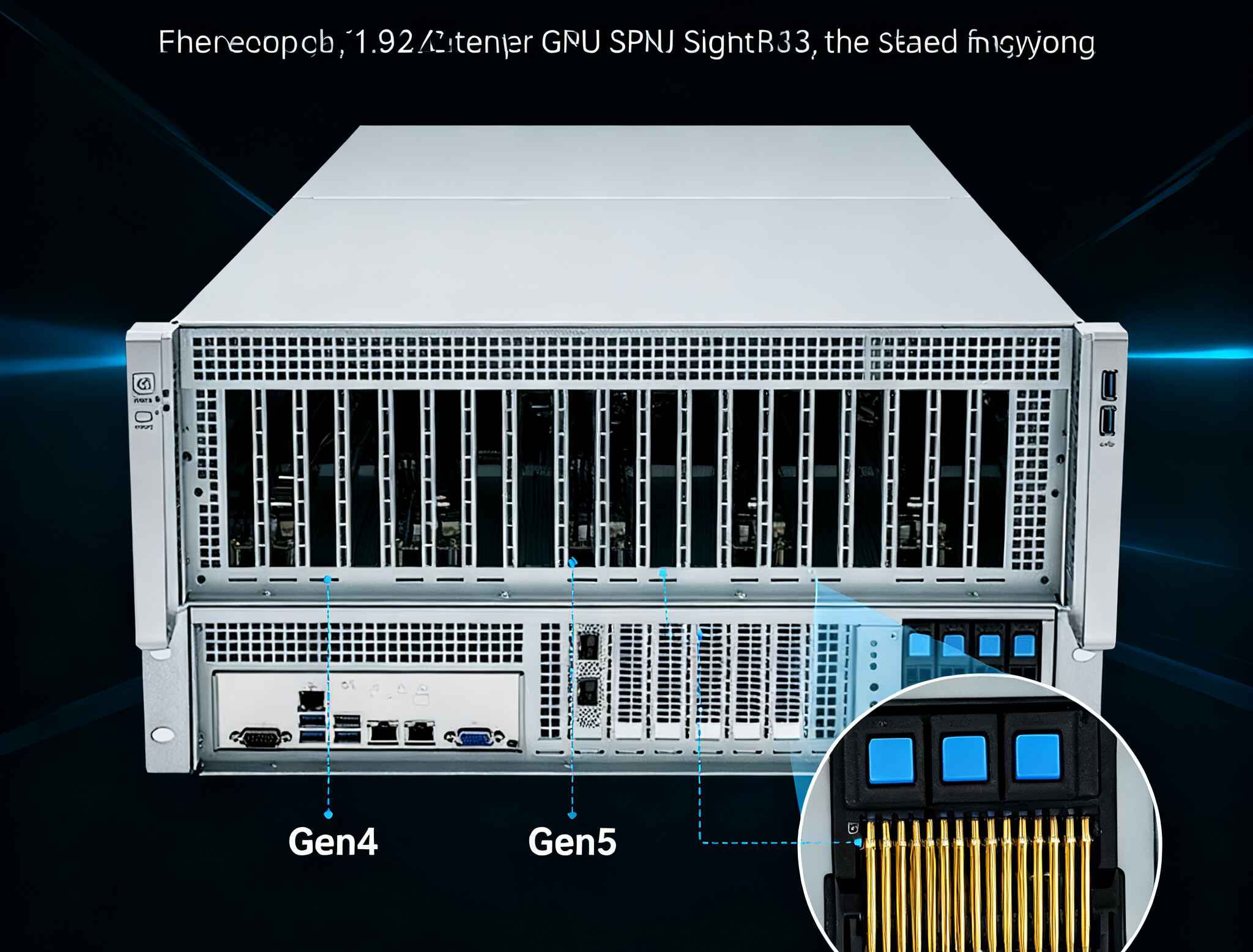

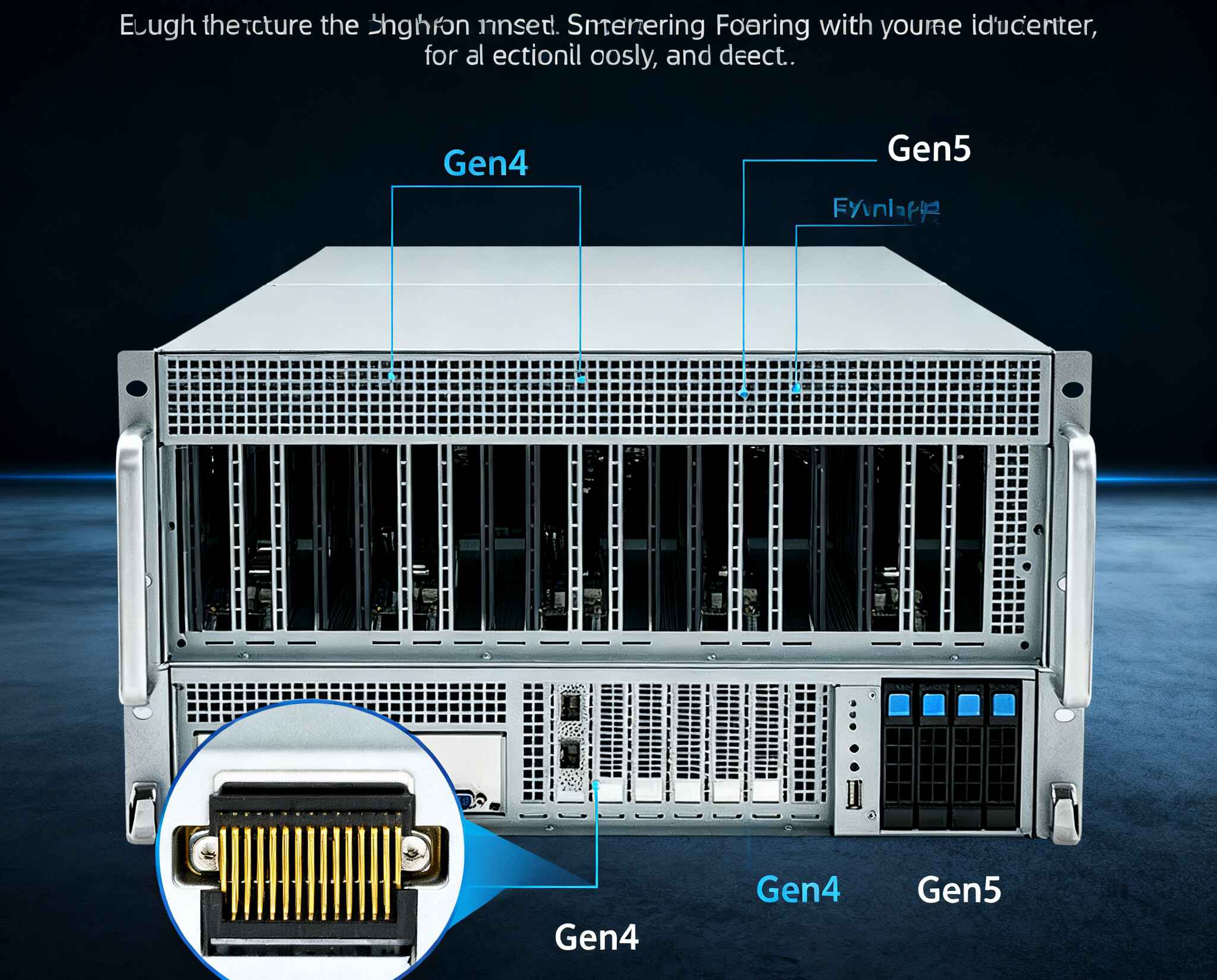

PCIe riser (Gen4 vs Gen5) selection

Riser choice flips between two families:

- PCB riser (short, few connectors): okay for Gen4 and very short Gen5 hops.

- Cable riser (MCIO/SlimSAS-like, high-performance cable): the Gen5 default for distance, especially inside a dense server pc case.

When do you add active parts?

- Redriver: light EQ, low latency. Works only when the channel is already good.

- Retimer: full re-clock/re-shape. Handles longer, messier paths typical in multi-GPU rigs. Adds a little latency, but it saves links that would otherwise downshift or flap.

Mechanically, choose risers that don’t fight airflow or block front-to-back paths. In 4U and up, a flat cable riser can route cleanly along shrouds and keep the fans happy.

PCIe backplane design for GPU chassis

A backplane can be a gift or a trap. Gen5 punishes long FR-4, via farms, and stacked connectors. Two moves help:

- Cable-ized backplane: use MCIO cables instead of long board traces. You reduce loss and crosstalk.

- Mid-span retimer: place the retimer around the electrical midpoint of the channel. That splits the loss budget and simplifies equalization.
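“Electrical midpoint” means splitting the loss, not the length. A retimer fully recovers the signal, so each side only has to close on its own budget. The best spot is the one that minimizes the worse of the two halves. This is a minimal sketch: it assumes losses add in dB and ignores the retimer’s own package loss. The element names and values are hypothetical.

```python
from itertools import accumulate


def best_retimer_cut(losses_db: list[float]) -> tuple[int, float, float]:
    """Return (cut, left_db, right_db); the retimer sits right before element index `cut`.

    Picks the cut that minimizes max(left, right), i.e. the electrical midpoint.
    Needs at least two elements so both sides are non-empty.
    """
    if len(losses_db) < 2:
        raise ValueError("need at least two elements to split")
    total = sum(losses_db)
    prefix = list(accumulate(losses_db))  # prefix[i] = loss of elements 0..i
    best = None
    for cut in range(1, len(losses_db)):
        left = prefix[cut - 1]
        right = total - left
        worst = max(left, right)
        if best is None or worst < best[0]:
            best = (worst, cut, left, right)
    _, cut, left, right = best
    return cut, left, right


if __name__ == "__main__":
    names = ["CPU pkg", "board trace", "backplane", "connector", "cable", "GPU card"]
    losses = [6.0, 9.0, 10.0, 1.5, 7.0, 8.0]  # hypothetical dB at 16 GHz
    cut, left, right = best_retimer_cut(losses)
    print(f"retimer after '{names[cut - 1]}': left {left:.1f} dB, right {right:.1f} dB")
```

In this made-up chain the 41.5 dB total would blow a 36 dB Gen5 budget. A retimer after the backplane leaves 25.0 dB and 16.5 dB segments, and both close.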

Plan power and sideband early. If you’re doing bifurcation (x16 into 2×x8 or 4×x4), verify that the riser/backplane pinout and cable mappings keep lane order clean. If lanes cross, you’ll fight skew and training weirdness.
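A cheap way to catch crossed lanes before the lab is to write the harness down as data, mapping each root-port lane to a (slot, card lane) pair, and then check each bifurcated link. This sketch accepts straight or fully reversed order inside a link, since PCIe allows optional lane reversal, and flags anything else. Two things are assumptions to confirm in the datasheets: whether your root port and GPU actually support reversal, and the width-aligned lane-block rule the check enforces. The mapping format is hypothetical.

```python
VALID_WIDTHS = {1, 2, 4, 8, 16}


def check_bifurcation(mapping: dict[int, tuple[str, int]]) -> list[str]:
    """Check a harness map of root-port lane -> (slot, card lane).

    Returns a list of problems; empty means every link is straight or fully reversed
    and occupies a contiguous, width-aligned block of root lanes (an assumed rule).
    """
    problems = []
    slots: dict[str, list[tuple[int, int]]] = {}
    for root_lane, (slot, card_lane) in sorted(mapping.items()):
        slots.setdefault(slot, []).append((root_lane, card_lane))
    for slot, pairs in slots.items():
        width = len(pairs)
        roots = [r for r, _ in pairs]
        cards = [c for _, c in pairs]
        if width not in VALID_WIDTHS:
            problems.append(f"{slot}: x{width} is not a valid link width")
            continue
        if roots != list(range(roots[0], roots[0] + width)) or roots[0] % width:
            problems.append(f"{slot}: root lanes {roots} are not a contiguous, aligned block")
        if cards != list(range(width)) and cards != list(range(width - 1, -1, -1)):
            problems.append(f"{slot}: card lane order {cards} is neither straight nor reversed")
    return problems


if __name__ == "__main__":
    # Hypothetical x16 -> 2x x8 harness; slot B is wired reversed, which this check allows.
    harness = {r: ("A", r) for r in range(8)}
    harness.update({r: ("B", 15 - r) for r in range(8, 16)})
    print(check_bifurcation(harness) or "lane map OK")
```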

Retimer vs Redriver for PCIe Gen5

Let’s be straight: Gen5 leans retimer. If your path is short, simple, and you’ve got premium materials, a redriver can be enough. But in real GPU chassis—multiple connectors, long distance, tight bends—retimers pull you across the finish line. Budget the latency (small, but it’s there). Fewer retimers is better; place them where they do the most work (usually center-ish). And cool them—tiny package, non-tiny heat.
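“Fewer retimers” can be made mechanical. If losses add in dB, walk the path and start a new retimed segment only when the next element would push the current one over budget. That gives the minimum retimer count, by the standard greedy argument for splitting a chain into bounded segments. It pushes each retimer as far downstream as possible, so once you know the count, slide it back toward the electrical midpoint. The budget, reserve, and element values are hypothetical, and retimers are treated as lossless joints between elements.

```python
def place_retimers(losses_db: list[float], budget_db: float) -> list[int]:
    """Greedy minimum-count retimer placement.

    Returns indices i meaning "put a retimer right before element i".
    Raises ValueError if one element alone exceeds the budget (no placement fixes that).
    """
    cuts = []
    segment = 0.0
    for i, loss in enumerate(losses_db):
        if loss > budget_db:
            raise ValueError(f"element {i} ({loss} dB) exceeds the per-segment budget")
        if segment + loss > budget_db:
            cuts.append(i)  # start a new retimed segment at this element
            segment = 0.0
        segment += loss
    return cuts


if __name__ == "__main__":
    # Hypothetical long multi-GPU path, dB at 16 GHz; 36 dB budget minus a 3 dB reserve.
    path = [6.0, 9.0, 10.0, 1.5, 7.0, 1.5, 6.0, 8.0]
    cuts = place_retimers(path, budget_db=33.0)
    print(f"{len(cuts)} retimer(s), before element(s) {cuts}")
```

Here the 49 dB path needs exactly one retimer, which greedy puts before element 4.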

Validation: lane margining, eye diagram, compliance

Don’t stop at “it links up.” Run lane margining to see how much margin you actually have at 32 GT/s. Capture eye diagrams on representative worst-case lanes. Swap cards between slots to catch tolerance stacking. Include firmware that can force a Gen4 fallback; it’s a useful A/B for bring-up. Also, watch error counters under load: some channels pass idle tests but puke under full TDP and heat. This matters; it’s not optional.
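For the “watch error counters under load” step, Linux kernels with AER enabled expose per-device counters in sysfs files such as `aer_dev_correctable`. Each line holds a `Name count` pair, for example `RxErr`, `BadTLP`, `BadDLLP`, or `TOTAL_ERR_COR`. This sketch snapshots the counters before and after a burn-in run and reports what moved. Confirm the files exist on your kernel and device; the PCI address in the docstring is a placeholder.

```python
from pathlib import Path


def parse_aer(text: str) -> dict[str, int]:
    """Parse 'Name count' lines as printed by the Linux aer_dev_* sysfs files."""
    counters = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].isdigit():
            counters[parts[0]] = int(parts[1])
    return counters


def read_aer(bdf: str, kind: str = "correctable") -> dict[str, int]:
    """Snapshot one AER counter file, e.g. bdf='0000:41:00.0' (placeholder address)."""
    path = Path("/sys/bus/pci/devices") / bdf / f"aer_dev_{kind}"
    return parse_aer(path.read_text())


def counter_delta(before: dict[str, int], after: dict[str, int]) -> dict[str, int]:
    """Return only the counters that increased between two snapshots."""
    return {k: after[k] - before.get(k, 0) for k in after if after[k] > before.get(k, 0)}


if __name__ == "__main__":
    # Sample text in the sysfs format; on hardware, call read_aer() before and after burn-in.
    idle = "RxErr 0\nBadTLP 0\nBadDLLP 0\nTOTAL_ERR_COR 0\n"
    loaded = "RxErr 12\nBadTLP 0\nBadDLLP 3\nTOTAL_ERR_COR 15\n"
    print(counter_delta(parse_aer(idle), parse_aer(loaded)))
```

Any nonzero delta under full TDP is a lane worth margining again, even if the link never dropped.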

Thermal, airflow, and mechanics for multi-GPU rigs

Signal integrity dies if thermals aren’t right. Hot PCBs shift their electrical characteristics (loss tends to rise with temperature) and eat into margin. Give Gen5 channels cool, straight airflow. For FHFL accelerators, make sure shrouds guide air through the heat sinks, not around them. Use smooth cable paths so you don’t choke fan intakes. If you’re on rails, plan service loops: you want to slide the computer case server out of the rack without yanking an MCIO harness.
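For a first pass at the fan wall, the heat-balance relation Q = ρ · c_p · V̇ · ΔT tells you how much air it takes to carry a given load at a given inlet-to-exhaust rise. Near sea level this works out to roughly CFM ≈ 1.76 × W / ΔT in °C. The card count, wattages, and allowed rise below are hypothetical. The sketch also ignores the static pressure of shrouds and heat sinks, which is what actually decides whether your fans deliver that flow.

```python
AIR_DENSITY = 1.2      # kg/m^3, dry air near sea level around 20 °C (assumption)
AIR_CP = 1005.0        # J/(kg·K), specific heat of air
M3S_TO_CFM = 2118.88   # 1 m^3/s expressed in cubic feet per minute


def required_cfm(heat_w: float, delta_t_c: float) -> float:
    """Airflow needed to remove heat_w watts with a delta_t_c rise from inlet to exhaust."""
    m3_per_s = heat_w / (AIR_DENSITY * AIR_CP * delta_t_c)
    return m3_per_s * M3S_TO_CFM


if __name__ == "__main__":
    # Hypothetical 8-GPU node: 8 x 350 W cards plus 800 W for CPUs, retimers, drives, fans.
    heat = 8 * 350 + 800
    for rise in (10.0, 15.0):
        print(f"{heat} W at ΔT {rise:.0f} °C -> about {required_cfm(heat, rise):.0f} CFM")
```

Halving the allowed temperature rise doubles the airflow you need, which is why “cool boards” and “bigger fan walls” travel together.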

Rack size matters:

- 4U: great density for 4–8 GPUs, careful cabling, tight airflow control.

- 5U / 6U: more room for cable-ized backplanes, retimer boards, and larger fan walls.

Comparison: Gen4 vs Gen5 riser/backplane (quick table)

| Topic | Gen4 (16 GT/s) | Gen5 (32 GT/s) | Practical note |
|---|---|---|---|
| Insertion-loss budget | More forgiving | Much tighter end-to-end | Count every connector/via; leave safety margin |
| Channel length | Moderate PCB OK | Long PCB risky | Prefer high-performance cable over long FR-4 |
| Equalization | Often redriver-only | Retimer recommended | Place near mid-span; limit quantity |
| Skew & matching | Tight | Tighter | Shorter pairs, fewer layer jumps |
| Topology | Flexible | Keep simple | Root → connector → cable → GPU, done |
| Validation | Link-up tests | Lane margining needed | Burn-in under full load, hot box if you can |
| Thermals | Important | Critical | Air shrouds, cable routing, fan wall pressure |

IStoneCase GPU Server Case options (with internal links)

You can pick a chassis that already leaves headroom for Gen5 routing and cooling. A few starting points:

- GPU Server Case: landing page for the full family and OEM/ODM options.

- GPU Server Case (category): explore layouts that favor cable-ized risers and straight airflow.

- 4U GPU Server Case: dense builds with careful fan walls; ideal for short cable runs.

- 5U GPU Server Case: extra vertical room for retimer boards and service loops.

- 6U GPU Server Case: best for extreme TDP and cleaner MCIO harnessing.

- ISC GPU Server Case WS04A2: 4U model suited to Gen5 cable risers and front-to-back cooling.

- ISC GPU Server Case WS06A: larger footprint for multi-retimer layouts.

- Customization Server Chassis Service: OEM/ODM if you need custom backplane pinouts, MCIO harness kits, or special fan trays.

We support buyers across data centers, AI/algorithm hubs, enterprises big and small, MSPs, labs, and builders who just want stuff that works. If you need an atx server case variant tuned for accelerators, tell us your GPU mix and airflow targets—we’ll map the harness and make it fit.

Real-world scenarios (what to build and why)

- HPC cluster node (4–8 GPUs): Choose 4U/5U, cable risers with a single mid-span retimer, straight-through airflow, rail kit for fast swap. This fits a server rack pc case in 800 mm racks.

- Edge AI inference: Short channels, minimal connectors, maybe no retimer. Lower fan noise, but don’t starve the cards.

- Research box on a budget: Start Gen4 today but route and cool for Gen5. Use a cable riser now; add retimer only if you see margin issues later.

- OEM platform: Use the Customization Server Chassis Service for pre-built MCIO looms, labeled lanes, and compliance test docs, so your ops team doesn’t hunt for Lane 11 at 2 a.m.

Buying notes for server pc case / computer case server / atx server case

- Check GPU length (FHFL), slot pitch, and power connectors.

- Confirm riser/backplane supports x16 per card at Gen5, or your intended bifurcation.

- Demand airflow maps and fan curves; Gen5 stability loves cool boards.

- Ask for lane margining screenshots, not just “yes it links.”

- If you service often, request harness strain-relief and extra cable length for slide-out rails.
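When you weigh x16 per card against bifurcation, raw link bandwidth is plain arithmetic: transfer rate × lanes × encoding efficiency ÷ 8. Gen4 and Gen5 both use 128b/130b encoding. These are per-direction numbers before packet and protocol overhead, so delivered throughput lands lower.

```python
ENCODING = 128 / 130  # 128b/130b line code used by PCIe Gen3, Gen4 and Gen5
RATE_GT_S = {"gen4": 16.0, "gen5": 32.0}


def link_gb_s(gen: str, lanes: int) -> float:
    """Raw per-direction bandwidth in GB/s, before TLP/DLLP protocol overhead."""
    return RATE_GT_S[gen] * lanes * ENCODING / 8  # 8 bits per byte


if __name__ == "__main__":
    for gen in ("gen4", "gen5"):
        row = ", ".join(f"x{n}: {link_gb_s(gen, n):.1f}" for n in (4, 8, 16))
        print(f"{gen} GB/s per direction -> {row}")
```

One handy result: a Gen5 x8 link carries the same raw bandwidth as a Gen4 x16, about 31.5 GB/s each way.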

Hands-on checklist (copy this into your build doc)

- Draw the full path (CPU to GPU) including every connector.

- Budget loss with real part numbers; leave slack.

- Prefer cable-ized risers; keep PCB traces short near the root complex and GPU.

- Add retimer only where needed; place near mid-span; cool it.

- Lock differential pair rules early (skew, spacing, via counts).

- Validate with lane margining and eye checks under burn-in.

- Route cables so they don’t block fans. Zip-tie anchors help.

- Keep a Gen4 fallback switch in firmware for bring-up.

- Document lane mapping; label harness ends.

- Before ship: shake-test, heat-soak, and re-run margining. Yes, again.
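For the “re-run it before ship” pass, Linux reports each PCI device’s negotiated and maximum link in sysfs (`current_link_speed`, `max_link_speed`, `current_link_width`, `max_link_width`). A silent downshift from x16 Gen5 is exactly the kind of perf drop that still passes a “yes it links” check. Run the check while the GPUs are busy, because many GPUs drop link speed at idle to save power. This is a minimal sketch; the PCI address in the docstring is a placeholder.

```python
from pathlib import Path


def parse_speed(text: str) -> float:
    """'32.0 GT/s PCIe' or '16 GT/s' -> 32.0 / 16.0."""
    return float(text.split()[0])


def link_findings(cur_speed: str, max_speed: str, cur_width: str, max_width: str) -> list[str]:
    """Compare the negotiated link to its capability; empty list means full speed and width."""
    findings = []
    if parse_speed(cur_speed) < parse_speed(max_speed):
        findings.append(f"speed downshift: {cur_speed.strip()} of {max_speed.strip()}")
    if int(cur_width) < int(max_width):
        findings.append(f"width downshift: x{int(cur_width)} of x{int(max_width)}")
    return findings


def check_device(bdf: str) -> list[str]:
    """Read the sysfs link attributes for one device, e.g. '0000:41:00.0' (placeholder)."""
    dev = Path("/sys/bus/pci/devices") / bdf

    def read(name: str) -> str:
        return (dev / name).read_text()

    return link_findings(read("current_link_speed"), read("max_link_speed"),
                         read("current_link_width"), read("max_link_width"))


if __name__ == "__main__":
    # Sample values in sysfs format; on hardware, call check_device() under load.
    print(link_findings("16.0 GT/s PCIe\n", "32.0 GT/s PCIe\n", "16\n", "16\n"))
```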

Why IStoneCase fits this job

We build GPU and server pc case platforms that assume Gen5 pain from the start: cable-friendly internals, straight airflow paths, and room for retimers without hacky brackets. As an OEM/ODM, we tweak backplanes, MCIO lengths, fan trays, and rails to your rack depth and service style. You don’t need magic words—just tell us your card list and rack plan, we’ll handle the messy bits.

Want a quick spec and a bill of materials mapped to your GPUs and slots? Ping the links above, pick a 4U/5U/6U starting point, and we’ll draft the harness and placement. It’s not rocket science, but yeah, Gen5 can be tricky—so let’s make it boring-reliable.