You’ve got hungry GPUs. Let’s feed them without drama—and without guesswork.

N+1 redundancy and A/B power paths for multi-GPU servers

Redundancy starts with N+1 thinking. One extra supply, or one independent feed, takes the hit when a module fails or a breaker trips. With A/B feeds, each path must be able to carry the full peak load on its own so the box keeps training when one side dies. That’s the difference between resilient and oops, cluster down. In practice, size cabling, PDUs, and breakers so one side can hold the fort alone.
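A quick way to sanity-check the "one side can hold the fort alone" rule is to treat each feed as only as strong as its weakest part. The sketch below is illustrative, not a sizing tool: the function names and every wattage are hypothetical, and real ratings come from your cable, PDU, and breaker datasheets.

```python
def weakest_link_watts(*component_ratings_watts):
    """A feed is only as strong as its lowest-rated part (cable, PDU, breaker)."""
    return min(component_ratings_watts)


def feed_survives_single_failure(peak_watts, feed_a_watts, feed_b_watts):
    """True only if either feed alone can carry the full peak load."""
    return min(feed_a_watts, feed_b_watts) >= peak_watts


# Hypothetical node with a 2,800 W peak; all ratings are made-up examples.
peak = 2800
feed_a = weakest_link_watts(3600, 3300, 3800)  # cable, PDU outlet, breaker
feed_b = weakest_link_watts(3600, 1900, 3800)  # B side has an undersized PDU

print("A alone holds peak:", feed_a >= peak)
print("Survives loss of either side:", feed_survives_single_failure(peak, feed_a, feed_b))
```

In this made-up case the A path is fine, but the undersized PDU on B means the node would drop if A failed, which is exactly the situation the burn-in test is meant to catch.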

ATX 3 kW power supply for workstation: pros and limits

A single ATX ~3 kW unit looks clean for a desk-side rig. Four 12V-2×6 leads at 600 W per lead can satisfy up to four boards, provided the platform and the supply's over-current protection (OCP) policy allow it. It’s simple, cheaper to integrate, and it lives inside an atx server case or a roomy computer case server.

But it’s not redundancy. If that one unit coughs, your job stops. Also, many 3 kW ATX models require 220–240 V input and a C19 cord. On 120 V circuits, you’ll hit a wall fast. The fan gets loud under load too: OK for a lab, not always for an office.

Quick fit: pair a 3 kW ATX with a deep GPU Server Case that supports long cards, straight airflow, and clean 12V-2×6 routing.

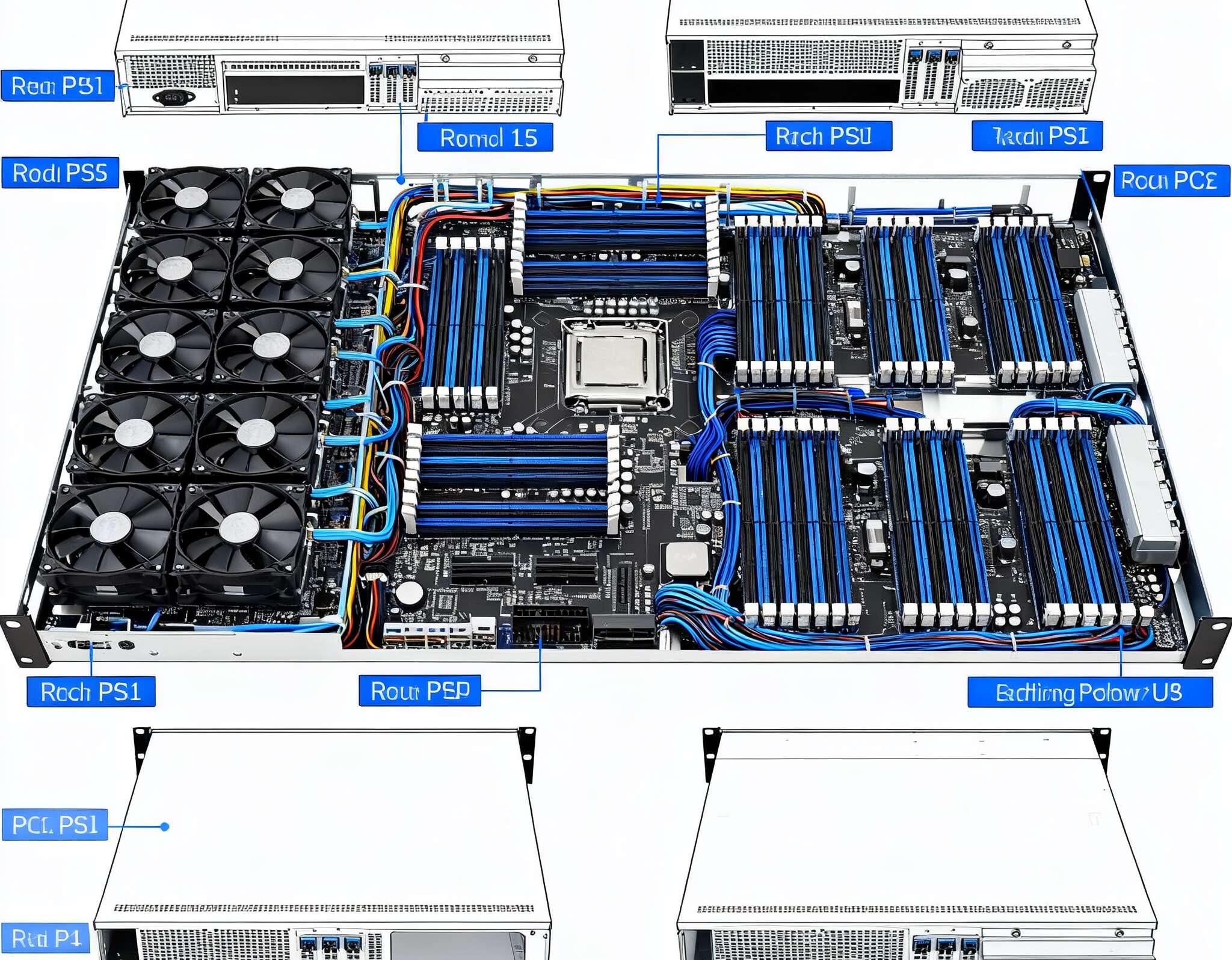

CRPS/2U redundant PSU modules for rack servers

If you want hot-swap and real failover, go CRPS/2U redundant. Two or more 2–3 kW modules slide into a backplane, share the load, and one unit can die without drama. You get PMBus telemetry, fault LEDs, and N+1 or N+N layouts. Efficiency? Often Titanium-class, which saves heat budget and fan noise upstream.

Box it right: a server rack pc case like 4U GPU Server Case or 5U GPU Server Case leaves room for CRPS canisters, mid-plane fans, and straight-shot cable trays.

OCP ORv3 48 V rack power (3 kW rectifiers, 15–18 kW shelves)

Going bigger? OCP ORv3 converts AC to a 48 V DC bus at the rack. Each rectifier is around 3 kW; a shelf holds 5–6 modules (15–18 kW installed), and in an N+1 layout one of those modules is the spare. You then down-convert in the node. That’s not a desktop trick; it’s rack-level architecture for clusters. The upside is high uptime, fast swaps, and less copper loss.
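To see what N+1 does to a shelf's usable budget, here is a minimal sketch. It assumes 3 kW rectifiers as described above; the function names and the 2,800 W node peak are hypothetical, and it ignores conversion losses in the node.

```python
def shelf_usable_watts(modules, module_watts=3000, spares=1):
    """Capacity that remains after `spares` rectifiers fail (N+1 when spares=1)."""
    if modules <= spares:
        raise ValueError("need more modules than spares")
    return (modules - spares) * module_watts


def nodes_per_shelf(modules, node_peak_watts, module_watts=3000, spares=1):
    """Whole nodes a shelf can feed while still tolerating `spares` failures."""
    return shelf_usable_watts(modules, module_watts, spares) // node_peak_watts


# Six 3 kW rectifiers: 18 kW installed, 15 kW usable with one spare.
print(shelf_usable_watts(6))
# Hypothetical 2,800 W nodes on that shelf.
print(nodes_per_shelf(6, 2800))
```

Budgeting against the usable figure rather than the installed one is what lets a rectifier die without any node browning out.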

If you’re building a pod, match ORv3 shelves with 6U GPU Server Case nodes for high-density cooling.

Two-PSU split load is not redundancy

People sometimes run “two ATX supplies” with a sync board. It looks fine on paper. It’s still not redundancy. If PSU-A powers half the GPUs and fails, those boards drop. Worse, don’t cross-feed a single GPU from two PSUs; ground offsets and protection trips can brick your night. Keep one GPU = one PSU rail set. If you must split, do it per device, never per connector.
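If you do end up splitting, the "per device, never per connector" rule can be written down as a simple placement check. This is a sketch under stated assumptions: the GPU names, wattages, and PSU budgets are hypothetical, and each budget means the power left for GPUs after the motherboard, CPUs, and drives are accounted for.

```python
def assign_gpus_to_psus(gpu_watts, psu_budgets_watts):
    """Place each GPU wholly on one PSU; never split a card across supplies."""
    remaining = dict(psu_budgets_watts)
    assignment = {}
    # Largest cards first, so they are placed before headroom fragments.
    for gpu, watts in sorted(gpu_watts.items(), key=lambda kv: kv[1], reverse=True):
        psu = max(remaining, key=remaining.get)  # PSU with the most headroom left
        if remaining[psu] < watts:
            raise ValueError(f"{gpu} ({watts} W) does not fit on any single PSU")
        remaining[psu] -= watts
        assignment[gpu] = psu
    return assignment


# Hypothetical rig: four 450 W cards, two supplies with 1,000 W of GPU budget each.
gpus = {"gpu0": 450, "gpu1": 450, "gpu2": 450, "gpu3": 450}
psus = {"psu_a": 1000, "psu_b": 1000}
print(assign_gpus_to_psus(gpus, psus))
```

The function refuses a layout rather than spreading one card over two supplies. It still is not redundancy: whichever PSU fails takes its assigned GPUs down with it.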

Power input reality check: 120 V vs 240 V, C19, branch circuits

A 3 kW continuous draw on a 120 V / 20 A circuit doesn’t work: the circuit tops out at 2,400 W, and the usual 80% continuous-load derating brings that down to about 1,920 W. For near-3 kW rigs, plan 220–240 V, C19 outlets, and PDUs rated for the job. In a rack, distribute across A/B PDUs so a tripped breaker on A doesn’t ruin your Tuesday. Sounds boring, saves weekends.
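The branch-circuit arithmetic is easy to check before you order anything. The sketch below assumes the common 80% continuous-load factor; that factor, the function name, and the example circuits are assumptions, so confirm the actual rule with your local electrical code and electrician.

```python
def continuous_watts(volts, breaker_amps, continuous_percent=80):
    """Watts a branch circuit can carry continuously after derating."""
    return volts * breaker_amps * continuous_percent / 100


target = 3000  # near-3 kW rig

for volts, amps in [(120, 20), (240, 20), (240, 30)]:
    budget = continuous_watts(volts, amps)
    verdict = "OK" if budget >= target else "too small"
    print(f"{volts} V / {amps} A: {budget:.0f} W continuous, {verdict}")
```

On these assumptions, the 120 V / 20 A circuit falls well short of 3 kW, while a 240 V / 20 A circuit clears it with room to spare.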

Connector math: 12V-2×6 and 600 W per lead

Use the new 12V-2×6 (PCIe 5.x) for high-draw boards. Budget 600 W per cable. Don’t Y-split those to the moon; keep thermal headroom at the connector and at the card. Route short, avoid tight bends, and keep exhaust paths clear so cable jackets don’t cook.
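The connector budget can be checked the same way. This sketch assumes one 12V-2×6 lead per card and uses the 600 W per-lead figure from above; the function name and the card wattages are hypothetical, so use your boards' rated cable draw.

```python
LEAD_LIMIT_WATTS = 600  # per-lead budget used in this article


def check_leads(card_watts, psu_leads, lead_limit=LEAD_LIMIT_WATTS):
    """Return a list of problems; an empty list means the plan fits."""
    problems = []
    if len(card_watts) > psu_leads:
        problems.append(
            f"{len(card_watts)} cards but only {psu_leads} leads; do not Y-split"
        )
    for index, watts in enumerate(card_watts):
        if watts > lead_limit:
            problems.append(f"card {index}: {watts} W exceeds {lead_limit} W per lead")
    return problems


# Hypothetical four-card workstation on a PSU with four 12V-2×6 leads.
print(check_leads([450, 575, 600, 350], psu_leads=4))
# A card over budget gets flagged.
print(check_leads([450, 650], psu_leads=4))
```

Passing this check only covers the wattage math. Bend radius, latch seating, and exhaust routing still need eyes on the hardware.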

2–3 kW options at a glance

| Option | Power range | Redundancy | Input | Hot-swap | Typical chassis | When to choose | Source note |
|---|---|---|---|---|---|---|---|
| Single ATX ~3 kW | 2.5–3.0 kW | No | 220–240 V (C19) | No | atx server case, desk-side tower | Fewer failures, cost-sensitive labs, fast build | Vendor datasheets |
| Dual CRPS (2×2–3 kW) | 2–6 kW | N+1 | 200–240 V | Yes | 4U/5U rackmount | Uptime first, field-replaceable | CRPS design guides |
| ORv3 48 V shelf | 15–18 kW shelf | N+1 | AC→48 V | Yes | Rack bus + 4U/6U nodes | Cluster scale, rack power unification | OCP ORv3 specs |
| Two ATX split load | 1.5–3.0 kW | No | 120/240 V | No | Mixed | Temporary expansion only | Community best practices |

Source notes name the kind of reference for orientation only; no external links are provided.

Real-world builds with IStoneCase hardware

Workstation, 2–3 GPUs, minimal fuss

Pair a 3 kW ATX unit with the ISC GPU Server Case WS04A2. You get front-to-back airflow, full-length card space, and a tidy cable path to four 12V-2×6 drops. It’s a straight-shot choice for model fine-tunes, CV pipelines, or rendering. It just works, as long as you’ve got 240 V handy.

4–8 GPUs rack node, uptime matters

Go CRPS inside the ISC GPU Server Case WS06A or the broader GPU Server Case family. Two or three CRPS modules share the load. Lose one, keep training. PMBus gives you live power draw, inlet temp, and fan alarms. Your SRE says thanks.

Pod-ready racks, clean power domain

Adopt ORv3 shelves in the rack and feed 48 V to nodes built on 4U GPU Server Case or 6U GPU Server Case. Now your rectifiers are hot-swappable at chest height. Inventory is easier. Cabling shrinks. And you can scale rows without re-wiring each node. For buyers planning multi-rack footprints, that’s less oops and more throughput.

Need oddball bays, custom standoffs, or special baffles for finicky accelerators? Tap Customization Server Chassis Service. We tweak rails, fan walls, and PSU cages so your BOM matches your workload, not the other way round.

Deployment playbooks (quick, no fluff)

Data center

Use CRPS or ORv3. Wire A/B PDUs; verify one side carries the whole node at peak for a burn-in window. Track power with PMBus, alert on inlet temp and fan delta. Stage spare modules on the same row. Swap failures in minutes, not hours.
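The alerting side of that playbook can start as a plain threshold check over whatever your PMBus tooling reports. This sketch does not talk to hardware. The `PsuReading` fields, the threshold values, and the reading of "fan delta" as the spread between the fastest and slowest module fan are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class PsuReading:
    name: str
    input_watts: float
    inlet_temp_c: float
    fan_rpm: int


def find_alerts(readings, max_inlet_c=35.0, max_fan_delta_rpm=3000):
    """Flag hot inlets and a large fan-speed spread across modules."""
    alerts = []
    for r in readings:
        if r.inlet_temp_c > max_inlet_c:
            alerts.append(f"{r.name}: inlet {r.inlet_temp_c} C above {max_inlet_c} C")
    if readings:
        speeds = [r.fan_rpm for r in readings]
        spread = max(speeds) - min(speeds)
        if spread > max_fan_delta_rpm:
            alerts.append(f"fan spread {spread} rpm above {max_fan_delta_rpm} rpm")
    return alerts


# Made-up readings for two modules in one node.
sample = [
    PsuReading("psu1", 1400.0, 27.0, 9000),
    PsuReading("psu2", 1380.0, 38.0, 13500),
]
for alert in find_alerts(sample):
    print(alert)
```

Tune the thresholds during burn-in: run the node at peak on one feed, record what normal looks like, and alert on departures from that.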

On-prem lab

If downtime is OK-ish, a single 3 kW ATX saves space and money. Still do the math: peak draw, cable count, and airflow. Validate the wall power first. A server pc case with high static-pressure fans beats a consumer tower here.

Startup office

Noise matters. So does the lease. Consider a short rack with CRPS nodes in a back room, not under a desk. Put the loud parts behind a door, run long fiber to the dev area. Everyone happy, GPUs busy.

Cabling rules you shouldn’t break

- One GPU, one PSU source. Don’t mix rails from two supplies into a single card.

- Keep each 12V-2×6 under 600 W per lead.

- Lock the connectors. If a latch feels off, replace the cable.

- Route away from exhaust. Hot air cooks plastic, plastic cooks contacts.

- Test failover: pull a CRPS, watch the node keep training. Better to learn now than Friday night.

Performance and noise notes

High-efficiency modules reduce heat, so the fan curve stays calmer. CRPS fans are still sharp under heavy load because of their small blowers and back-pressure. ATX 3 kW units use larger fans, sometimes smoother in tone, sometimes not. Measure at your ear, not just spec sheets. And remember: once you pack 6–8 GPUs, most of the noise is just the accelerator blowers doing work. It ain’t silent.

Where IStoneCase fits (and why buyers pick us)

IStoneCase builds rackmount and desk-side enclosures tuned for airflow, card clearance, and PSU routing. We support OEM/ODM batches and bulk purchase for data centers, algorithm hubs, MSPs, research labs, and dev shops. If you need a server pc case that handles thick coolers, or a computer case server that accepts CRPS canisters without hacks, we’ve done that. We also ship rails and trays that don’t twist after two quarters of thermal cycles. Small thing, big uptime.

Start with GPU Server Case, then map to GPU Server Case variants—4U, 5U, 6U—and slot in WS04A2 or WS06A where depth or PCIe population demands it. If your layout is messy, we’ll customize the baffle, cable combs, or even the PSU cage so your build is not fighting your case.