(You want clear rules, real-world use cases, and zero fluff. Let’s keep it simple, a bit chatty, and very practical.)

Direct-Attach (TQ/A) vs SAS Expander (EL1/EL2) Backplanes

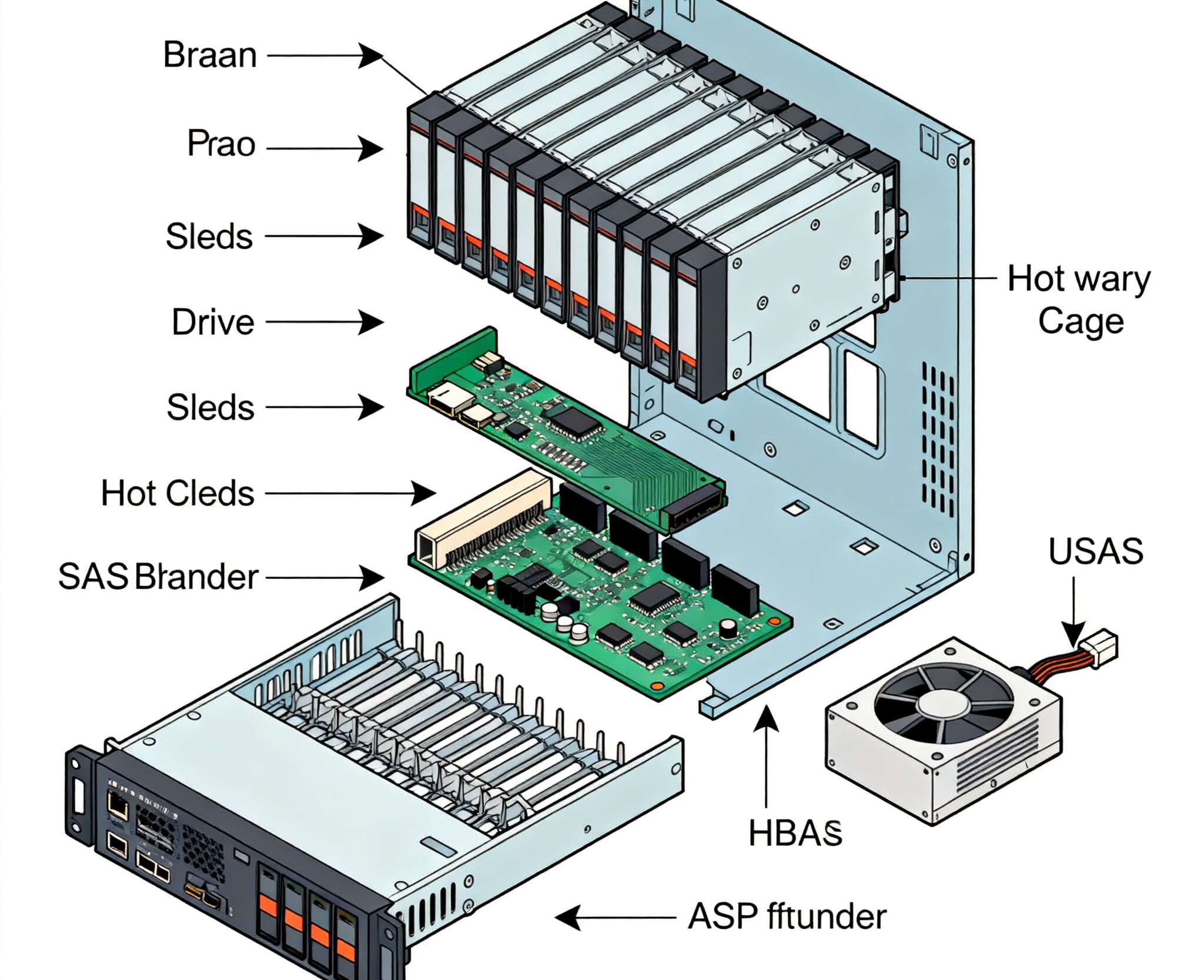

Start here. Direct-attach backplanes (often labeled TQ or A) run individual links from each drive—or from groups of four via mini-SAS—straight into your HBA or motherboard. Path is short. Latency stays low. Downside? Cable spaghetti. Airflow suffers. Maintenance gets fiddly.

SAS expander backplanes (EL1/EL2) act like a small switch: you feed them one or more ×4 uplinks, and they fan that bandwidth out across many bays. Cabling gets clean, airflow improves, and serviceability goes up. There’s a touch more latency, and you must plan uplink lanes so a pile of busy drives doesn’t dogpile one thin pipe. EL2 adds dual expanders for multipath/HA.

TL;DR

- Small builds or latency-sensitive? Direct-attach.

- Bigger arrays or cleaner service windows? Expander.

Quick comparison

| Backplane Type | Cabling & Airflow | Latency/Overhead | Bandwidth Planning | Best Fit |
|---|---|---|---|---|
| Direct-Attach (TQ/A) | Many cables; harder airflow | Lowest | Simple (1:1 or 1:4 groups) | Small/medium bays, lab boxes |
| SAS Expander (EL1) | Very clean; easy service | Low-moderate | Size uplinks vs bays | Mid/large arrays, homelab to SMB |
| SAS Expander (EL2) | Clean + redundancy | Low-moderate | Dual paths, failover | HA needs, dual-HBA, enterprise-ish |

SAS-2 6Gb/s vs SAS-3 12Gb/s: Throughput and Headroom

Numbers matter. SAS-2 lanes run 6Gb/s. SAS-3 doubles to 12Gb/s per lane. A typical mini-SAS ×4 uplink aggregates four lanes. With spinners, you rarely saturate a modern SAS-3 uplink. With SSDs or mixed random workloads, lane math suddenly matters. If you’re buying fresh gear in 2025, SAS-3 gives you more headroom and better interop with newer HBAs and expanders.

Rule of thumb: if the chassis supports SAS-3, grab it. It’s not “overkill”; it’s future headroom.
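Want the lane math in one place? Here’s a rough sketch, assuming SAS-2 and SAS-3 both use 8b/10b line encoding (8 data bits per 10 line bits) and ignoring frame/protocol overhead, so real numbers land a bit lower. The per-drive speeds in the example (about 250 MB/s for a big HDD, about 550 MB/s for a SATA SSD) are hypothetical ballpark figures, not measurements.

```python
# Rough SAS lane math: a sketch, not a vendor spec.
# Assumption: SAS-2 (6 Gb/s) and SAS-3 (12 Gb/s) both use 8b/10b encoding.
# Protocol/frame overhead is ignored, so real throughput is somewhat lower.

LINE_RATE_GBPS = {"SAS-2": 6.0, "SAS-3": 12.0}


def usable_mb_per_s(generation: str, lanes: int = 4) -> float:
    """Approximate payload bandwidth in MB/s for `lanes` lanes of one SAS generation."""
    data_gbps = LINE_RATE_GBPS[generation] * 8 / 10  # strip 8b/10b encoding overhead
    per_lane_mb = data_gbps * 1000 / 8               # Gb/s -> MB/s (decimal units)
    return per_lane_mb * lanes


def drives_to_saturate(generation: str, per_drive_mb_s: float, lanes: int = 4) -> float:
    """How many drives streaming flat-out it takes to fill the link."""
    return usable_mb_per_s(generation, lanes) / per_drive_mb_s


if __name__ == "__main__":
    for gen in ("SAS-2", "SAS-3"):
        print(f"{gen} x4: ~{usable_mb_per_s(gen):.0f} MB/s")
    # Hypothetical per-drive speeds, for illustration only.
    print(f"HDDs to fill SAS-3 x4: ~{drives_to_saturate('SAS-3', 250):.1f}")
    print(f"SATA SSDs to fill SAS-3 x4: ~{drives_to_saturate('SAS-3', 550):.1f}")
```

Under these assumptions a SAS-3 ×4 link carries about 4,800 MB/s (SAS-2 about 2,400 MB/s). That takes roughly 19 spinners at full sequential speed to fill, but fewer than 9 SATA SSDs. That’s why spinners rarely saturate it and SSDs make the math matter.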

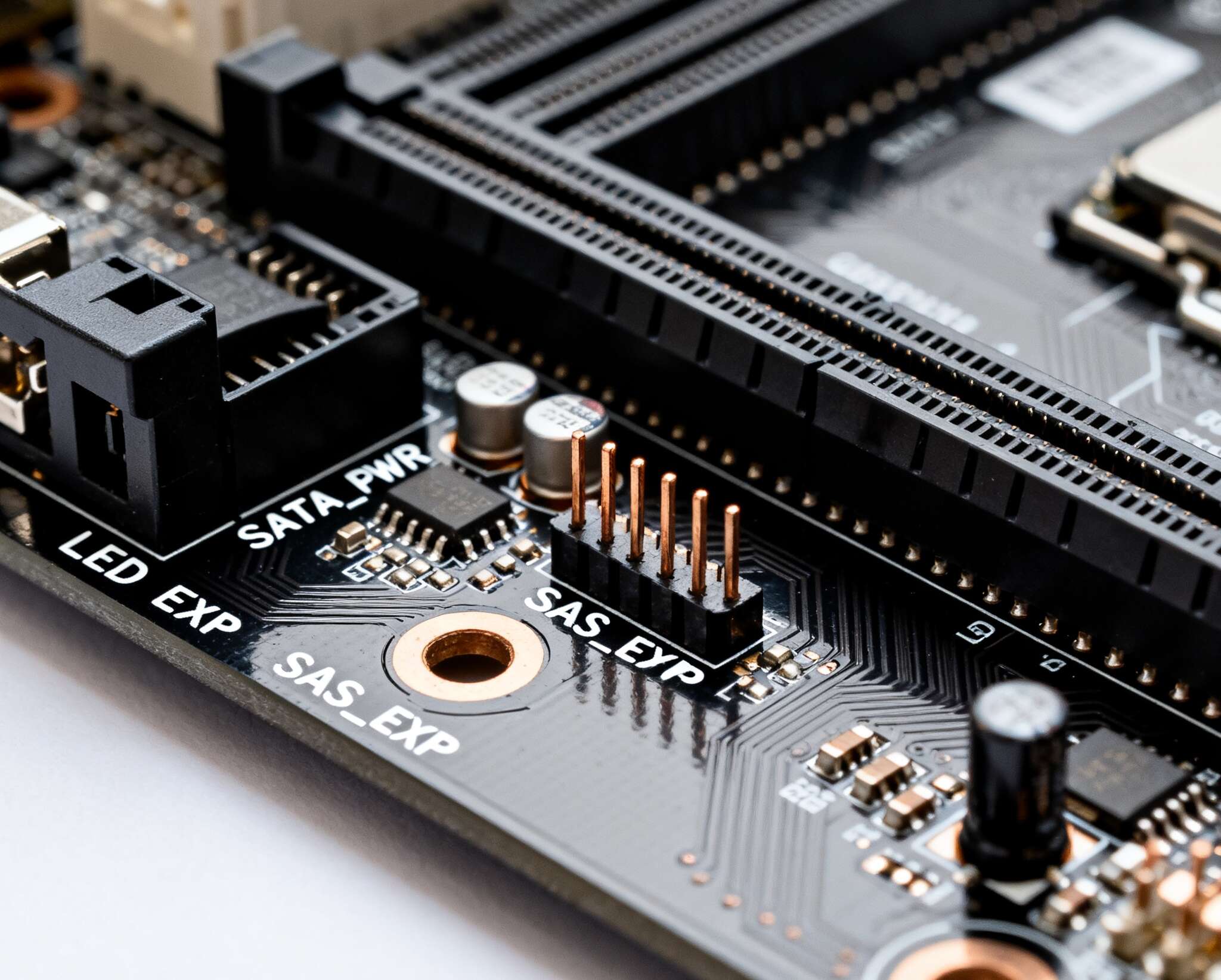

SFF-8087 vs SFF-8643 (Mini-SAS HD) Connectors

Legacy SFF-8087 is common on SAS-2 era gear. SFF-8643 (HD) is the compact, newer-generation internal plug you’ll see on SAS-3 backplanes and HBAs. Both carry four lanes; they just use different shells. Match connectors end-to-end, buy good shielded cables, and keep lengths reasonable. Fewer cables = happier airflow.

Pro tip: avoid random adapter chains. One clean cable beats three dongles every time.

SAS Backplane with SATA Drives (STP) Compatibility

A SAS controller/backplane talks to SATA disks just fine via STP tunneling. The reverse ain’t true: a SATA-only controller/backplane can’t run SAS drives. If you plan to mix SAS and SATA now—or later—choose a SAS backplane. It saves you from silent compatibility gotchas.
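The rule is one-directional, and it applies to every hop between the controller and the drive, so a single SATA-only link in the chain blocks SAS drives. Here’s a minimal sketch of that check. The table and helper names (`SUPPORTED`, `drive_works`, `incompatible_bays`) are hypothetical, just for illustration.

```python
from collections.abc import Iterable

# Drive types each kind of hop (HBA, backplane, expander) can carry.
# SAS hops speak SAS natively and carry SATA drives via STP tunneling;
# SATA-only hops cannot carry SAS drives.
SUPPORTED = {
    "SAS": {"SAS", "SATA"},
    "SATA": {"SATA"},
}


def drive_works(drive: str, hops: Iterable[str]) -> bool:
    """True if every hop between controller and drive can carry this drive type."""
    return all(drive in SUPPORTED[hop] for hop in hops)


def incompatible_bays(drives: list[str], hops: list[str]) -> list[int]:
    """0-based bay indices whose drives this controller/backplane chain can't run."""
    return [i for i, d in enumerate(drives) if not drive_works(d, hops)]


if __name__ == "__main__":
    mixed = ["SATA", "SATA", "SAS", "SAS"]
    print(incompatible_bays(mixed, ["SAS", "SAS"]))   # SAS HBA + SAS backplane -> []
    print(incompatible_bays(mixed, ["SAS", "SATA"]))  # SAS HBA + SATA-only backplane -> [2, 3]
```

Same mixed drive set, two chains: the all-SAS path runs everything, while swapping in a SATA-only backplane strands both SAS drives.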

Bandwidth Planning: Uplink Lanes, Dual-Link, Multipath

Expanders share uplink lanes across bays. That’s normal. What you control is uplink count and topology:

- Single uplink (×4): clean and simple; great for HDD arrays and light SSD presence.

- Dual-link to same expander: fatter pipe for busy pools; reduces oversubscription.

- EL2 + dual HBAs: true multipath, resilience, and easier maintenance windows.

- Queue depth matters: high-IOPS, high-queue-depth workloads (VM farms, small-block DB) on SSDs keep many bays busy at once, so they tend to hit a shared uplink sooner than an HDD pool running big sequential backups.

Think like a networker: how many “lanes” feed your “switch,” and who’s bursting at the same time?
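In networking terms, that’s an oversubscription ratio: peak drive demand divided by uplink capacity. Here’s a minimal sketch, assuming about 1,200 MB/s of payload per SAS-3 lane (600 MB/s for SAS-2) after 8b/10b encoding. It ignores protocol overhead, HBA/PCIe limits, and SATA link-rate matching, and the drive speeds in the example are hypothetical.

```python
import math

# Approximate payload MB/s per lane after 8b/10b encoding (assumption).
# Protocol overhead, HBA/PCIe limits, and SATA link-rate matching are ignored.
PER_LANE_MB_S = {"SAS-2": 600.0, "SAS-3": 1200.0}
LANES_PER_UPLINK = 4  # one mini-SAS x4 cable


def uplink_capacity(uplinks: int, generation: str = "SAS-3") -> float:
    """Total MB/s the expander's uplinks can move toward the HBA."""
    return uplinks * LANES_PER_UPLINK * PER_LANE_MB_S[generation]


def oversubscription(busy_drives: int, per_drive_mb_s: float,
                     uplinks: int, generation: str = "SAS-3") -> float:
    """Peak drive demand / uplink capacity. Above 1.0, the uplink is the bottleneck."""
    return busy_drives * per_drive_mb_s / uplink_capacity(uplinks, generation)


def uplinks_needed(busy_drives: int, per_drive_mb_s: float,
                   generation: str = "SAS-3") -> int:
    """Smallest number of x4 uplinks that keeps the ratio at or below 1.0."""
    demand = busy_drives * per_drive_mb_s
    return max(1, math.ceil(demand / uplink_capacity(1, generation)))


if __name__ == "__main__":
    # Hypothetical 12-bay box with every drive bursting at once (e.g. a scrub).
    for label, mb_s in (("HDD", 250), ("SATA SSD", 550)):
        ratio = oversubscription(12, mb_s, uplinks=1)
        print(f"12x {label}: single-link ratio {ratio:.2f}, "
              f"uplinks needed: {uplinks_needed(12, mb_s)}")
```

With these assumed numbers, twelve busy HDDs sit well under one SAS-3 uplink, while twelve SATA SSDs push past it and want a dual-link. That lines up with the single-link-for-HDD, dual-link-for-SSD advice above.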

Cooling, SES/SGPIO, and Serviceability in a NAS Case

Backplanes with expanders run warm. Give them real airflow: a fan wall, a decent air shroud, and no blocked intakes. Don’t under-volt fans to the point where things start acting weird. SES/SGPIO support is not “nice to have”: it lights the right LED when a disk fails and lets you blink a drive for service. Your future self will thank you.

If you need a tidy, easy-to-service enclosure, start with a proper NAS Case (see NAS Case), not a wobbly, thin steel box that flexes. Chassis stiffness, sled quality, and front panel rigidity all reduce vibration noise and RMA headaches.

Scenario Picks: 4-Bay, 6-Bay, 8-Bay, 9-Bay, 12-Bay NAS

No single “best.” Match the backplane to the bay count, drives, and workload.

4-Bay NAS (home lab, small office)

Visit: 4-Bay NAS

Go direct-attach if you’re running four HDDs and a modest HBA. It’s quiet, simple, and cheap to cable. If you plan to add two SATA SSDs for VM storage, that’s still fine; just keep the cabling neat.

6-Bay NAS (backup + light VM)

Visit: 6-Bay NAS

This is the “on the fence” size. Direct-attach keeps latency low; a small expander backplane cleans up the cable mess and improves airflow. If you routinely run scrubs and VM workloads at once, an EL1 with a dual-link uplink is comfy.

8-Bay NAS (media + containers + snapshots)

Visit: 8-Bay NAS

Now cable management starts to matter a lot. I’d lean toward SAS-3 EL1, with one or two uplinks depending on how write-heavy your tasks are. Add SES so you can blink a drive and pull the right one fast. Don’t guess.

9-Bay NAS (odd-count arrays, ZFS)

Visit: 9-Bay NAS

Nine bays screams “expander me.” With ZFS scrubs and resilvers, bursts get spicy. Plan a dual-link if you host VMs or do frequent snapshot sends. Keep the fan wall honest; expander silicon likes airflow.

12-Bay NAS (SMB file server, lab CI cache)

Visit: 12-Bay NAS

At this point, expander is the default. SAS-3, EL1 minimum; EL2 if you want multipath or are running a mirror of SSDs next to big HDD vdevs. Budget for proper rails and a server rack pc case footprint, not a desk toy.
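The scenario picks above boil down to a few rules of thumb. Here’s a minimal sketch that encodes them as a decision function. The `Build` fields and the bay-count cutoffs are a hypothetical simplification of those picks, not hard limits, and real builds (say, an SSD mirror next to HDD vdevs) may still tip you toward EL2.

```python
from dataclasses import dataclass


@dataclass
class Build:
    bays: int
    needs_multipath: bool = False  # dual HBAs / HA requirement
    bursty_or_vm: bool = False     # VMs, concurrent scrubs, frequent snapshot sends


def pick_backplane(b: Build) -> str:
    """Rules of thumb distilled from the 4- to 12-bay picks; hypothetical cutoffs."""
    if b.needs_multipath:
        return "SAS-3 EL2 (dual expanders, dual HBAs)"
    if b.bays <= 4:
        return "direct-attach"
    if b.bays <= 6 and not b.bursty_or_vm:
        return "direct-attach or small expander"  # the on-the-fence size
    uplink = "dual-link" if b.bursty_or_vm else "single uplink"
    return f"SAS-3 EL1, {uplink}"


if __name__ == "__main__":
    for build in (Build(4), Build(6), Build(6, bursty_or_vm=True),
                  Build(9, bursty_or_vm=True), Build(12, needs_multipath=True)):
        print(build.bays, "bays ->", pick_backplane(build))
```

Treat the output as a starting point for the checklist below, not a verdict; workload and budget still get the final say.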

Port & Speed Cheat Sheet (keep nearby)

| Spec / Connector | What It Is | Lanes | Typical Use |
|---|---|---|---|
| SAS-2 | 6Gb/s per lane | 1 | Older HBAs/backplanes; fine for HDD pools |
| SAS-3 | 12Gb/s per lane | 1 | Current standard; better headroom |
| SFF-8087 (internal) | Mini-SAS (legacy) | ×4 | SAS-2 era HBAs/backplanes |
| SFF-8643 (internal HD) | Mini-SAS HD | ×4 | SAS-3 HBAs/backplanes |
| Expander EL1 | Single expander | n/a | Clean wiring; shared uplinks |
| Expander EL2 | Dual expanders | n/a | Dual path, HA, failover |

(n/a simply means “not a lane format”; it’s a topology feature.)

Deployment Notes: server rack pc case vs atx server case vs computer case server

If you plan to rack it, a server rack pc case gives you better airflow direction, front-to-back fan walls, and proper rails. An atx server case works for tower builds or short-depth racks, but mind cable bend radius and PSU clearance. When people say computer case server, they usually mean “consumer case pressed into server duty”: it can work, but serviceability and drive thermals get tricky.

For purpose-built systems and volume orders, jump to Customization Server Chassis Service. You can spec airflow baffles, backplane type, LED harnessing, and sled design so your techs can swap drives blindfolded (don’t literally).

Where IStoneCase fits (business value, light pitch)

IStoneCase designs enclosures for real operators: data centers, AI labs, MSPs, and builders who care about uptime, not vibes. Need a clean server pc case you can roll out in batches? Want a short-depth rackmount for edge closets, or a silent-ish tower for an office corner? We tune cooling, we validate backplanes with common HBAs, and we’ll re-loom LEDs so fault lights actually map to your bay order. For ready-to-go storage, browse our NAS Case lineup; for batch orders and OEM labels, see Customization Server Chassis Service. If you’re scaling from 4 to 12 bays, step through 4-Bay NAS, 6-Bay NAS, 8-Bay NAS, 9-Bay NAS, and 12-Bay NAS to match your growth curve.

Buying checklist (copy/paste this before checkout)

- Pick backplane type first: Direct-attach for tiny rigs; EL1/EL2 for tidy wiring and growth.

- Confirm SAS-3 where possible; it buys you compatibility and headroom.

- Match SFF-8087/8643 ends; keep cables short and shielded.

- If mixing drives, choose a SAS backplane so SATA works today and SAS tomorrow.

- Plan uplinks: single link for HDD-heavy pools; dual-link for SSD-heavy or bursty jobs.

- Ensure SES/SGPIO for LEDs; service gets faster, mistakes go down.

- Secure cooling: fan wall, shroud, and unobstructed intake; don’t starve the expander.

- Choose the right chassis class: rackmount server rack pc case for dense bays; tower atx server case for office installs.