You’re building a virtualization cluster and the I/O plan keeps biting? Let’s fix it. We’ll talk storage and network lanes, then map them to the server rack pc case you’ll actually rack. I’ll keep it plain, punchy, and tied to real workloads. Where it makes sense, I’ll point to IStoneCase product families so you can move from talk to build.

Quick table — I/O planning → action → chassis notes

| Topic | What to plan | Practical move | Notes for chassis choice |

|---|---|---|---|

| Workload profile | IOPS, throughput, latency, read/write ratio, common block sizes | Capture a week of stats from current systems; don’t guess | Leave room for extra NICs/HBAs; prefer cases with spare PCIe slots and front NVMe bays |

| Shared storage & heartbeat | Stable path for metadata/cluster heartbeat | Put heartbeat/management on its own network or datastore path | Choose a chassis with dual PSUs and clean cable paths to keep mgmt links isolated |

| Multipath I/O | Redundant paths host-side (MPIO/DM-multipath) | Standardize multipath settings across all nodes | Ensure enough PCIe risers for dual HBAs/NICs; room for future fabric jump |

| Virtual disk and block size | VHDX/VMDK format, 4K/512e alignment | Fixed/thick disks for steady perf; keep snapshot chains short | NVMe backplanes help random I/O; check backplane support before commit |

| Live migration / vMotion | Separate network, RDMA if available | Carve a dedicated NIC pair for LM/vMotion; enable compression/RDMA | Prioritize chassis airflow for high-TDP NICs; plan for 25/100GbE upgrades |

| vSAN / distributed storage BW | East-west storage bandwidth baseline | Start at 10GbE for all-flash; many stacks benefit from 25GbE+ | Pick cases with enough NIC space and clear airflow; fans matter here |

| Storage QoS | Noisy-neighbor control | Enable Storage QoS/SIOC; cap bursty tenants | Extra NVMe bays = extra tiers for hot data isolation |

| Big VMs & NUMA | Interrupt/queue placement, parallel I/O | Add storage queues/channels; consider CPU affinity | Taller chassis (3U/4U/6U) = more cooling headroom for many controllers |

Workload profiling: IOPS • throughput • latency (get the truth first)

Before you pick a server pc case, capture how your guests really behave: random vs sequential, hot hours, queue depth, typical block sizes. You’ll avoid “IO blender” chaos and buy the right disks and NICs the first time. Record this profile and carry it through procurement, staging, and go-live. Sounds boring, but it saves your weekend.

Shared storage and heartbeat isolation (keep fences away)

Clusters need shared storage and a steady heartbeat. Don’t let template imports or clone storms slam the same path as your quorum or metadata. Separate them. Different NICs. Different VLANs. Even a tiny SSD mirror for witness can help. When it’s quiet, clusters stay up; when it’s loud, they still stay up.

Multipath I/O on the host (and consistent everywhere)

Put MPIO/DM-multipath on the host and make the config identical across nodes. Your SAN will thank you and failovers feel boring—in a good way. Consistency reduces mean-time-to-recover when 2 a.m. calls you up. Yea, you don’t want that.

Virtual disk format and block size (VHDX/VMDK + 4K/512e)

Use fixed/thick disks for predictable performance. Keep snapshot chains short. Align to 4K where possible or use 512e if your stack expects it. Bad alignment multiplies read-modify-write penalties. Small change, big difference.

Live migration / vMotion network and RDMA (separate it, speed it)

Live migration and vMotion are heavy. Give them their own network and bandwidth. If your fabric supports RDMA, flip it on. You’ll move VMs faster and with less jitter on the rest of the stack. No magic here—just lanes for the trucks.

vSAN / distributed storage bandwidth baseline (10/25GbE and up)

All-flash backends push serious east-west traffic. 10GbE is a floor; many teams standardize on 25GbE or better. Don’t only count peak IOPS; count rebuilds, resync, and rebalancing. That’s when weak links show up and yell.

Storage QoS: tame the noisy neighbor

Enable Storage QoS (or SIOC/SDRS equivalents). Cap spikes. Guarantee minimums. Your polite tenants won’t suffer because a single test box decides to run fio at lunch.

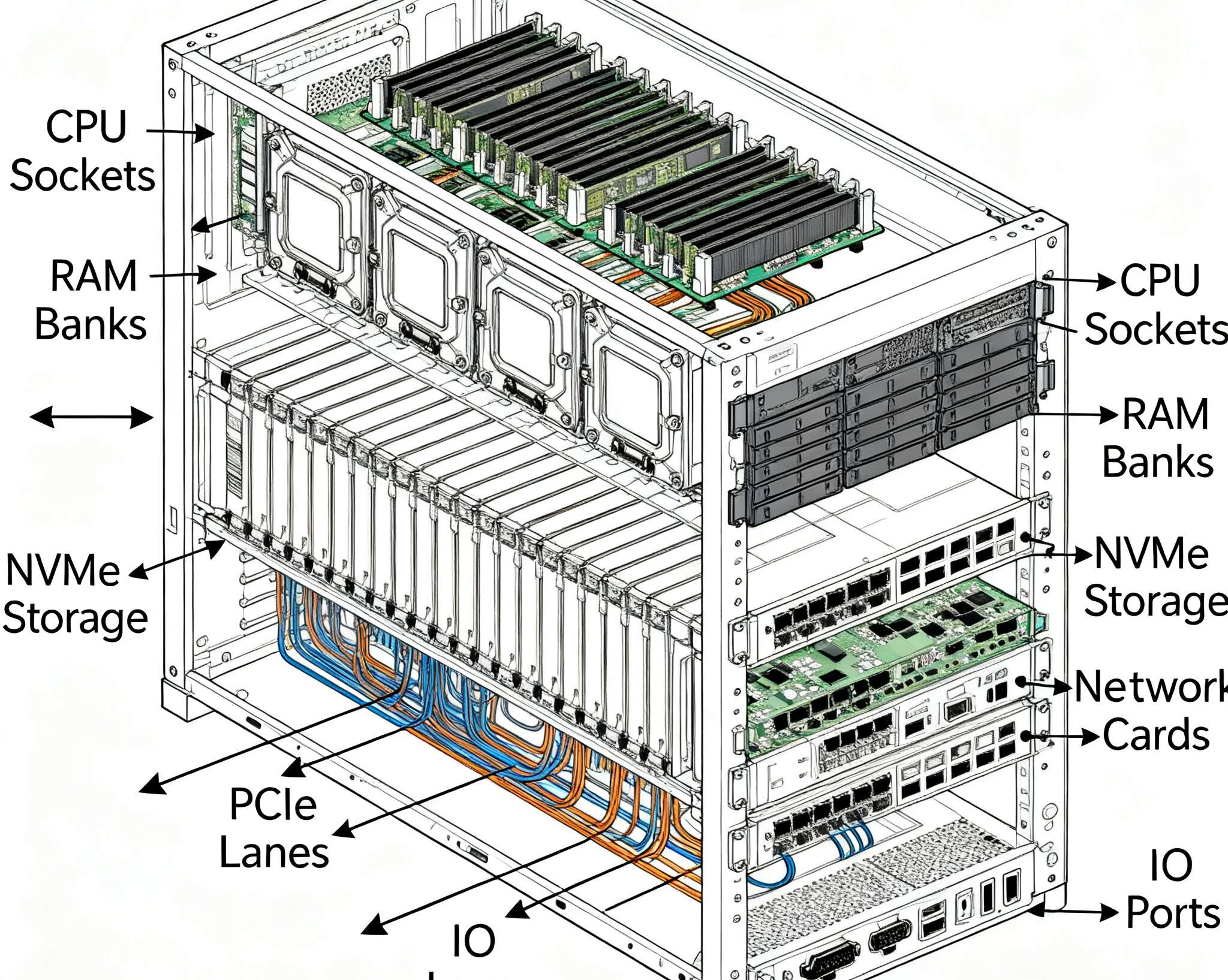

Chassis and backplane as I/O capacity (PCIe, NVMe, OCP NIC)

Think of the computer case server as an I/O capacity pool: PCIe lanes, risers, hot-swap bays, OCP NIC slots, fan walls. Today you might populate 2 NICs and 1 HBA; tomorrow you add 100GbE, more NVMe, maybe a GPU for offload. Pick a chassis that can grow, not just survive day one.

Mapping rack units to real-world roles

Below are practical, no-nonsense roles and how they map to IStoneCase families. Click in to explore specs and options.

1U for front-end and edge (high density, sharp thermals)

Tight space, fast cores, and a couple of NICs? A 1U Server Case keeps density high and airflow direct. Great for stateless front-end, small VDI pods, or network functions. It’s slim, but do mind PCIe slot counts.

2U for balanced clusters (slots + cooling sweet spot)

A 2U Server Case hits the balance: more risers, better fan walls, extra NVMe cages. Ideal for mixed hypervisor nodes where you need a pair of 25/100GbE ports, dual HBAs, and a few hot-swap bays.

3U for storage-rich nodes (more bays, calmer fans)

If you expect high random I/O and lots of local NVMe/SAS tier, a 3U Server Case buys you bays and bigger fans—less whine, more cooling. Great for backup targets, vSAN/Ceph nodes, or big SQL boxes.

4U for expansion and accelerators (headroom matters)

A 4U Server Case leaves room for accelerator cards, extra HBAs, and generous cable management. Perfect when you’re mixing storage controllers with DPUs or need airflow for power-hungry NICs.

6U for specialized and ultra-dense storage

When you need massive local storage, unusual motherboard form factors, or a sea of NVMe, the 6U Server Case gives you mechanical and thermal margin. Not for every rack, but when you need it, nothing else fits.

Guide rails, service loops, and human-friendly maintenance

Tool-less swaps and safe slides keep uptime high. Use Chassis Guide Rail kits sized to your RU and depth. For common builds, you’ll find 2U Chassis Guide Rail and 4U Chassis Guide Rail options ready. Service loops for power and network stop that one-cable-got-shorter drama when you pull a node. Small detail, big sanity.

OEM/ODM: when the standard bill of materials doesn’t cut it

Need a non-standard backplane map, extra NVMe in the front, or OCP NIC 3.0 plus legacy PCIe in the same node? That’s where IStoneCase earns its keep. With Customization Server Chassis Service, you can align thermals, risers, and cable paths to your exact hypervisor image, not “close enough.” We’re IStoneCase—The World’s Leading GPU/Server Case and Storage Chassis OEM/ODM Solution Manufacturer—and we build at scale for data centers, MSPs, research labs, and yes, builders who just want it right.

Headline: chassis families you can actually buy today

If you prefer to browse by family, start at Server Case. You’ll find rackmount, wallmount, NAS-style, and GPU-ready lines for clusters, algorithm centers, and enterprise back offices. Whether you call it atx server case or just a solid server rack pc case, pick the shell that unlocks your I/O plan, not blocks it.

Real-world scenarios (and what to pick)

VDI or app farms (steady small I/O, lots of vMotion)

- Use separate NICs for live migration, keep the path clean.

- 2U or 3U works well: enough risers for dual 25GbE and an HBA.

- NVMe front bays help persistent desktops; airflow stays happy.

All-flash vSAN / Ceph (east-west is king)

- Budget for 25GbE+ east-west from day one.

- Go 3U/4U to get front NVMe and fan wall capacity.

- Keep Storage QoS on; noisy builds will happen, it’s fine.

SQL/OLTP or latency-sensitive stuff

- Fixed/thick disks; 4K alignment; short snapshot chains.

- Pin interrupts and add queue depth as you scale cores.

- Consider 3U for calmer acoustics and cooling headroom.

Edge clusters (tight racks, dust, weird power)

- 1U/2U ruggedized options keep density; use dust filters.

- Out-of-band management on a quiet VLAN; heartbeat separate.

- Rails that match the cabinet save time and fingers (trust me).

Keywords, demystified (plain talk)

You’ll see server pc case, computer case server, server rack pc case, and atx server case used almost interchangeably online. In practice: ATX is about motherboard form factor, rack pc hints at rackmount fitment, and server case signals airflow and serviceability tuned for 24/7 duty. Whatever you call it, the rule holds: pick the chassis that gives your I/O plan lanes, slots, and cooling—then life gets simple.

Why I/O planning decides the chassis (not the other way around)

- Active voice time: you define I/O lanes first; the metal follows.

- You size NICs and HBAs to the profile; then you choose case height for slots and airflow.

- You leave room for tomorrow’s fabric and NVMe tiers; future-you says thanks.

- You keep management paths quiet, even during bursts, so clusters don’t flap.

- You lock QoS, so one tenant can’t wreck the neighborhood.

Small grammar moment: sometimes we over-optimize, and it’s not helpful; don’t worry too much tho. Get the lanes right, keep configs same across nodes, and the stack will just works better, really.