IStoneCase — the world’s leading GPU/server case and storage chassis OEM/ODM solution manufacturer — builds gear for people who actually rack stuff, not just talk about it. Let’s keep it plain and useful.

EIA-310 19-inch rack vs OCP ORV3 21-inch rack (why the spec matters before you buy)

Most deployments still live on the EIA-310 19-inch standard. It’s universal, friendly to legacy rails, and easy to source. OCP ORV3 brings a 21-inch opening, fatter intake, and 48V power shelves with busbars. If you run dense GPUs or plan liquid later, ORV3 helps. If you need broad compatibility today, 19-inch wins. Decide this first, otherwise you’ll chase adapters later and that’s… messy.
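
If you want that decision written down so nobody re-argues it later, here is a minimal sketch. `RackPlan` and `pick_rack_standard` are hypothetical names, not an IStoneCase tool, and the ordering (compatibility today beats everything else) is our reading of the rule of thumb above, so treat it as an assumption.

```python
from dataclasses import dataclass


@dataclass
class RackPlan:
    """Hypothetical planning flags; adjust to your own checklist."""
    dense_gpus: bool
    liquid_planned: bool
    wants_48v_busbar: bool
    needs_broad_compat_today: bool


def pick_rack_standard(plan: RackPlan) -> str:
    """Return a rack standard following the rule of thumb in the text."""
    # Assumption: broad compatibility today overrides the other flags.
    if plan.needs_broad_compat_today:
        return "EIA-310 19-inch"
    # Dense GPUs, planned liquid cooling, or 48V busbar power favor ORV3.
    if plan.dense_gpus or plan.liquid_planned or plan.wants_48v_busbar:
        return "OCP ORV3 21-inch"
    # Nothing pushes toward ORV3, so stay on the common standard.
    return "EIA-310 19-inch"


if __name__ == "__main__":
    plan = RackPlan(dense_gpus=True, liquid_planned=True,
                    wants_48v_busbar=False, needs_broad_compat_today=False)
    print(pick_rack_standard(plan))
```

It is a starting point for a planning doc, not a sizing tool.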

Server Case, 1U/2U/3U/4U/6U (real choices for real rack life)

RU height isn’t vanity. It sets your thermals, serviceability, and cable sanity. Quick map:

- 1U: low-latency edge, stateless services, dense front-to-back airflow.

- 2U: sweet spot for mixed CPU + a couple of GPUs, more fan wall, better acoustics.

- 3U/4U: heavy GPUs, chunky coolers, tons of hot-swap bays.

- 6U: high-capacity storage/NAS or liquid manifolds with room to breathe.
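
RU height also sets how many boxes fit in a rack, and the arithmetic is easy to sketch. One rack unit is 1.75 inches (44.45 mm). The 42U rack and the 2U reserved for switches below are assumptions for illustration, and the function names are made up for this example.

```python
RU_MM = 44.45  # one rack unit = 1.75 in = 44.45 mm


def chassis_height_mm(ru: int) -> float:
    """Nominal height of a chassis that occupies `ru` rack units."""
    return ru * RU_MM


def chassis_per_rack(rack_ru: int, chassis_ru: int, reserved_ru: int = 0) -> int:
    """How many chassis of one height fit after reserving space for other gear."""
    if chassis_ru <= 0:
        raise ValueError("chassis_ru must be positive")
    usable = rack_ru - reserved_ru
    if usable < 0:
        raise ValueError("reserved_ru exceeds rack height")
    return usable // chassis_ru


if __name__ == "__main__":
    # Assumed rack: 42U with 2U held back for top-of-rack switches.
    for ru in (1, 2, 3, 4, 6):
        count = chassis_per_rack(42, ru, reserved_ru=2)
        print(f"{ru}U: {chassis_height_mm(ru):.2f} mm tall, {count} per rack")
```

This counts space only. Power and cooling budgets usually cap the real number sooner.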


Server rack pc case & atx server case: pick form factor with airflow in mind

“server rack pc case” and “atx server case” aren’t just keywords. They flag two traps: motherboard geometry and airflow path. Use ATX/SSI-EEB boards when you can; they align with fan walls and PSU ducts. Keep the path front-to-back. Side-exhaust builds look cute on slides, then melt VRM zones in real racks. Don’t do that.

Server pc case vs computer case server (naming aside, serviceability wins deals)

You’ll hear server pc case and computer case server used interchangeably. The point is service time. Tool-less trays, captive screws, and labeled fan modules cut MTTR. You save days over a year. It’s boring, it’s money, and your on-call folks will actually sleep. IStoneCase leans into those small bits because they pay off in the field.

Chassis Guide Rail (2U/4U): alignment, sag, and finger-safe installs

Rails are not an afterthought. Bad ones bend, bind, or eat your fingers. Choose rails rated for the real mass (fully populated) and check travel clearance at the cold aisle. IStoneCase offers matched rail sets for 2U and 4U chassis.
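
"Rated for the real mass" is a quick sum you can check before ordering. The sketch below is illustrative only: every mass, the rail rating, and the 20% safety margin are made-up assumptions, so pull real numbers from your datasheets.

```python
def populated_mass_kg(chassis_kg: float, parts: dict[str, tuple[int, float]]) -> float:
    """Empty chassis mass plus quantity * unit mass for every installed part."""
    return chassis_kg + sum(qty * unit_kg for qty, unit_kg in parts.values())


def rails_ok(total_kg: float, rail_rating_kg: float, margin: float = 0.2) -> bool:
    """True if the rail rating covers the populated mass plus a safety margin."""
    return total_kg * (1 + margin) <= rail_rating_kg


if __name__ == "__main__":
    # Hypothetical 4U build; all values are placeholders, not vendor specs.
    parts = {
        "drives": (24, 0.7),
        "gpus": (4, 2.0),
        "psus": (2, 1.5),
    }
    total = populated_mass_kg(18.0, parts)
    print(f"populated mass: {total:.1f} kg")
    print("50 kg rails ok:", rails_ok(total, 50.0))
    print("60 kg rails ok:", rails_ok(total, 60.0))
```

Run the check against the fully loaded build, not the empty chassis weight on the spec sheet.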

Customization Server Chassis Service (OEM/ODM): fix workflow, not only the metal

When your topology gets spicy — odd GPU mix, weird NIC placement, non-standard PSUs — you need ODM. We’ll tweak fan walls, shrouds, backplanes, or add L-brackets for cable trays. DFM/DFX, EMI gasketing, front I/O, label packs… it’s all on the table. Hit: Customization Server Chassis Service.

Workload-to-Chassis Quick Map (copy-paste into your plan)

| Workload / Scenario | RU Height | Cooling Path | Storage Bay Strategy | PSU Strategy | Notes | IStoneCase Link |
|---|---|---|---|---|---|---|
| AI inference (moderate GPUs) | 2U | High-pressure front→back | 4–8 hot-swap, OS M.2 | Redundant (N+1) | Keep GPU in direct fan stream; blanking panels stop recirculation | 2U Server Case |
| AI training / heavy GPU | 4U | Front→back, liquid-ready later | 8–24 hot-swap | Dual redundant | Leave headroom for manifold/quick-disconnects | 4U Server Case |
| High-capacity NAS / object | 6U | Front→back with pressure dome | 24–60 hot-swap mix | Dual redundant | Separate intake plenum to cool drive wall | 6U Server Case |
| Edge compute / branch kit | 1U | Straight shot front→back | 2–4 bays | Single + cold spare | Short-depth option for shallow racks | 1U Server Case |
| Lab/dev cluster (ATX boards) | 3U / 4U | Front→back | 8–16 hot-swap | Redundant | “atx server case” with better cable routing | 3U Server Case / 4U Server Case |
| Mixed CPU + GPU storage | 3U / 4U | Fan wall + mid-plane shroud | 12–24 hot-swap | Redundant | Balance GPU thermals vs. drive intake | Server Case |
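
If your plan lives in a script rather than a slide, the table above drops into a small lookup. This is a sketch: `QUICK_MAP` and `rows_for` are hypothetical names, and the data is just the RU, bay, and PSU columns copied from the table.

```python
# Each row: workload -> RU options, hot-swap bay range (min, max), PSU strategy.
QUICK_MAP = {
    "AI inference (moderate GPUs)": {"ru": (2,), "bays": (4, 8), "psu": "Redundant (N+1)"},
    "AI training / heavy GPU": {"ru": (4,), "bays": (8, 24), "psu": "Dual redundant"},
    "High-capacity NAS / object": {"ru": (6,), "bays": (24, 60), "psu": "Dual redundant"},
    "Edge compute / branch kit": {"ru": (1,), "bays": (2, 4), "psu": "Single + cold spare"},
    "Lab/dev cluster (ATX boards)": {"ru": (3, 4), "bays": (8, 16), "psu": "Redundant"},
    "Mixed CPU + GPU storage": {"ru": (3, 4), "bays": (12, 24), "psu": "Redundant"},
}


def rows_for(min_bays: int, max_ru: int) -> list[str]:
    """Workload rows that offer at least `min_bays` bays within `max_ru` of height."""
    return [
        name
        for name, row in QUICK_MAP.items()
        if row["bays"][1] >= min_bays and min(row["ru"]) <= max_ru
    ]


if __name__ == "__main__":
    # Example: need 24 hot-swap bays but only 4U of rack space per node.
    for name in rows_for(min_bays=24, max_ru=4):
        print(name, "->", QUICK_MAP[name]["psu"])
```

The filter only narrows the list. Cooling path and the notes column still need a human read.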

Tiny grammar note: yes, we left “atx server case” lowercase on purpose; folks search it like that, so we speak how they type. It’s fine.

Factory capability checklist (what to ask before you place that PO)

- Thermal validation: fan curves, inlet ΔT, and smoke tests. You want logs, not vibes.

- EMI & grounding: paint-masking strategy on contact points; real gaskets, not just hope.

- Backplane options: SAS/SATA/NVMe mix, expander firmware, slot mapping doc.

- Rail + rack fit: 19-inch vs 21-inch confirmed, hole pattern, and clear rail SKUs.

- Power: redundant PSUs with the right pinout, cable length planned to the millimeter (sorry, to the mm).

- Service flow: hot-swap fans, no-tool drive caddies, silk-screened guides.

- L10/L11: on-line burn-in, OS image load, and final pack by node or by rack.

- Labeling: asset tags, MAC labels at the right face, QR for fast RMA.

- Docs: exploded views, torque specs, spare kit list. Do not skip.

IStoneCase checks those boxes; we build to your runbook so rack day doesn’t go sideways.

Practical scenes: where the metal actually lives

- Cold-aisle rows in a colo: Use higher-static fan walls and tight blanking panels. You’ll stop hot-air washback and keep neighbors happy.

- Campus data rooms: Mixed 1U/2U fleets? Standardize rails and power cords. Future-you will thank present-you.

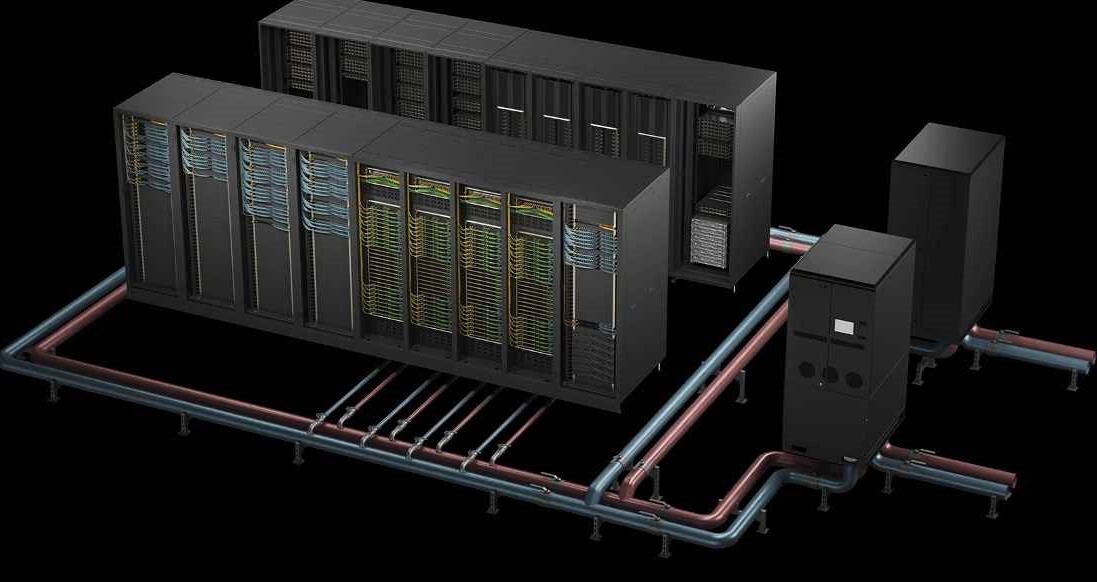

- AI pods: Start with air. Keep front-end inference in 2U; park training in 4U with space for later liquid. Don’t paint yourself into a thermal corner.

- Edge closets: Short-depth 1U with dust filters, lockable bezels, and right-angle power cords so doors actually close.

- Storage walls: 6U plus mid-plane shroud; drive cooling first, compute second. It’s storage; keep it boring and very reliable.

Why IStoneCase fits buyers who need scale, not drama

You need volume SKUs for MSPs and resellers, but also one-off tweaks for that algorithm center that wants a weird NIC riser and the front USB blocked. We do both. Our line spans GPU server cases, rackmount, wallmount, NAS devices, ITX, and matched chassis guide rail kits. We keep the tone practical and delivery predictable. It just work… okay, “works.” You get the point.

Quick SEO-friendly note without fluff (because you asked)

If you look for server rack pc case, server pc case, computer case server, or atx server case, you’re really hunting for thermal headroom, drive density, and service minutes. That’s what we design for, not just pretty bezels.

Mini FAQ (straight talk)

Q: 19-inch or ORV3 21-inch?

If you plan 48V busbar power and dense GPUs soon, go 21-inch. If you need max compatibility today, 19-inch stays king.

Q: Air or liquid?

Start with air at 1–2 GPUs per node. If you hit sustained high TDPs, design the chassis for later quick-disconnects. Don’t over-optimize day one.

Q: Rails? Really that important?

Yes. Get the matched set. Bad rails turn a 5-minute swap into a 40-minute circus.

Closing (what to do next)

- Lock the rack standard (19″ vs 21″).

- Map the workload to RU (table above).

- Choose the chassis and the rails together.

- Pull in ODM only where it unlocks ops speed — fan wall, shroud, backplane, labels.

- Order sample units, do a one-week burn-in, then scale.

Need help picking a chassis right now? Start here: Server Case and jump to the RU that fits your scene. We’ll tune it, we’ll ship it, and your team will get on with the real job.