You run cluster budgets. You watch power, space, and uptime like a hawk. That’s why dual-node chassis keep showing up in real-world rollouts. Two independent servers inside one enclosure trim waste, simplify ops, and stay friendly to dense compute. Below, I’ll keep it practical, pull in field slang, and tie it back to IStoneCase offerings where it helps.

Quick links: Dual-Node Server Case, Dual-Node Server Case 2400TB, Customization Server Chassis Service, IStoneCase homepage

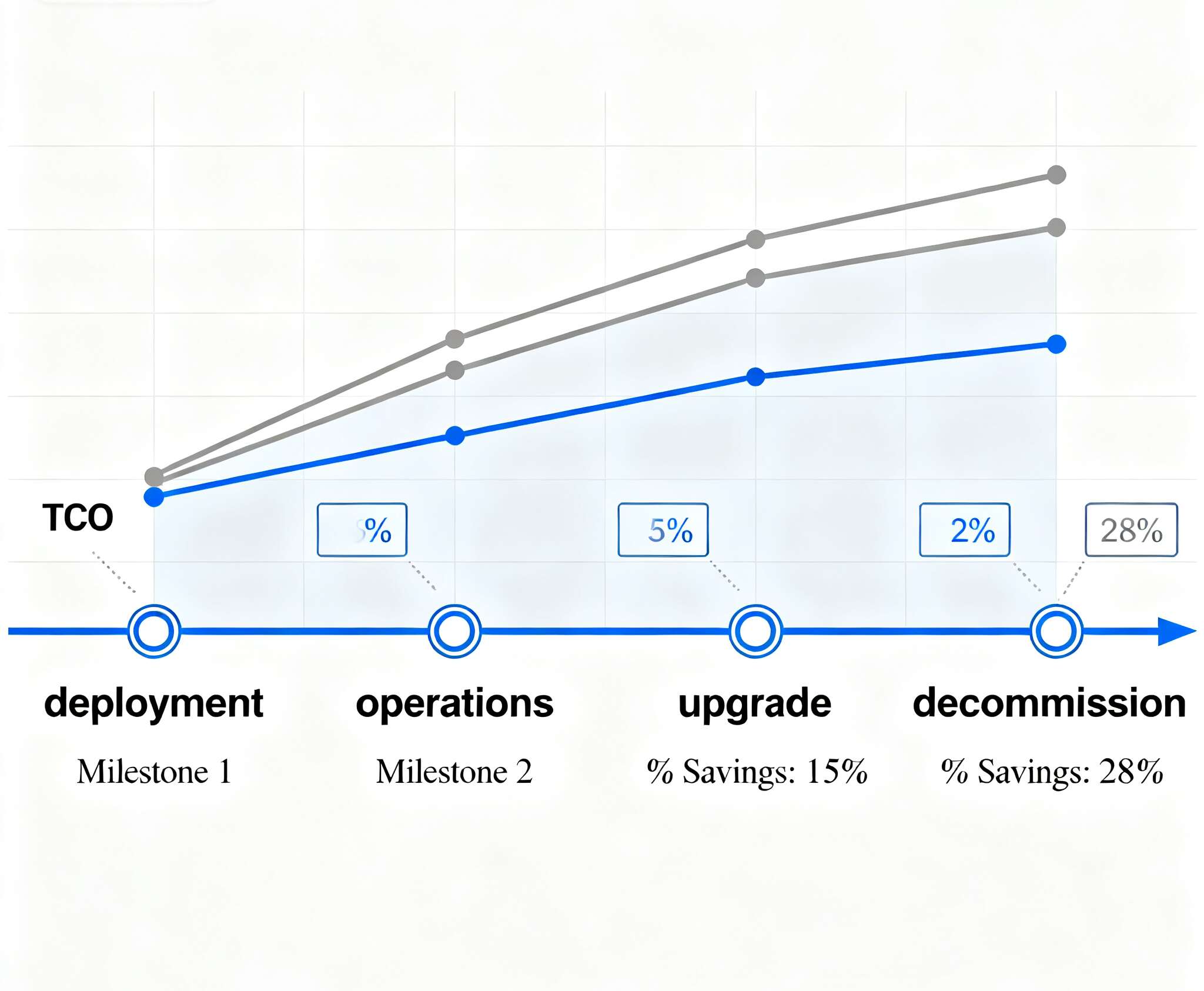

Dual-Node Server Case for HPC & virtualization: TCO basics

Let’s level-set. TCO isn’t just sticker price; it’s rack space, power draw, cooling, cabling, service time, spare parts, and upgrade paths. A dual-node enclosure hosts two independent nodes sharing mechanicals like fans and power supplies. You still get separate CPUs, memory, NICs, and storage paths per node. For HPC (tight loops, batch jobs, east-west traffic) and virtualization (resource pooling, live migration), that shared shell cuts dead weight without cutting performance.

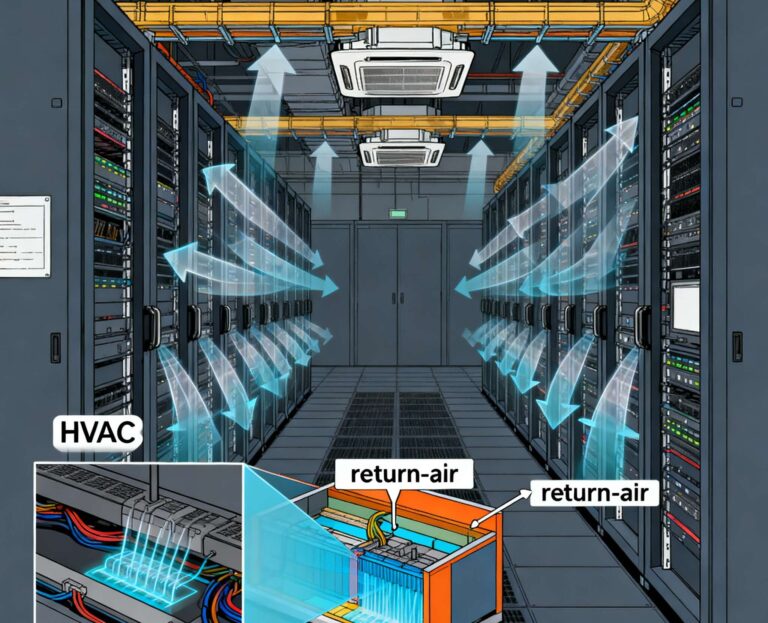

Higher density in a server rack pc case (HPC clusters & VM farms)

In dense rows, every U counts. A dual-node system packs two hosts where you’d expect one. That means fewer rails to mount, fewer labels to scan, and less U-space to rent. For schedulers chasing short MTTR and fast back-fill, tighter packing keeps latency-sensitive workloads close and keeps cabling short.

- You roll 40 hosts? You don’t always need 40 chassis.

- Power whips and PDUs see less sprawl.

- Cold-aisle containment works better when airflow is predictable, not messy.

If you’re comparing models, see IStoneCase’s Dual-Node Server Case and the storage-heavy Dual-Node Server Case 2400TB for hybrid HPC + virtualization stacks.

Shared power & cooling in a computer case server (efficiency without drama)

Two nodes share fans and high-efficiency PSUs. At normal data-center loads, shared components often sit in a better efficiency window. You push fewer watts into the room for the same compute, so the chiller breathes easier. Air shrouds guide flow through hot components; optional high-static-pressure fans keep the speed curve steady under bursty traffic. Less noise, less waste heat. It just work.

Why ops teams like it

- SKU rationalization: fewer spare fan SKUs, fewer PSU variants, simpler RMA bins.

- Predictable thermals: one airflow story across two nodes beats two random stories.

- SLA hygiene: steadier temps → fewer thermal throttles → cleaner performance graphs.

atx server case considerations (serviceability, standards, FRU access)

Even when you run dual-node, you still care about standard parts: ATX/EPS power, front-access hot-swap bays, FRU labeling, and simple rail kits. Field techs shouldn’t wrestle with weird toolings. Look for:

- Front-pull fans with clear arrows (airflow direction),

- OOB ports you can bond into your DCIM,

- Distinct node IDs to avoid “oops wrong server” during swap.

IStoneCase designs target those boring but critical details; if you want custom FRU layouts or badge kits, the Customization Server Chassis Service covers that.

Simplified cabling, O&M, and SLA hygiene in a server pc case

Cabling is where TCO quietly leaks. Dual-node often means:

- Fewer AC cords per host pair,

- Shorter copper runs to TOR (and fewer drops to label),

- One, tidy OOB pattern across two hosts.

That cuts human error. It speeds IMAC (Install, Move, Add, Change). And when something fails, your MTTR shrinks because techs can actually see and reach the right connector. You’ll also feel it in provisioning: PXE, firmware baselines, and templated BIOS settings go faster when the physical layer isn’t a spaghetti bowl.

Tip: tag each node’s OOB with a shared chassis asset tag + node letter. Tiny trick, big win when you’re on a bridge call.

Scale-out fit: HPC schedulers & virtualization clusters

HPC stacks love horizontal growth. Dual-node chassis makes “add 2 more” a near-default move. Queues refill, job placement stays local for NUMA friendliness, and east-west traffic keeps hops low. On the virtualization side, dual-node is friendly to live migration, SR-IOV NIC layouts, and storage vMotion. You can carve nodes for mixed tenancy, then pin the noisy neighbor elsewhere. Fewer enclosures also makes firmware windows less painful. Roll staggered updates, keep the cluster green.

Lifecycle & sustainability: less waste, smoother refresh

Shared mechanicals mean less duplicated metal and plastic across the fleet. Over a few refresh cycles, you carry fewer dead spares, toss fewer parts, and keep e-waste down. That’s not just a feel-good line; it makes audits easier and warehouse shelves cleaner. When you refresh, slide in newer nodes with the same rails and airflow story—no new playbook needed. Your capex and opex don’t whiplash.

Evidence you can skim (table)

| TCO lever (keyword-accurate) | What dual-node changes | Why it matters for HPC & virtualization | Impact style |

|---|---|---|---|

| Server rack pc case density | Two nodes share one enclosure; fewer rails and U-space | More hosts per rack, tighter east-west links, easier cold-aisle design | Lower space pressure, simpler capacity planning |

| Shared PSU & fans in a computer case server | One cooling path and high-efficiency PSUs for two nodes | Less power overhead, smoother thermals, fewer fan SKUs | Power & cooling efficiency, steadier performance |

| Simplified cabling in a server pc case | Fewer AC cords and TOR runs per compute pair | Fewer touch points, faster IMAC, fewer mis-patches | Lower MTTR, cleaner audits |

| OOB & DCIM | Consolidated OOB patterns, easy labeling | Faster troubleshooting, repeatable firmware baselines | Higher uptime during maintenance |

| Lifecycle & FRU logistics | Shared spares, unified rails, standard FRUs | Smaller spare pool, simpler RMA, less e-waste | Smoother refresh cycles |

| HPC & virtualization scale-out | Add capacity by the pair; keep jobs local | Better NUMA locality, easy live migration, SR-IOV friendly | Predictable cluster growth |

No cost math shown here—because your tariffs, cooling, and utilization vary. But the operational pattern is stable across sites.

Real-world scene: bursting AI training in a dual-node lane

Picture a mixed cluster. Some nodes chew on CFD. Others run a fat virtualization pool for databases and app tiers. You’ve got a surprise surge: a month of training runs. With dual-node chassis, you can swing two nodes per enclosure toward the GPU slice or carve them as CPU-heavy VM hosts without re-threading the whole rack. Short copper to TOR keeps jitter low. PSUs don’t scream. When the rush ends, you rebalance—same rails, same airflow, zero drama.

And if you need unusual NIC layouts, front I/O, or a custom faceplate for your service tags, you spec it once. The Customization Server Chassis Service handles ODM tweaks so your NOC playbooks don’t fork.

IStoneCase solutions (accurate keywords, zero fluff)

- Dual-Node Server Case — the baseline enclosure for dense HPC & virtualization rollouts. Good airflow story, service-friendly FRUs, and clean rail kits.

- Dual-Node Server Case 2400TB — when the VM farm or data lake wants heavy local storage, this model pairs dual controllers with serious bay count.

- Customization Server Chassis Service — OEM/ODM options for airflow shrouds, backplane maps, front I/O, and branding. If you operate at scale—data centers, research labs, MSPs—this keeps your fleet consistent.

IStoneCase builds for performance and durability, with SKU paths for GPU server case, rackmount, wallmount, NAS, and ITX. If you buy in bulk or plan phased rollouts, standardizing the chassis pays back in less warehouse chaos and faster rack-and-stack.

Buying notes (keep it practical)

- Map TDP budgets to your aisle temps. Leave headroom for bursty jobs.

- Align NIC topology with your east-west traffic model. Don’t over-subscribe just because “it was fine last year.”

- Keep firmware baselines in code. If the chassis stays the same, your golden image stays boring. That’s good.

- Don’t ignore front-of-rack ergonomics. If a tech can’t yank a fan with gloves on, your MTTR will hate you.

- Finally, test live migration under stress before go-live. Dual-node makes it easier; still, trust but verify.