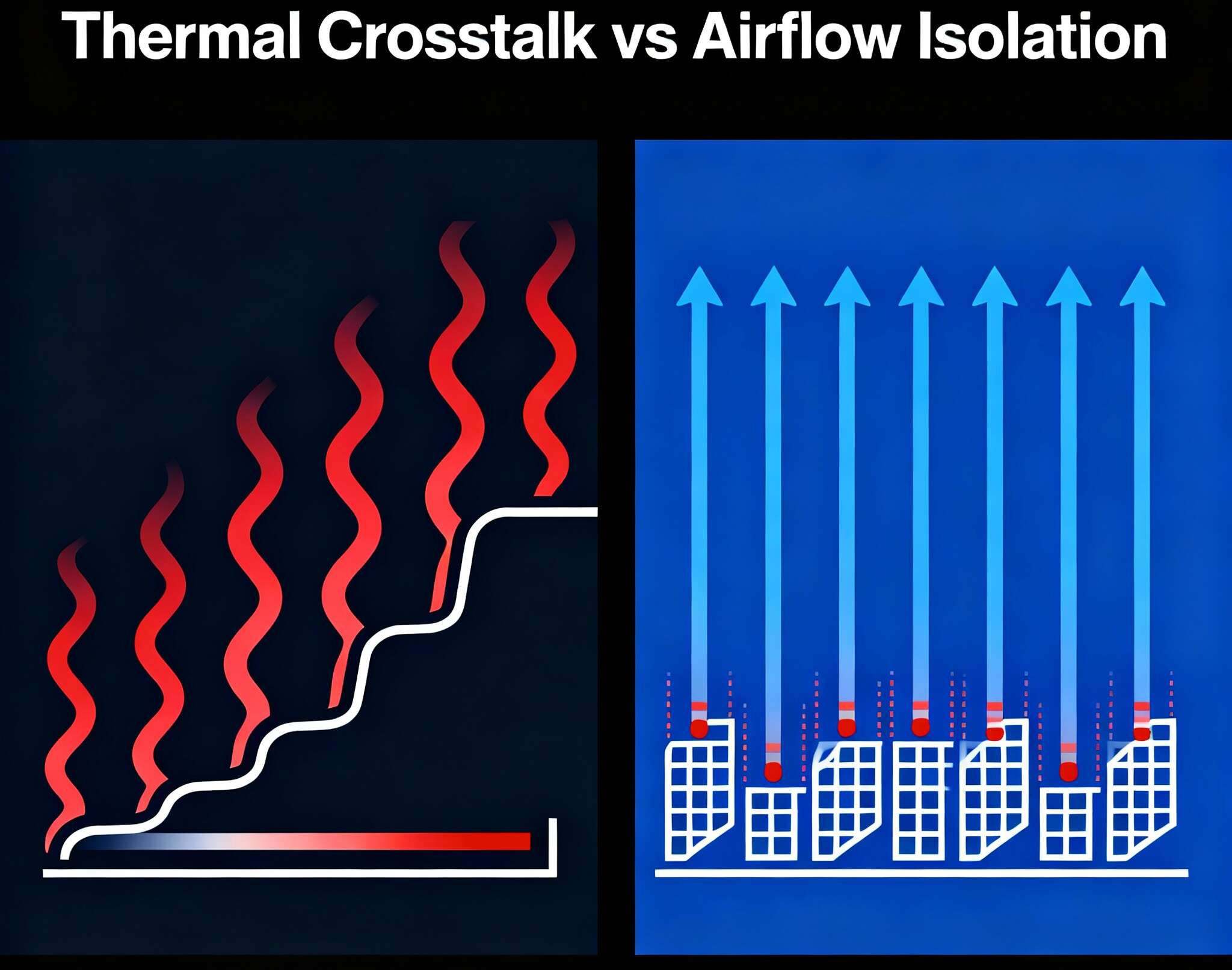

You’ve seen it: one node runs a bursty job, fans ramp, nearby nodes heat up for no “good” reason, and throttling sneaks in. That’s thermal crosstalk. The fix isn’t magic—just airflow discipline. In this piece I’ll keep it plain, hands-on, and tied to real rack life. I’ll also point to where IStoneCase fits, because hardware that respects airflow rules saves you headaches later.

Quick note: I’ll use common DC lingo (cold aisle containment, ΔP, bypass air, recirc, shroud, dummy sled) because that’s how teams actually talk on the floor.

Thermal crosstalk between nodes

When adjacent sleds share a pressure plane, a “hungry” node can steal intake from a “quiet” neighbor. In closed aisles, this turns into competition for a limited cold air supply. Result: inlet temp excursions, oscillating fan RPMs, and weird performance dips you can’t reproduce on a bench.

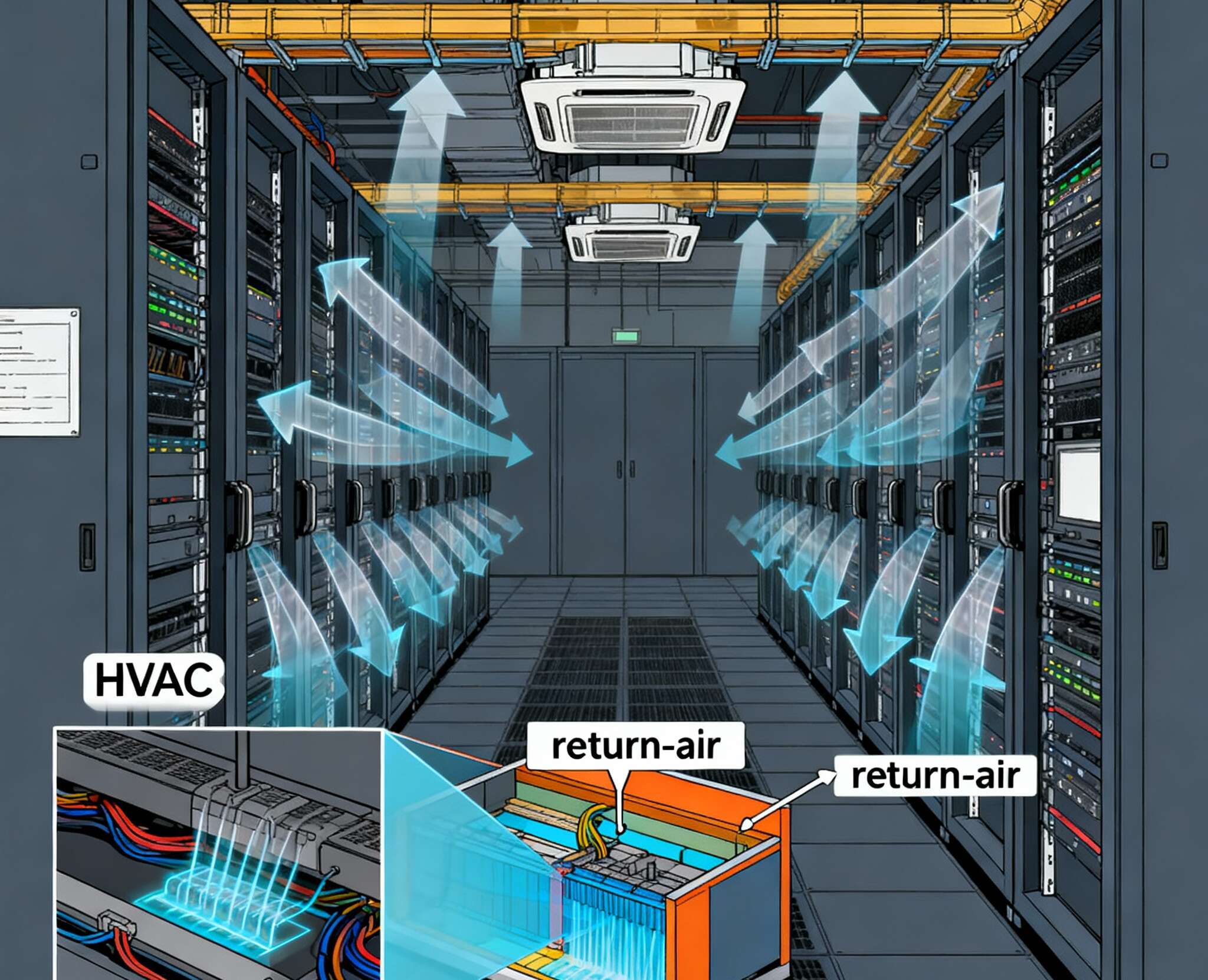

Cold aisle containment and airflow competition

Containment is great, but it raises the bar for balance. Without enough static pressure at the perforated tiles (or front door), you’ll see reverse flow and hot air recirculation. Short version: if supply can’t keep up, nodes bite each other’s airflow. You notice it as “why is Node-B hot when Node-A spikes?”
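If you want to put a number on the "Node-B is hot when Node-A spikes" hunch, one rough way is to correlate the neighbor's power draw with this node's inlet temperature, allowing for a short delay. The sketch below is only an illustration: the function names, the sample series, and the `max_lag` default are assumptions, not values from any particular BMC or DCIM tool.

```python
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation of two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length series with at least 2 samples")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a flat series carries no correlation information
    return cov / (var_x * var_y) ** 0.5


def crosstalk_score(neighbor_power_w, inlet_temp_c, max_lag=3):
    """Return (lag, r): the lag in samples, from 0 to max_lag, at which the
    neighbor's power best correlates with this node's inlet temperature."""
    best_lag, best_r = 0, 0.0
    for lag in range(max_lag + 1):
        xs = neighbor_power_w[: len(neighbor_power_w) - lag]
        ys = inlet_temp_c[lag:]
        if len(xs) < 2:
            break
        r = pearson(xs, ys)
        if r > best_r:
            best_lag, best_r = lag, r
    return best_lag, best_r


# Hypothetical samples: Node-A power (W) and Node-B inlet temperature (°C).
node_a_power = [200, 200, 450, 450, 200, 200, 450, 450]
node_b_inlet = [22, 22, 22, 25, 25, 22, 22, 25]
lag, r = crosstalk_score(node_a_power, node_b_inlet)
print(f"best lag: {lag} sample(s), correlation: {r:.2f}")
```

A high score at a small positive lag is a hint, not proof. Confirm with pressure or flow-direction readings before you blame the neighbor.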

Airflow isolation between nodes

Isolation reduces crosstalk by forcing air to go through each node, not around it. Think: well-fitted air shrouds, blanking panels, sealed cable cutouts, and dummy/blank sleds in empty bays. That cuts the sneaky bypass paths and stabilizes each node’s intake.

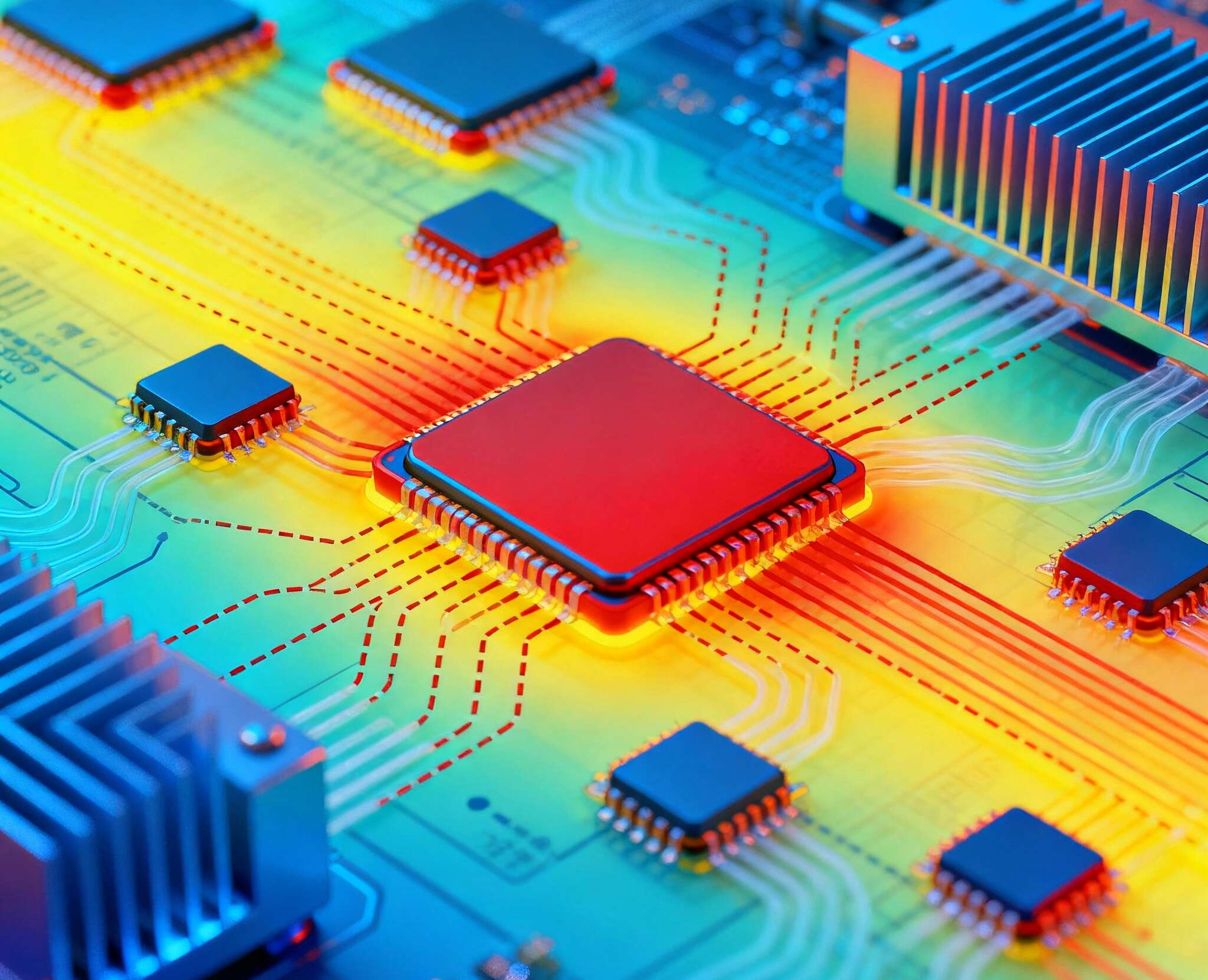

Air shroud, blanking panels, dummy sleds

- Air shrouds route cold air over CPU, DIMMs, NVMe, VRMs—no wandering.

- Blanking panels stop front-to-back short-circuit flow through empty U-spaces.

- Dummy sleds keep multi-node chassis from turning empty slots into leaks.

- Cable baffles & gaskets close those “not-a-big-deal” gaps that are a big deal.

Pressure control and airflow direction monitoring

Don’t watch temperature alone; it’s laggy. Watch pressure and flow direction at the aisle and, if you can, per rack. A simple ΔP setpoint across the cold aisle and rack face reduces the yo-yo effect. If your CRAH/CRAC supports it, run pressure-based control (with temperature as a guardrail) so nodes don’t fight for air every time a workload surges.
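Pressure-based control with temperature as a guardrail could look roughly like the sketch below. It is a plain PI loop on ΔP that jumps to full output when the hottest inlet crosses a limit. The class name, gains, setpoint, and limits are all illustrative assumptions; a real CRAH/CRAC exposes its own control interface and tuning.

```python
from dataclasses import dataclass, field


@dataclass
class PressureController:
    """PI control on cold-aisle differential pressure, with a temperature guardrail.
    All default values are hypothetical, not vendor settings."""
    dp_setpoint_pa: float = 5.0   # assumed target ΔP, in pascals
    kp: float = 4.0               # proportional gain (% output per Pa)
    ki: float = 0.5               # integral gain (% output per Pa*s)
    base_pct: float = 50.0        # output when ΔP error is zero
    min_pct: float = 30.0
    max_pct: float = 100.0
    temp_limit_c: float = 27.0    # assumed inlet guardrail
    _integral: float = field(default=0.0, init=False, repr=False)

    def step(self, dp_pa, max_inlet_c, dt_s=1.0):
        """Return the supply fan output (%) for one control interval."""
        # Guardrail: temperature overrides pressure control entirely.
        if max_inlet_c >= self.temp_limit_c:
            return self.max_pct
        error = self.dp_setpoint_pa - dp_pa
        candidate = self._integral + error * dt_s
        raw = self.base_pct + self.kp * error + self.ki * candidate
        out = max(self.min_pct, min(self.max_pct, raw))
        # Simple anti-windup: only accumulate while the output is not clamped.
        if out == raw:
            self._integral = candidate
        return out


ctrl = PressureController()
for dp, inlet in [(5.0, 22.0), (3.0, 22.5), (5.0, 28.0)]:
    print(dp, inlet, ctrl.step(dp_pa=dp, max_inlet_c=inlet))
```

The point of the design is that ΔP reacts before inlet temperature does, so the loop adds supply air as soon as a node starts pulling harder, and temperature only steps in when something has already gone wrong.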

Workload-aware thermal orchestration

Software helps too. If two neighbors both hit turbo, they’ll yank airflow at the same time. Use your scheduler to stagger hot jobs, cap transient turbo on tightly packed sleds, or migrate spiky tasks off a cluster that’s already at fan-limit. Sounds fancy, but a couple of simple rules—“don’t co-locate two max-TDP jobs in adjacent bays when aisle ΔP is low”—go far.
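The adjacent-bay rule above is easy to express as a placement check. This is a minimal sketch, not a plugin for any real scheduler: `can_place`, the bay-index layout, and the thresholds (`max_tdp_w`, `low_dp_pa`, `hot_fraction`) are hypothetical and would need to match your own hardware and aisle.

```python
def can_place(bay, job_tdp_w, occupied, aisle_dp_pa, *,
              max_tdp_w=350.0, low_dp_pa=3.0, hot_fraction=0.9):
    """Decide whether a job may be placed in `bay`.

    occupied: dict mapping bay index -> TDP (W) of the job already running there.
    Bays are assumed to be numbered so that bay-1 and bay+1 are the neighbors.
    """
    # With healthy aisle pressure, the rule does not apply.
    if aisle_dp_pa >= low_dp_pa:
        return True
    hot_threshold = max_tdp_w * hot_fraction
    # Cool jobs can go anywhere.
    if job_tdp_w < hot_threshold:
        return True
    # A hot job is allowed only if neither neighbor is already running hot.
    return all(occupied.get(n, 0.0) < hot_threshold for n in (bay - 1, bay + 1))


running = {0: 350.0}  # bay 0 already hosts a max-TDP job
print(can_place(1, 350.0, running, aisle_dp_pa=2.0))  # next to the hot bay, low ΔP
print(can_place(2, 350.0, running, aisle_dp_pa=2.0))  # one bay further away
```

A check like this can run as a filter before the scheduler's normal scoring, so it only vetoes the placements that would stack two hot neighbors on a starved aisle.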

Field-tested claims, symptoms, fixes (with IStoneCase fit)

| Claim (what’s true) | What you’ll see in the rack | What actually fixes it | Where IStoneCase helps |
|---|---|---|---|
| Nodes compete for limited cold air in containment | Inlet temps swing 4–8°C, fans chase, throttling appears under burst | Maintain aisle ΔP, add tile CFM where the hot rows live, verify door perforation | Use a well-sealed chassis; our Dual-Node Server Case ships with tight shrouds and bay seals |
| Bypass paths cause crosstalk | Hot spots near empty U-spaces, “mystery” warm intakes | Blanking panels and cable grommets, no exceptions | Chassis + rack kits from IStoneCase pair with panels that actually fit; fewer “temporary” gaps |
| Empty sled = giant leak | Neighbor node needs higher fan RPM to hold inlet | Install dummy/blank sleds; keep the pressure path continuous | Multi-node options in the Dual-Node Server Case 2400TB lineup include filler sleds |
| Shroud mismatch after upgrades | After CPU/GPU swap, temps rise “for no reason” | Re-spec air shrouds to new heat sources; validate fit with smoke/CFD | Our OEM/ODM team maps real heat sources; see Customization Server Chassis Service |
| Only temp sensors? You’re late | Fans lag, alarms come after a cliff | Add directional flow and pressure probes; control on ΔP | Chassis prepared for front-face probe mounting; easier instrumentation |

Practical scenarios (what teams actually do)

- Edge racks with mixed gear: One computer case server runs storage-heavy, the neighbor is compute-heavy. Storage pulls low CFM, compute is spiky. You isolate with shrouds and blanking panels, then set a minimum ΔP so the compute sled doesn’t starve the storage sled when it surges. If you need a compact ATX server case, we can tune the intake geometry; check the Customization Server Chassis Service.

- Four-node chassis, one bay empty: You think it’s fine. It ain’t. That gap becomes a bypass. Pop in the dummy sled and watch neighboring CPU inlet temps drop. Our Dual-Node Server Case supports blank fillers so you don’t run “open-mouth” bays.

- High-density GPU aisle: Fans are already loud, and adding containment exposed the weak spots. Seal the cable cutouts, verify door CFM, and move to pressure-based cooling control. For a tight server pc case fitout, OEM the shroud to clear real GPU heights via Customization Server Chassis Service.

- Rollout for MSPs: Mixed clients, mixed loads, one bill. You don’t want surprises. Standardize on a chassis that preserves airflow by design. Our Dual-Node Server Case 2400TB keeps throughput high without turning the neighboring node into a space heater.

Metrics & checklist for airflow isolation

| Category | What to track | Good practice |
|---|---|---|
| Pressure | Cold aisle to room ΔP; rack face ΔP | Keep a steady setpoint so nodes don’t “suck wind” from neighbors |
| Flow direction | Detect reverse flow at inlets | Directional probes on problem racks; alarms on flow flip |
| Intake temperature | Per node, not just per rack | Follow the variation: the swing matters as much as the mean |
| Fan headroom | % RPM vs. max | Leave margin so bursts don’t slam into the ceiling |
| Bypass control | % of U-spaces blanked; dummy sled usage | 100% blanking on empties; no excuses |
| Shroud fit | Visual + smoke + (if available) quick CFD | Re-shroud after CPU/GPU refresh; don’t assume “close enough” |
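A few of the checklist numbers are simple arithmetic once you have the readings. The helpers below are a sketch with hypothetical names and made-up sample values, just to pin down what "swing", "headroom", and "% blanked" mean in practice.

```python
def inlet_swing_c(samples_c):
    """Peak-to-peak inlet temperature over a window, in °C."""
    return max(samples_c) - min(samples_c)


def fan_headroom_pct(rpm, max_rpm):
    """Share of fan speed still unused, as a percentage of max RPM."""
    return 100.0 * (1.0 - rpm / max_rpm)


def blanking_pct(empty_u, blanked_u):
    """Percentage of empty U-spaces that have a blanking panel fitted."""
    if empty_u == 0:
        return 100.0  # nothing empty means nothing left to blank
    return 100.0 * blanked_u / empty_u


# Hypothetical readings for one node and one rack.
print(inlet_swing_c([22.0, 26.5, 23.0]))
print(fan_headroom_pct(rpm=9000, max_rpm=12000))
print(blanking_pct(empty_u=8, blanked_u=6))
```

Track these per node and per rack over time; a growing swing or shrinking headroom usually shows up before the temperature alarms do.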

Small but real: don’t forget cable grommets. Those tiny slots leak a lot of CFM when you add them all up. Close them and your graphs calm down.

Why this matters for performance, uptime, and yes—business

Less crosstalk means fewer throttling events, longer component life, and lower fan energy. That’s not abstract. If your server rack pc case preserves pressure and blocks bypass, your cluster runs steadier under the same power envelope. And when you scale, you carry the same airflow playbook forward—no surprise fire drills.

IStoneCase helps in two ways:

- Good bones: A chassis that doesn’t leak. Our Dual-Node Server Case and the high-capacity Dual-Node Server Case 2400TB route air where heat actually lives.

- Custom fit: OEM/ODM tweaks for shroud geometry, bay seals, sled fillers, probe mounts—so your computer case server plan lines up with real-world thermals. That’s the point of Customization Server Chassis Service.